By Sina Pakazad, Vice President, Data Science, C3 AI, Henrik Ohlsson, Chief Data Scientist, Data Science, C3 AI, Aarushi Dhanuka, Data Scientist, Data Science, C3 AI, Ansh Guglani, Senior Product Manager, Gen AI Products, C3 AI, and John Abelt, Director, Generative AI Products, C3 AI

The emergence of autonomous coding agents (ACAs) marks a pivotal moment in the evolution of generative AI. Building on the reasoning and tool-use capabilities of large language models (LLMs), ACAs extend beyond developer productivity, empowering non-technical users to design, adapt, and deploy complex processes.

However, most of the industry’s adoption so far has focused on developer-facing ACAs: tools that assist with coding tasks or accelerate CI/CD pipelines. The real transformation begins with headless ACAs that run in the cloud, can be used freely and at scale, and are embedded within applications themselves. These agents enable software systems to evolve dynamically as usage patterns shift, new demands emerge, or fresh data becomes available. This is a paradigm shift in how we think about software: not as something written and deployed once, but as a living system capable of both self-directed and guided adaptation.

At C3 AI, we are operationalizing ACAs as core cloud infrastructure for enterprise software, extending their role beyond developer productivity. In addition to accelerating engineering workflows, we enable the embedding of remotely programmable ACAs directly into production systems for data integration, workflow generation, and decision support. These agents operate as distributed composable services that reason over enterprise data, collaborate with one another, and adapt continuously as business needs evolve.

This approach redefines enterprise software as an adaptive network of intelligent components, each capable of evolving with the business it serves. Keep reading to see how autonomous coding agents are transforming enterprise software, from static code to adaptive, reasoning systems that evolve with every interaction.

Figure 1: Traditional software development using autonomous coding agents (ACAs) produces static software: systems that execute predefined logic but remain disconnected from real-world changes and evolving user needs. In the new paradigm, ACAs are not only used to create software but are embedded within it, enabling applications to reason, adapt, and respond dynamically as data, context, and user intent evolve.

From Generative AI to Agentic Systems

LLMs have rapidly become the workhorses of modern AI applications, powering solutions across industries and use cases. Despite their well-known quirks (non-determinism, hallucinations, and sycophancy), advances in fine-tuning, prompting strategies, and safety guardrails have enabled practitioners to harness their remarkable capabilities while mitigating unwanted behaviors.

A pivotal breakthrough in this evolution has been the emergence of agents: LLMs augmented with reasoning and self-reflection, tool use, and memory. These agents are often purpose-built for specific tasks, leveraging specialized tools and accumulated experience, stored in both short- and long-term memory, to operate either collaboratively with humans or fully autonomously. When orchestrated with the right combination of tools, prompts, and objectives, agents can now perform complex workflows that once required extensive manual effort and coordination.

The introduction of multi-agent workflows pushed this concept even further, allowing agents to coordinate, share context, and collectively execute multi-stage processes that once demanded cross-team orchestration.

Autonomous Coding Agents: The Next Evolution in AI-Driven Software

The next frontier in this progression is the rise of ACAs. Recent advances in LLM reasoning and code synthesis have given rise to generalist coding agents capable of tackling sophisticated development tasks when equipped with basic toolkits such as code-execution sandboxes, web search, and environment-management utilities.

An ACA typically consists of three core components:

- The LLM: Drives reasoning and code generation.

- The Coding Agent: Structures task execution, manages state, and applies reasoning loops.

- The Sandbox Infrastructure: Provides a controlled environment for safe, reproducible code execution and iteration.
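
To make the division of labor concrete, here is a minimal sketch of how the three components might fit together. It is not the design of any product named in this article: `scripted_llm`, `Sandbox`, `CodingAgent`, and `RunResult` are hypothetical names, the "LLM" is a scripted stand-in rather than a real model call, and a plain subprocess is used only for illustration (it is not a real isolation boundary).

```python
import subprocess
import sys
import tempfile
from dataclasses import dataclass
from pathlib import Path
from typing import Callable

# Hypothetical stand-in for an LLM call: prompt in, Python source out.
LLM = Callable[[str], str]


@dataclass
class RunResult:
    ok: bool
    output: str


class Sandbox:
    """Runs code in a separate interpreter inside a throwaway directory.

    A production sandbox would add container or VM isolation and resource
    limits; a subprocess alone is NOT a security boundary.
    """

    def run(self, source: str, timeout_s: float = 10.0) -> RunResult:
        with tempfile.TemporaryDirectory() as workdir:
            script = Path(workdir) / "task.py"
            script.write_text(source)
            try:
                proc = subprocess.run(
                    [sys.executable, str(script)],
                    cwd=workdir, capture_output=True, text=True, timeout=timeout_s,
                )
            except subprocess.TimeoutExpired:
                return RunResult(False, "timed out")
        return RunResult(proc.returncode == 0, proc.stdout + proc.stderr)


class CodingAgent:
    """Structures the task: generate, execute, and retry with feedback."""

    def __init__(self, llm: LLM, sandbox: Sandbox, max_attempts: int = 3):
        self.llm, self.sandbox, self.max_attempts = llm, sandbox, max_attempts
        self.history: list[RunResult] = []  # state kept across attempts

    def solve(self, task: str) -> RunResult:
        prompt = task
        for _ in range(self.max_attempts):
            result = self.sandbox.run(self.llm(prompt))
            self.history.append(result)
            if result.ok:
                return result
            # Self-correction: feed the error output back into the next prompt.
            prompt = f"{task}\n\nPrevious attempt failed with:\n{result.output}"
        return result


def scripted_llm(prompt: str) -> str:
    """Toy model: emits buggy code first, then a fix once it sees an error."""
    if "Previous attempt failed" in prompt:
        return "print(sum(range(1, 11)))"
    return "print(sum(range(1, 11))"  # missing parenthesis -> SyntaxError


agent = CodingAgent(scripted_llm, Sandbox())
final = agent.solve("Print the sum of the integers 1 through 10.")
print(final.ok, final.output.strip(), len(agent.history))  # True 55 2
```

The agent never trusts generated code directly; it only sees what the sandbox reports back, which is what makes the retry step possible.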

In this fast-moving space, several notable systems have emerged: Claude Code, Codex, OpenHands, Devin, Cursor, Windsurf, and others. Each implements bespoke coding agents, designed either to integrate tightly with proprietary LLMs (e.g., Claude Code, Codex) or operate in a model-agnostic manner, depending on user preference. They expose their capabilities through IDE plugins, web-based IDE-like interfaces, CI/CD integrations, and programmatic access via SDKs or CLI tools. Most also include local or cloud-based sandboxing (often limited to CI/CD contexts) for safe code testing and validation.

While these agents have already transformed software development by accelerating CI/CD cycles and boosting developer productivity, their true promise extends far beyond efficiency gains: letting non-developers build and adapt software, and letting applications keep adapting after deployment. That promise is not just a step toward the democratization of software creation, but a fundamental reimagining of software itself: from static deployments to living systems capable of continuous self-adaptation.

Rethinking Interaction and Accessibility: Embedding Autonomous Coding Agents in the Cloud

Realizing this vision, however, requires reimagining how users interact with ACAs. Current commercial offerings largely confine interactions to predefined UIs or narrow workflows. It is as if early LLMs had been accessible only through a single chat window, only locally on your machine, or only as part of specific workflows. These limitations stifle innovation and make it difficult to embed ACAs deeply into complex, multi-step systems or deliver adaptive software experiences at enterprise scale.

Imagine if LLMs had launched with the same restrictions many ACA offerings still impose today. You could still access them through a local SDK, but only on your laptop or within pre-specified usage processes. They would work fine for one-off experiments or simple automations, but the moment you wanted to deploy them at scale, integrate them into a product, or connect them across workflows, you’d hit a wall. The model would only run inside pre-approved environments or rigid frameworks, each with its own rules and limits.

In that world, LLMs would never have become the flexible, API-driven infrastructure we rely on today. They would have remained powerful but siloed tools, bound to local experimentation instead of fueling large-scale applications. That’s where most ACA offerings are stuck now: usable locally, but not yet freely programmable in the cloud, where they can evolve, scale, and collaborate across systems.

By contrast, unrestricted, remote access to ACAs, operating as cloud-native services, enables seamless embedding within applications, pipelines, and workflows. It empowers end users to construct, adapt, and evolve sophisticated processes programmatically, unlocking the full potential of autonomous agents as a foundation for dynamic, self-improving software systems.

Enterprise AI in Action: C3 AI and the C3 Agentic AI Platform, Powered by OpenHands

At C3 AI, we have been building for this inevitable future. Over the past few months, we have developed systems that leverage autonomous coding agents not merely to accelerate developer workflows, but to empower non-developers, subject-matter experts, and end users to design, modify, and deploy complex dynamic processes on their own. We have used ACAs for a variety of use cases, including data integration and analysis, agentic workflow generation (STAFF), and decision-support systems (Alchemist), among others.

These solutions serve as composable building blocks, powered by remote, LLM-style interactions with ACAs that enable non-technical users to transform how they work, bridging natural-language intent, data-driven insights, and optimized decision making. To this end, we have leveraged OpenHands as our ACA of choice.

Among existing ACAs, OpenHands (Cloud) stands out for providing full remote programmability and unconstrained interaction in the cloud, resulting in unlimited scalability. Developers can control autonomous coding agents via SDKs with the same flexibility and precision as an LLM endpoint. Beneath this simplicity lies a sophisticated reasoning loop. Invoking an ACA triggers a cycle of code generation, execution, and self-correction within a secure sandbox, yielding reliable, verifiable results.

Alchemist: Old Agentic Paradigm vs. New ACA-based Paradigm

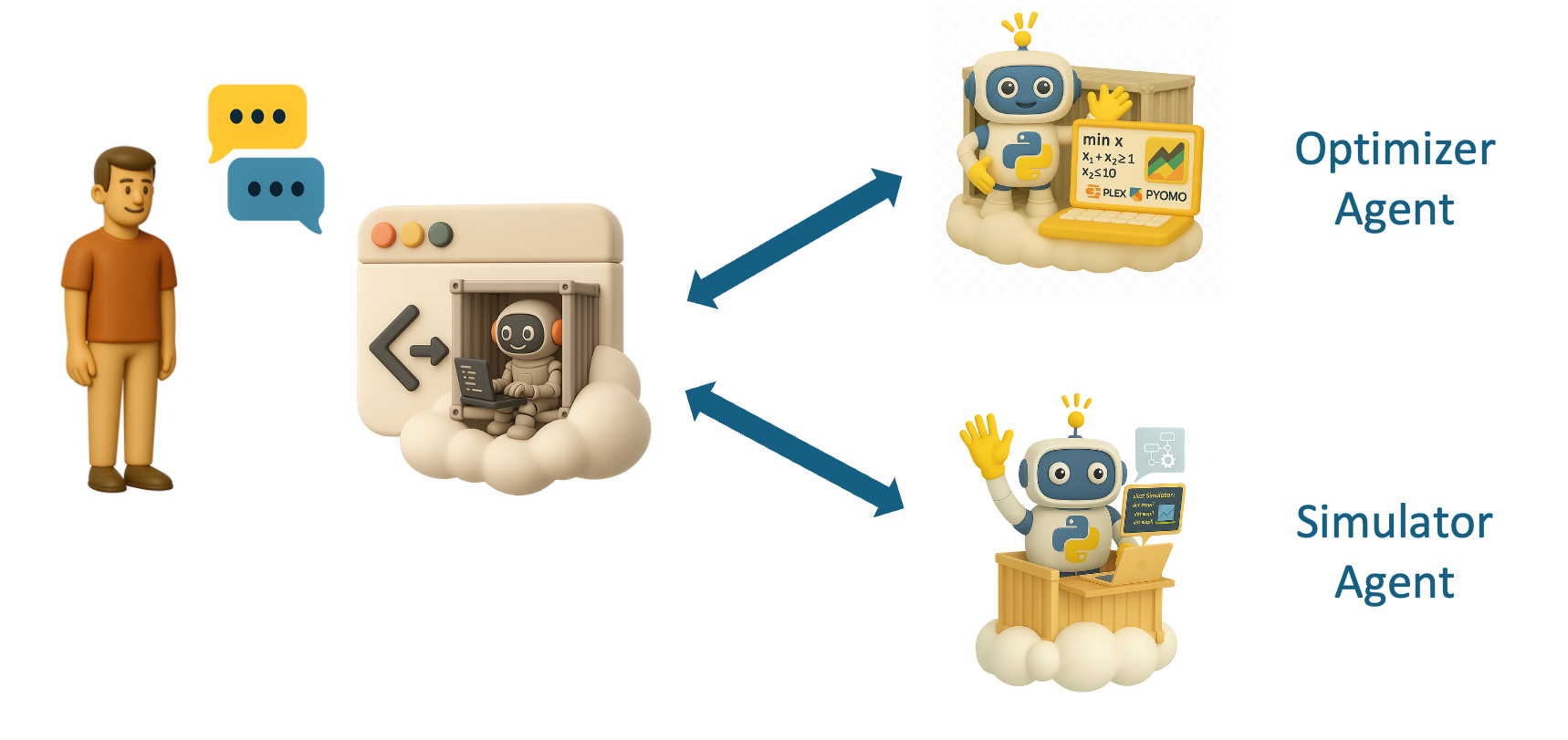

Figure 2: Alchemist embodies a new paradigm by building with ACAs. Both the Simulator and Optimizer agents are implemented as ACA-driven components that interact remotely and collaborate within a governed, ACA-orchestrated workflow, enabling adaptive, self-improving decision systems.

Traditional agentic systems, built before the rise of autonomous coding agents, were largely static in nature. They relied on pre-defined, domain-specific agent behaviors, with workflows encoded directly into the system’s code. Each agent was built for a narrow task, with limited ability to adapt, generate new logic, or evolve its own toolset.

When confronted with unseen requirements or new problem structures, these agents often hit dead ends, requiring developers to manually extend their logic, change the workflow structure, or rebuild the orchestration layer. As a result, iteration cycles were slow and constrained by engineering intervention, and verification across components like simulators and optimizers required explicit, often brittle, integration work.

Recently we introduced Alchemist, a modular, agent-driven system for decision optimization that uses specialized autonomous coding agents (ACAs) to define, simulate, and optimize complex decision processes directly from natural-language instructions. By dynamically generating both the optimizer and simulator, and enabling these components to interact, verify, and refine each other, Alchemist replaces rigid, prebuilt agent logic with a self-adaptive, continuously improving workflow.

Alchemist’s ACA-based architecture represents a decisive break from the traditional agentic systems’ rigidity. Instead of relying on pre-scripted agents, it leverages ACAs that can generate, modify, and verify their own codebases within governed sandboxes. When tasked with building an optimizer or simulator, these ACAs dynamically create the necessary software packages, complete with tests, interfaces, and validation routines, entirely through natural-language instructions.

This dynamic creation capability enables a continuous feedback loop:

- The Simulator Agent and Optimizer Agent don’t just execute predefined templates — they generate, test, and interconnect their artifacts.

- Each component validates the other: the Simulator Agent is used to test and verify optimization outputs, while the Optimizer Agent relies on the Simulator for performance evaluation and realism checks.

- Iteration is asynchronous and conversational; users can request ad hoc modifications (“add nurse fatigue as a constraint” or “allow overstaffing tolerance of 5%”) without breaking the workflow or redeploying the system.
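
The feedback loop described in the bullets above can be illustrated with a toy staffing problem. This is a sketch under stated assumptions, not Alchemist's implementation: `Spec`, `optimize`, `simulate`, `refine`, and the `SCENARIOS` multipliers are hypothetical, the optimizer and simulator are hand-written stand-ins for ACA-generated packages, and "overstaffing tolerance" is interpreted here as a cap on the planning buffer above expected demand.

```python
import math
from dataclasses import dataclass
from typing import Optional

SCENARIOS = (0.8, 0.9, 1.0, 1.1, 1.2)  # hypothetical demand multipliers


@dataclass
class Spec:
    """Decision problem as it might be captured from natural-language intent."""
    demand: list[int]                      # expected patients per shift
    patients_per_nurse: int = 4
    target_coverage: float = 0.95          # share of scenarios fully staffed
    max_overstaff: Optional[float] = None  # e.g. 0.05 for a 5% tolerance


def optimize(spec: Spec, buffer: float) -> list[int]:
    """Optimizer stand-in: smallest plan covering demand inflated by `buffer`."""
    return [math.ceil(d * (1 + buffer) / spec.patients_per_nurse) for d in spec.demand]


def simulate(spec: Spec, plan: list[int]) -> float:
    """Simulator stand-in: share of (shift, scenario) pairs the plan covers."""
    checks = [
        nurses * spec.patients_per_nurse >= expected * multiplier
        for nurses, expected in zip(plan, spec.demand)
        for multiplier in SCENARIOS
    ]
    return sum(checks) / len(checks)


def refine(spec: Spec, step: float = 0.05, max_rounds: int = 10):
    """Alternate optimizer and simulator until the target is met or the cap binds."""
    best = None
    for k in range(max_rounds):
        buffer = k * step
        if spec.max_overstaff is not None and buffer > spec.max_overstaff + 1e-9:
            break  # the user-imposed tolerance forbids a larger buffer
        plan = optimize(spec, buffer)
        coverage = simulate(spec, plan)  # the simulator verifies the optimizer
        best = (plan, coverage)
        if coverage >= spec.target_coverage:
            break
    plan, coverage = best
    return plan, coverage, coverage >= spec.target_coverage


spec = Spec(demand=[12, 18, 9])
print(refine(spec))        # plan [4, 6, 3], coverage 1.0, target met

spec.max_overstaff = 0.05  # ad hoc change to the spec; nothing is rebuilt
print(refine(spec))        # plan [4, 5, 3], coverage 14/15, target not met
```

In the second call the new constraint conflicts with the coverage target, and the loop surfaces that conflict instead of silently violating one of the two requirements.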

In this paradigm, development becomes self-healing and evolutionary rather than brittle and linear. The ACA infrastructure ensures that every generated artifact, be it code, solver logic, or a test suite, is versioned, reproducible, and verifiable within its sandbox. This eliminates the “code freeze” points typical of older agent frameworks and allows for rapid, traceable evolution of the decision-optimization logic directly from user intent.

Where old agentic paradigms required human coordination between static agents and engineering teams to rewire models, Alchemist’s ACA-based paradigm turns that coordination into a programmatic conversation — a fluid cycle of generation, validation, and refinement.

The result is a system that can evolve its reasoning, tooling, and interfaces as naturally as the domain it serves, transforming agentic workflows from rigid task automators into adaptive, intelligent collaborators. Read our recent blog on Alchemist for a full deep dive on this topic.

Governance and Safety in Autonomous AI Systems

This shift to ACA-based autonomous coding and self-evolving agent workflows introduces challenges and quirks familiar from the early days of LLMs. Allowing open-ended coding through autonomous agents, especially for non-technical users, can lead to errors, frustration (never-ending iteration loops!), or risk. As with LLM prompt engineering, we mitigate this through structured prompting and role definition, guiding agents toward focused, goal-aligned behavior powered by sandboxed development and execution. To further improve the focus and success rate of ACAs, we have developed external memory and self-reflection mechanisms based on their planning processes and execution trajectories when orchestrating code development, execution, and tool usage.

Persistent challenges such as non-determinism, hallucination, and sycophancy remain and are often amplified in the coding domain, particularly during autonomous testing and validation. To mitigate these behaviors and ensure safety, reliability, and reproducibility, we have implemented guardrails and governance mechanisms, including:

- Automated test generation and mining from user-agent interactions

- Continuous validation for backward compatibility and functional integrity

- Enforcement of CI/CD best practices, both within the sandbox and in the processes that surround it

- MBR-style measures to improve performance/reliability and mitigate variability and non-determinism of ACAs
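
The last item is not spelled out in this article. Assuming "MBR" refers to minimum Bayes risk selection, one way such a measure could work is to sample several candidate implementations, run each on shared probe inputs, and keep the candidate whose behavior agrees most with the rest. The sketch below shows that idea only; `mbr_select`, `run_safely`, and the toy candidates are hypothetical and stand in for ACA-generated code.

```python
import statistics
from typing import Any, Callable, Sequence

Candidate = Callable[[Any], Any]


def run_safely(fn: Candidate, x: Any) -> Any:
    """Run a candidate; a crash yields a unique sentinel that matches nothing."""
    try:
        return fn(x)
    except Exception:
        return object()


def agreement(a: Sequence[Any], b: Sequence[Any]) -> float:
    """Utility between two candidates: share of probes with equal outputs."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def mbr_select(candidates: Sequence[Candidate], probes: Sequence[Any]) -> int:
    """Return the index of the candidate with the highest mean agreement."""
    outputs = [[run_safely(fn, x) for x in probes] for fn in candidates]

    def expected_utility(i: int) -> float:
        others = [agreement(outputs[i], outputs[j])
                  for j in range(len(outputs)) if j != i]
        return sum(others) / len(others)

    return max(range(len(candidates)), key=expected_utility)


# Toy candidates for "compute the median" (stand-ins for sampled generations).
def upper_middle(xs):
    return sorted(xs)[len(xs) // 2]  # wrong for even-length inputs


def manual_median(xs):
    s, n = sorted(xs), len(xs)
    mid = n // 2
    return s[mid] if n % 2 else (s[mid - 1] + s[mid]) / 2


def always_crashes(xs):
    return xs[len(xs)]  # IndexError on every input


candidates = [upper_middle, statistics.median, manual_median, always_crashes]
probes = [[1, 3, 2], [4, 1, 3, 2], [5], [2, 2, 8, 9]]
chosen = mbr_select(candidates, probes)
print(candidates[chosen].__name__)  # median
```

Consensus is not proof of correctness: if most samples share the same bug, this selection will keep it, which is why it complements tests rather than replacing them.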

Together, these mechanisms allow non-technical users to safely generate, test, and evolve executable logic from natural-language intent, without sacrificing reliability, reproducibility, or control.
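
As an illustration of the first two guardrails (mining tests from interactions and validating backward compatibility), the following sketch replays results a user previously accepted against a regenerated version of the same logic. The log format, `MinedCase`, `mine_cases`, `regressions`, and the `shift_cost_*` functions are all hypothetical and are not taken from the C3 Agentic AI Platform.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class MinedCase:
    """An input/output pair the user accepted during an earlier interaction."""
    args: tuple
    expected: Any


def mine_cases(interactions: list[dict]) -> list[MinedCase]:
    """Keep only the interactions the user explicitly accepted."""
    return [
        MinedCase(tuple(item["args"]), item["result"])
        for item in interactions
        if item.get("accepted")
    ]


def regressions(new_version: Callable[..., Any], cases: list[MinedCase]) -> list[MinedCase]:
    """Return the mined cases that the new version no longer satisfies."""
    failed = []
    for case in cases:
        try:
            ok = new_version(*case.args) == case.expected
        except Exception:
            ok = False  # a crash on a previously working input is a regression
        if not ok:
            failed.append(case)
    return failed


def shift_cost_v1(nurses, hourly_rate):
    return nurses * hourly_rate * 8


def shift_cost_v2(nurses, hourly_rate):
    total = nurses * hourly_rate * 8
    _per_nurse = total / nurses  # newly generated logic; fails when nurses == 0
    return total


log = [
    {"args": [4, 30], "result": 960, "accepted": True},
    {"args": [0, 30], "result": 0, "accepted": True},
    {"args": [2, 30], "result": 500, "accepted": False},  # user rejected this one
]
cases = mine_cases(log)
failed = regressions(shift_cost_v2, cases)
print([case.args for case in failed])  # [(0, 30)]
```

A gate built on this check would refuse to promote `shift_cost_v2` until the failing case passes or the user explicitly retires it.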

From Tools to Thinking Software: The Future of Enterprise AI

The real distinction with autonomous coding agents lies not just in how they create software, but in how they become part of it. Early ACAs were external assistants, tools that generated or modified code. Now, they can be embedded directly within applications and their execution environments, turning the software itself into an adaptive, reasoning system.

This marks a fundamental shift: from using ACAs to build software to building software with ACAs inside it. These embedded agents continuously interpret user intent, adjust workflows, and evolve functionality as conditions change. They enable applications to reason about their own behavior and to diagnose, adapt, and extend themselves dynamically.

At C3 AI, we view this as the next phase of human-software interaction: applications that do not just run code but contain intelligence. This is software that collaborates, learns, and evolves, blurring the boundary between creation and operation.

Read more about how C3 AI is powering the future of agentic software and explore how autonomous coding agents enable dynamic, reasoning systems across industries.

About the Authors

Henrik Ohlsson is the Vice President and Chief Data Scientist at C3 AI. Before joining C3 AI, he held academic positions at the University of California, Berkeley, the University of Cambridge, and Linköping University. With over 70 published papers and 30 issued patents, he is a recognized leader in the field of artificial intelligence, with a broad interest in AI and its industrial applications. He is also a member of the World Economic Forum, where he contributes to discussions on the global impact of AI and emerging technologies.

Sina Khoshfetrat Pakazad is the Vice President of Data Science at C3 AI, where he leads research and development in Generative AI, machine learning, and optimization. He holds a Ph.D. in Automatic Control from Linköping University and an M.Sc. in Systems, Control, and Mechatronics from Chalmers University of Technology. With experience at Ericsson, Waymo, and C3 AI, Dr. Pakazad has contributed to AI-driven solutions across healthcare, finance, automotive, robotics, aerospace, telecommunications, supply chain optimization, and process industries. His recent research has been published in leading venues such as ICLR and EMNLP, focusing on multimodal data generation, instruction-following and decoding from large language models, and distributed optimization. Beyond this, he has co-invented patents on enterprise AI architectures and predictive modeling for manufacturing processes, reflecting his impact on both theoretical advancements and real-world AI applications.

Aarushi Dhanuka is a Data Science Intern at C3 AI, where she focuses on agentic development and intelligent workflow automation, leveraging emerging AI technologies to improve data-driven application design. She works on applying data science and engineering techniques to develop AI-driven tools that support enterprise operations. Aarushi is currently pursuing her M.S. in Computational Science and Engineering at the Georgia Institute of Technology, where she specializes in applied machine learning and computational data systems.

Ansh Guglani is a Senior Product Manager at C3 AI. As the product lead for the agentic AI team, he has helped define the company’s strategy for agentic systems and drive adoption across industries. Before joining C3 AI, Ansh developed products at Applied Intuition, helping major automakers integrate autonomous-vehicle simulation systems. Earlier in his career, he led robotics and automation initiatives at Barry-Wehmiller Design Group, delivering large-scale systems for Tesla that transformed advanced manufacturing operations. He holds a B.S. in Electrical Engineering from Penn State University, with extensive coursework in computer science, engineering, and business.

John Abelt is the Lead Product Manager for C3 Generative AI. John has been with C3 AI for 7 years and previously led ML Products for the C3 Agentic AI Platform. John holds a Master’s in Computer Science from the University of Illinois at Urbana-Champaign and a Bachelor of Science in Systems Engineering from the University of Virginia.