C3 Generative AI: How Is It Unique?

Generative AI for the enterprise—an accelerant for digital transformation

By Nikhil Krishnan, CTO, Products

While ChatGPT has shattered the user-adoption records of every consumer technology before it, the bigger opportunity for applying generative AI lies within enterprises. Companies across industries, as well as government agencies, stand to reap enormous benefits: higher productivity, improved capabilities, and a stronger ability to compete in this rapidly evolving AI era.

The use cases are many, and leaders are eager. In a May 2023 survey, McKinsey found that 90% of business leaders expect to use generative AI solutions often over the next two years. Yet challenges remain. Deploying generative AI across an enterprise requires a system that ensures a level of security, accuracy, and transparency that consumer generative AI applications do not provide. With such guarantees, however, the opportunities could be as transformative as the internet itself.

C3 AI’s Generative AI Journey

C3 AI began working with generative AI long before ChatGPT and the term large language model (LLM) entered the common lexicon. In 2020, the U.S. Department of Defense’s (DoD’s) Missile Defense Agency (MDA) asked C3 AI to model the trajectories of incoming hypersonic missiles so the agency could anticipate them faster. With traditional supercomputer methods, running a few dozen simulations could take 12 hours; the MDA’s goal was to model thousands of trajectories in a matter of minutes.

The MDA’s unique needs called for a new approach based on deep learning, a machine learning method that uses multilayer neural networks to learn to make predictions and decisions from data rather than from explicit programming. Running such powerful simulations quickly and at scale also required transformer models. First described in a 2017 Google paper, transformers are neural networks that learn context by tracking the relationships among elements in their input data; they are the foundation of generative AI.
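Transformers learn context through a mechanism called attention: each position in the input compares itself with every other position and pulls in information in proportion to how relevant the others are. As a generic illustration only (not C3 AI’s model or the MDA workload), here is a minimal pure-Python sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)V, as defined in the 2017 transformer paper. Real transformers add learned projection matrices, multiple attention heads, and tensor libraries running on GPUs; all of that is omitted here.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max score before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(
    queries: list[list[float]],
    keys: list[list[float]],
    values: list[list[float]],
) -> tuple[list[list[float]], list[list[float]]]:
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V.

    Returns the output vectors and the attention weights, so you can see
    how much each position "attends" to every other position.
    """
    d_k = len(keys[0])
    outputs, weights_per_query = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Each output dimension is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        weights_per_query.append(weights)
    return outputs, weights_per_query


# Self-attention over three toy 2-d "token" embeddings (Q = K = V = x).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = attention(x, x, x)
for row in w:
    print([round(p, 3) for p in row])
```

Each row of printed weights sums to 1; the first token, for example, attends equally to itself and to the third token (both share its first feature) and less to the second.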

C3 AI’s then-novel use of transformer models for the MDA was an early application of generative AI technologies to enterprise-scale (and enterprise-secure) machine learning (ML) use cases. C3 AI met the MDA’s goal, substantially reducing the time needed to simulate hypersonic missile trajectories. The MDA was also able to share these surrogate models, fast ML approximations of the slower supercomputer simulations, with defense contractors such as Raytheon Technologies and Lockheed Martin so they could better model missile trajectories and build effective defense systems.
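A surrogate model is a fast, learned stand-in for a slow simulator: run the expensive simulation a limited number of times, fit a cheap model to those input/output pairs, then query the cheap model as often as needed. The sketch below illustrates only that general pattern. The drag-free projectile simulator and the one-parameter least-squares fit (range ≈ a·speed²) are hypothetical toy stand-ins; the actual MDA work involved hypersonic flight physics and deep learning models, neither of which is reproduced here.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def simulate_range(speed: float, angle_deg: float = 45.0, dt: float = 1e-3) -> float:
    """'Expensive' toy simulator: step a drag-free projectile forward with
    small (semi-implicit) Euler steps until it lands, then return the range."""
    theta = math.radians(angle_deg)
    x, y = 0.0, 0.0
    vx, vy = speed * math.cos(theta), speed * math.sin(theta)
    while True:
        x += vx * dt
        vy -= G * dt
        y += vy * dt
        if y <= 0.0:
            return x


def fit_surrogate(speeds: list[float], ranges: list[float]) -> float:
    """Least-squares fit of range ≈ a * speed**2; returns the coefficient a."""
    num = sum(r * s**2 for s, r in zip(speeds, ranges))
    den = sum(s**4 for s in speeds)
    return num / den


# Run the slow simulator a handful of times to build training data.
train_speeds = [50.0, 100.0, 150.0, 200.0]
train_ranges = [simulate_range(s) for s in train_speeds]
a = fit_surrogate(train_speeds, train_ranges)


def surrogate_range(speed: float) -> float:
    """Cheap surrogate: one multiplication instead of thousands of time steps."""
    return a * speed**2


print(f"simulated: {simulate_range(120.0):.1f} m, surrogate: {surrogate_range(120.0):.1f} m")
```

The design trade-off is the one the MDA faced at much larger scale: the simulator is paid for once, up front, to generate training data, after which each new prediction is nearly free.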

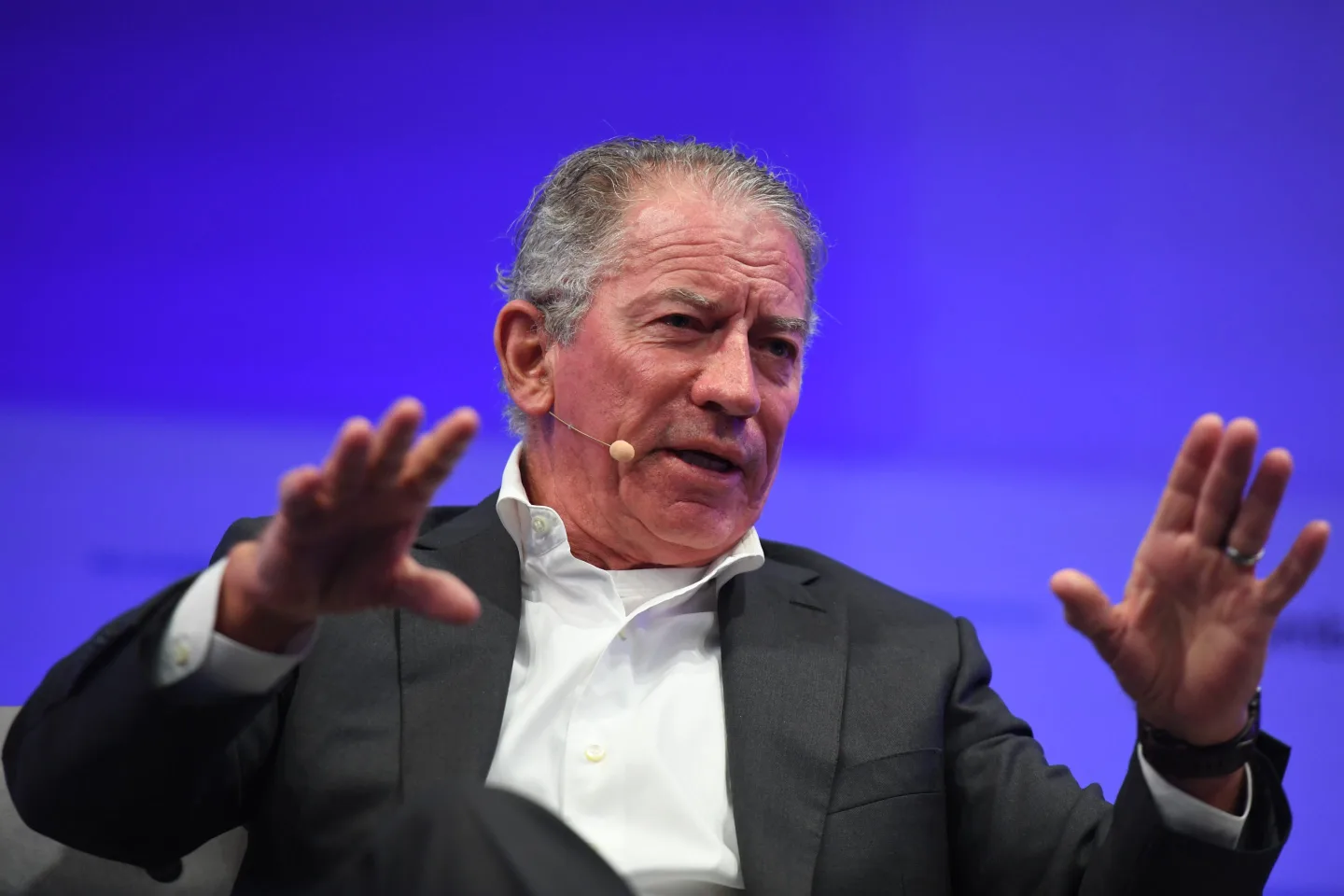

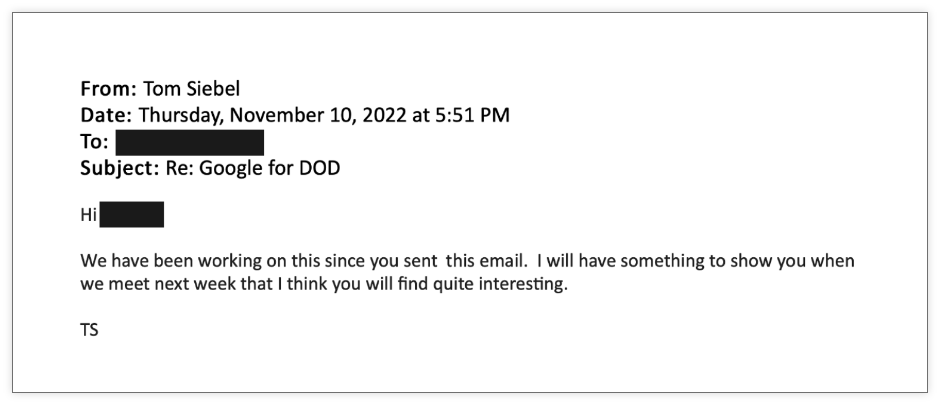

C3 AI’s more significant breakthrough came later, and it, too, began with a request from the U.S. military. In September 2022, C3 AI CEO Tom Siebel received an email from an official at the DoD.

“I want to be the Google for DoD,” the official wrote. “A user asks a question and gets an answer. How do I make that happen?”

“Good idea,” Siebel replied. “Give me a couple of weeks to ideate this and propose a plan.”

Siebel and his team sketched out a new way for users to interact with the disparate data sources and machine learning models unified by the C3 AI Platform, using the familiar look and feel of a common search interface.

A New Way to Use Large Language Models

The LLMs that underlie generative AI technologies such as ChatGPT and Google Bard presented C3 AI with a valuable starting point for a broader set of enterprise solutions. C3 Generative AI is designed to be LLM agnostic, which matters because LLMs keep evolving and new models are being introduced at a rapid pace.

Currently, C3 Generative AI can use LLMs offered by the major cloud providers, such as those available through Azure OpenAI Service and Amazon Bedrock, as well as Google’s PaLM 2. C3 Generative AI also uses LLMs that C3 AI has fine-tuned itself, including versions of FLAN-T5 and Falcon 40B. Because these fine-tuned models can be deployed without relying on a cloud provider, C3 AI can also support the highly secure, internet air-gapped environments that some customers require.
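One common way to make an application LLM agnostic is to hide each model behind a small adapter interface and send every request through a single router. The sketch below is a hypothetical illustration of that design, not C3 AI’s implementation: `LLMBackend`, `FakeBackend`, and `ModelRouter` are invented names, the adapters return canned strings instead of calling a hosted or self-hosted model, and the air-gap rule simply filters out any adapter flagged as needing internet access.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


class LLMBackend(Protocol):
    """Hypothetical minimal interface that every model adapter implements."""

    name: str
    requires_internet: bool

    def complete(self, prompt: str) -> str: ...


@dataclass
class FakeBackend:
    """Placeholder adapter. A real adapter would wrap a provider SDK or a
    self-hosted fine-tuned model; this one just echoes the prompt."""

    name: str
    requires_internet: bool

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class ModelRouter:
    """Callers depend only on the router, so adding or swapping a model means
    registering a new adapter rather than changing application code."""

    def __init__(self, air_gapped: bool = False) -> None:
        self.air_gapped = air_gapped
        self._backends: dict[str, LLMBackend] = {}

    def register(self, backend: LLMBackend) -> None:
        self._backends[backend.name] = backend

    def available(self) -> list[str]:
        # In an air-gapped deployment, hide every backend that needs the internet.
        return [
            name
            for name, backend in self._backends.items()
            if not (self.air_gapped and backend.requires_internet)
        ]

    def complete(self, prompt: str, preferred: Optional[str] = None) -> str:
        names = self.available()
        if not names:
            raise RuntimeError("no usable LLM backend is registered")
        # Honor the caller's preference if it is allowed; otherwise use the first usable one.
        name = preferred if preferred in names else names[0]
        return self._backends[name].complete(prompt)


router = ModelRouter(air_gapped=True)
router.register(FakeBackend("cloud-llm", requires_internet=True))
router.register(FakeBackend("local-finetuned", requires_internet=False))
print(router.complete("Summarize open work orders", preferred="cloud-llm"))
```

In the air-gapped example, the request for `cloud-llm` silently falls back to the locally hosted adapter; a production system might instead reject the request or log the substitution.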

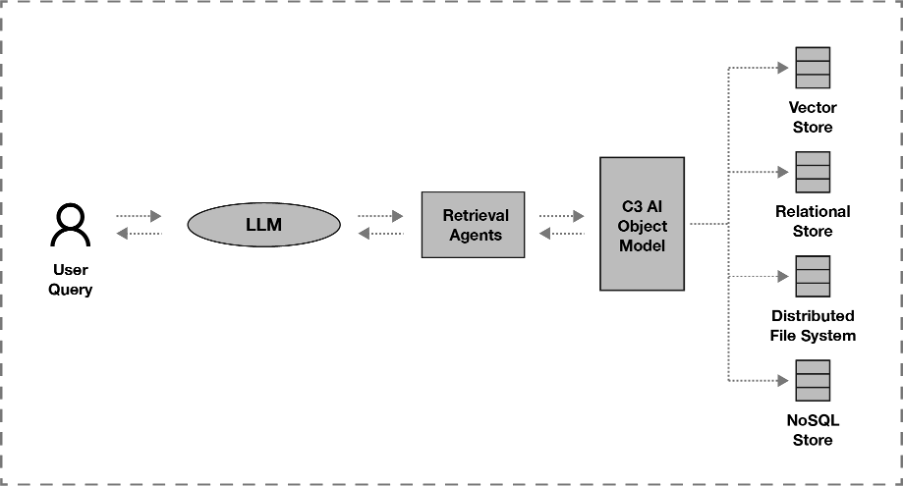

C3 AI doesn’t rely on an LLM the way consumer applications do. A consumer application such as ChatGPT answers largely from what its LLM learned during training on sources such as publicly available internet data. With C3 Generative AI, the LLM serves as a kind of AI librarian: it interprets the user’s question and then hands the query to a retrieval agent, which works with the C3 AI Type System to determine where (and how) to find the best answer within an enterprise’s data corpus.
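The librarian pattern separates two jobs: understanding the question, and knowing where the relevant data lives. The sketch below is a hypothetical, heavily simplified illustration of that split. Keyword matching stands in for the LLM, and the invented `TYPE_REGISTRY` dictionary stands in for an object model such as the C3 AI Type System, whose real interfaces are not shown or implied here. Every function and data value is made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Interpretation:
    entity: str  # which kind of business object the question is about
    metric: str  # which attribute of that object the user wants


def interpret(question: str) -> Interpretation:
    """Stand-in for the LLM "librarian". A real system would prompt an LLM to
    parse the question; simple keyword matching plays that role here."""
    q = question.lower()
    entity = "pump" if "pump" in q else "order"
    metric = "temperature" if "temperature" in q else "status"
    return Interpretation(entity, metric)


def fetch_pump_readings(metric: str) -> dict[str, object]:
    # Pretend this queries a time-series store of sensor readings.
    readings = {"temperature": {"P-101": 71.5, "P-102": 68.0}}
    return readings.get(metric, {})


def fetch_orders(metric: str) -> dict[str, object]:
    # Pretend this queries a transactional order database.
    orders = {"status": {"SO-9": "shipped"}}
    return orders.get(metric, {})


# Hypothetical registry standing in for an object model: for each entity type
# it records where that type's data lives and how to fetch it.
TYPE_REGISTRY: dict[str, Callable[[str], dict[str, object]]] = {
    "pump": fetch_pump_readings,
    "order": fetch_orders,
}


def retrieve(interp: Interpretation) -> dict[str, object]:
    """Retrieval agent: look up the entity type, then call its fetcher."""
    fetch = TYPE_REGISTRY.get(interp.entity)
    return fetch(interp.metric) if fetch else {}


def answer(question: str) -> str:
    interp = interpret(question)
    rows = retrieve(interp)
    if not rows:
        return "No matching data found."
    # A real system might pass these rows back to the LLM to phrase the reply.
    facts = ", ".join(f"{key}: {value}" for key, value in rows.items())
    return f"{interp.metric} by {interp.entity}: {facts}"


print(answer("What is the temperature of each pump?"))
```

Because the answer is assembled from retrieved records rather than from the model’s memory, each fact in the reply can be traced back to a specific source.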

C3 AI’s unique implementation of generative AI builds on the company’s proven enterprise AI technology, which took more than 14 years and roughly $1.5 billion to develop. Its foundation is a proprietary, model-driven architecture that simplifies and accelerates the development and delivery of enterprise AI applications at scale, giving business decision makers predictive analytics that deliver substantial economic benefit.

To make generative AI truly transformative for the enterprise, C3 AI combined LLMs with its deep experience integrating, unifying, and modeling enterprise data for machine learning, including predictive analytics, supervised learning, unsupervised learning, and deep learning. Enterprise AI, a category C3 AI established, is broadly recognized today as a large and growing market. (IDC projects the AI software market will nearly double between 2022 and 2026, reaching $791 billion.)

C3 AI’s novel combination of LLMs, retrieval agents, and the C3 AI Type System (its object models) makes C3 Generative AI the only fit-for-business generative AI technology available today. It is also constantly evolving. C3 Generative AI includes a feedback system that lets users flag a response that needs refining in light of updated information, such as data from a newly installed sensor. In addition, unlike ChatGPT, C3 Generative AI does not work from a set of data captured at a single moment in time. The data the system interprets are dynamic: as the data change, so do the answers. Such fluidity is critical for large enterprises, where the underlying data change frequently.
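The difference between a frozen snapshot and live data comes down to when the answer is computed. The hypothetical sketch below (the `LiveAssistant` class and its methods are invented for illustration and do not reflect C3 AI’s feedback system) computes every answer from the current data at question time, so a reading from a newly installed sensor shows up in the very next answer. It also records user feedback on a response for later review.

```python
from dataclasses import dataclass, field


@dataclass
class LiveAssistant:
    """Hypothetical sketch: answers are computed from the current data at
    question time, so there is no frozen snapshot to go stale."""

    sensor_data: dict[str, float] = field(default_factory=dict)
    feedback: list[tuple[str, str, str]] = field(default_factory=list)

    def ingest(self, sensor_id: str, value: float) -> None:
        # New or updated readings become visible to the very next question.
        self.sensor_data[sensor_id] = value

    def ask_max_reading(self) -> str:
        if not self.sensor_data:
            return "No sensor data yet."
        sensor_id = max(self.sensor_data, key=self.sensor_data.__getitem__)
        return f"Highest reading: {sensor_id} = {self.sensor_data[sensor_id]}"

    def flag(self, question: str, response: str, note: str) -> None:
        # Store user feedback so responses can be reviewed and refined later.
        self.feedback.append((question, response, note))


bot = LiveAssistant()
bot.ingest("S1", 40.0)
before = bot.ask_max_reading()
bot.ingest("S2", 55.5)  # e.g. a newly installed sensor
after = bot.ask_max_reading()
print(before, "->", after)
```

A model that answered only from training-time knowledge would keep returning the first answer; recomputing at question time is what lets the response change as the data does.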

While the impetus for C3 AI to create a generative AI solution was a request for something like internet search, C3 Generative AI for the enterprise is far different. The search-like interface was chosen for a simple reason: it’s familiar and intuitive. But what users get from C3 Generative AI is more than enterprise search.

C3 Generative AI serves as a knowledge assistant with domain expertise that can orchestrate APIs and tools and help users take action.

And it all happens rapidly: a user’s prompt, or query, flows down to the machine learning models already applied to the enterprise’s datasets.
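Orchestrating APIs and tools typically means giving the model a catalog of callable functions and then executing the calls it selects. The sketch below is hypothetical throughout: the `@tool` registry, `get_inventory`, and `create_purchase_order` stand in for real enterprise APIs, and the hand-written plan stands in for the sequence of calls an LLM would generate from a user’s request. Nothing here reflects C3 AI’s actual interfaces.

```python
from typing import Any, Callable

# Catalog of functions the assistant is allowed to call, keyed by name.
TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the assistant can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_inventory(part: str) -> int:
    # Hypothetical stand-in for an inventory API.
    return {"valve": 3, "gasket": 120}.get(part, 0)


@tool
def create_purchase_order(part: str, quantity: int) -> str:
    # Hypothetical stand-in for an ERP "create order" action.
    return f"PO created: {quantity} x {part}"


def run_plan(plan: list[dict[str, Any]]) -> list[Any]:
    """Execute tool calls in order. In a real assistant an LLM would emit a
    plan like this from the user's request; here it is written by hand."""
    results = []
    for step in plan:
        fn = TOOLS.get(step["tool"])
        if fn is None:
            # Refuse anything outside the registered catalog.
            raise ValueError(f"unknown tool: {step['tool']}")
        results.append(fn(**step.get("args", {})))
    return results


plan = [
    {"tool": "get_inventory", "args": {"part": "valve"}},
    {"tool": "create_purchase_order", "args": {"part": "valve", "quantity": 10}},
]
print(run_plan(plan))
```

Restricting execution to a registered catalog is a deliberate design choice: the model can propose actions, but only functions the enterprise has explicitly exposed can actually run.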

Giving enterprise users an intuitive way to ask questions of all of an organization’s data extends the value of enterprise AI technology to far more people across that organization.