Can the Generative AI Hallucination Problem Be Overcome?

By Louis Poirier, Vice President, AI/ML CTO

August 31, 2023

The large language models (LLMs) that power consumer generative AI tools seem credible at first glance, churning out authoritative-sounding answers in a matter of seconds. But these LLMs frequently generate inaccurate responses, a failure known as hallucination that has drawn considerable attention as people realize how widespread it is.

Hallucination can be solved, and C3 Generative AI does just that. But first, let's look at why it happens.

Like the iPhone keyboard's predictive text tool, LLMs form coherent statements by stitching together units (such as words, characters, and numbers), choosing each unit based on the probability that it follows the units generated so far.

For example, in English, if a generated sentence begins with a subject, a verb is much more likely to follow than an object. Consequently, an LLM ranks candidate verbs above objects and selects a verb as the next unit. This predictive function shapes not only a statement's grammatical structure but also its situational context.
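To make the ranking concrete, here is a toy sketch in Python. It assumes a small, hand-written table of conditional probabilities (the vocabulary and numbers are hypothetical) and always picks the single most probable next unit, a strategy known as greedy decoding. Production LLMs learn these probabilities with a neural network and often sample rather than always taking the top choice.

```python
# Toy next-unit prediction. The table below is a hypothetical stand-in for
# the probabilities a real LLM learns; words and numbers are illustrative.

# P(next unit | previous unit) for a tiny, made-up vocabulary.
NEXT_UNIT_PROBS: dict[str, dict[str, float]] = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"analyst": 0.6, "report": 0.4},
    "a": {"analyst": 0.7, "report": 0.3},
    # After a subject, verbs are ranked well above an object.
    "analyst": {"writes": 0.55, "reads": 0.35, "report": 0.10},
    "report": {"shows": 0.8, "<end>": 0.2},
    "writes": {"<end>": 1.0},
    "reads": {"<end>": 1.0},
    "shows": {"<end>": 1.0},
}


def rank_next_units(previous: str) -> list[tuple[str, float]]:
    """Return candidate next units, most probable first."""
    candidates = NEXT_UNIT_PROBS.get(previous, {"<end>": 1.0})
    return sorted(candidates.items(), key=lambda kv: kv[1], reverse=True)


def generate(max_units: int = 10) -> list[str]:
    """Greedily append the highest-probability unit until <end> is chosen."""
    output: list[str] = []
    previous = "<start>"
    for _ in range(max_units):
        best_unit, _prob = rank_next_units(previous)[0]
        if best_unit == "<end>":
            break
        output.append(best_unit)
        previous = best_unit
    return output


if __name__ == "__main__":
    print(rank_next_units("analyst"))
    print(" ".join(generate()))
```

Calling `rank_next_units("analyst")` places the verbs `writes` and `reads` ahead of the object `report`, mirroring the subject-verb example: the model never checks whether the sentence is true, only which unit is most probable.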

This means that LLMs don't understand the context of a statement; they predict which output is most likely. Unless guided by safeguards, LLMs prioritize generating a “best guess” over not responding at all, even when that means presenting falsehoods as truth. The problem is compounded because LLMs are trained on vast swaths of internet text, absorbing unverified information along with everything else.

Intrinsic and Extrinsic Hallucinations

Hallucinations are commonly classified as intrinsic or extrinsic. Intrinsic hallucinations occur when an LLM's output contradicts its source content: the LLM has access to the correct information but makes an incorrect prediction. This issue is common in consumer LLMs, which are trained on large amounts of contradictory data. Enterprise LLMs, however, can be fine-tuned and guided through prompt engineering to mitigate it.

More challenging are extrinsic hallucinations, which occur when the LLM's output is not supported by the source content at all, as if the answer were pulled out of thin air. This happens when someone asks a question the LLM cannot answer correctly because it lacks the necessary information. Instead of stating that it doesn't know the answer, it makes one up.
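The distinction between the two kinds of hallucination can be sketched as a check of a single claim against source content. This is a simplification under stated assumptions: facts are modeled as exact key-value pairs in a hypothetical `SOURCE` dictionary, and `classify_claim` is an illustrative helper, not a production detector, which would need to compare free text rather than exact strings.

```python
from enum import Enum


class Verdict(Enum):
    SUPPORTED = "supported"
    INTRINSIC = "intrinsic hallucination"  # contradicts the source
    EXTRINSIC = "extrinsic hallucination"  # not backed by the source at all


def classify_claim(source: dict[str, str], key: str, claimed_value: str) -> Verdict:
    """Compare one (key, value) claim from a model's output with source facts."""
    if key not in source:
        # The source says nothing about this key: the claim came from nowhere.
        return Verdict.EXTRINSIC
    if source[key] != claimed_value:
        # The correct fact was available, but the output disagrees with it.
        return Verdict.INTRINSIC
    return Verdict.SUPPORTED


# Hypothetical source content; keys and values are illustrative only.
SOURCE = {
    "report_year": "2022",
    "plant_count": "14",
}

print(classify_claim(SOURCE, "report_year", "2022"))
print(classify_claim(SOURCE, "plant_count", "12"))
print(classify_claim(SOURCE, "ceo_name", "Jane Doe"))
```

The second claim is intrinsic because the right answer was in the source; the third is extrinsic because nothing in the source supports it, which is why it cannot be caught by consulting the source alone.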

For example, imagine someone wants to know how many stories tall the C3 AI office building is. They turn to a consumer generative AI tool for the answer, but the underlying LLM was never trained on that information. So the LLM falls back on similar information from its training: it might notice, for instance, that most of the office buildings in its training data are 12 stories tall. In fact, 12 stories is exactly what a consumer LLM inaccurately told us.
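The fallback behavior in this example can be caricatured in a few lines. The sketch assumes a hypothetical list of story counts standing in for training data, a hypothetical `KNOWN_BUILDINGS` table, and a hypothetical `answer_stories` helper. Real LLMs do not store facts in lookup tables, but the effect is similar: with no relevant fact, the most common pattern wins.

```python
from collections import Counter

# Hypothetical "training data": story counts of office buildings the model
# has seen. None of them is the building actually being asked about.
TRAINING_STORY_COUNTS = [12, 12, 8, 12, 20, 12, 5, 12, 30, 8]

# Hypothetical facts the model did learn about specific buildings.
KNOWN_BUILDINGS: dict[str, int] = {"Building A": 8}


def answer_stories(building: str) -> int:
    """Mimic an ungrounded model: it answers even when the fact is unknown."""
    if building in KNOWN_BUILDINGS:
        return KNOWN_BUILDINGS[building]
    # No relevant fact, so fall back on the most frequent value seen in training.
    most_common_value, _count = Counter(TRAINING_STORY_COUNTS).most_common(1)[0]
    return most_common_value


print(answer_stories("Building A"))
print(answer_stories("C3 AI office building"))
```

Nothing in the function distinguishes the grounded answer from the guess; both come back as a confident integer.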

While this example seems trivial, extrinsic hallucinations have proved especially problematic: LLMs have cited books, articles, research papers, and court cases that never existed, and have claimed that people made statements or committed acts with no corroboration.

Consumer applications of generative AI are prone to making things up because, in a sense, they are designed to be creative and to generate ideas. That's useful if you want help writing a song, but it's unacceptable for anyone who needs accurate, credible information, whether a student or an analyst at a business or government agency.

Solving for Hallucination

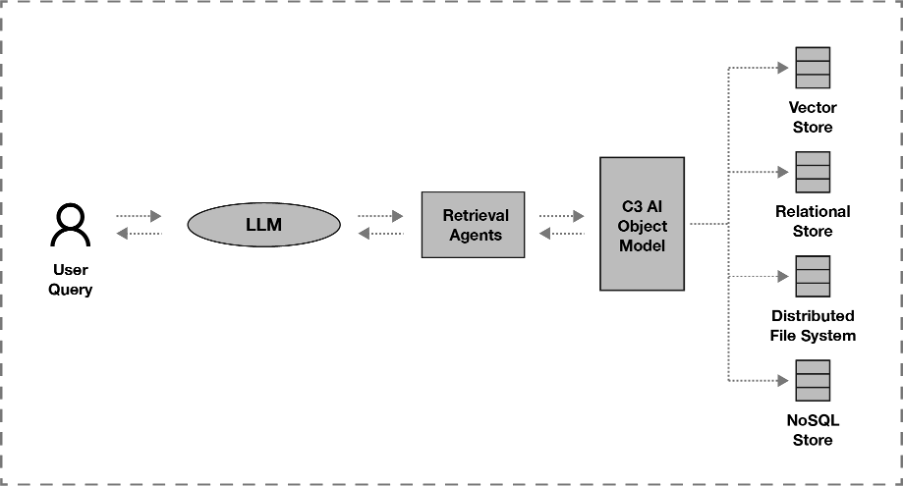

By design, C3 Generative AI eliminates hallucinations. When a user submits a query, an LLM interprets its semantic intent, then hands off to a separate system (a retrieval model connected to a source of truth, such as a vector store, database, or filesystem) that fetches the most relevant information from the enterprise's data. That information is passed back to the LLM, which synthesizes it into a response for the user. If the LLM encounters a question that cannot be answered from that information, a safeguard blocks it from responding, rather than letting it present an uninformed answer.
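The flow described above (retrieve from a source of truth, then synthesize, or else refuse) can be sketched as a minimal retrieval-augmented pipeline. This is not C3 Generative AI's implementation. The documents, the `MIN_SIMILARITY` threshold, and all function names are hypothetical; bag-of-words cosine similarity stands in for learned embeddings and a vector store; `synthesize` is a placeholder for the LLM call; and the safeguard is modeled, as an assumption, as a refusal whenever the best retrieval score falls below the threshold.

```python
import math
import re
from collections import Counter

# Hypothetical enterprise "source of truth"; a real deployment might use a
# vector store, database, or filesystem instead of an in-memory list.
DOCUMENTS = [
    "Building A has 8 stories.",
    "Quarterly maintenance is scheduled for the first Monday of each quarter.",
    "Badge access requests are approved by the facilities team.",
]

MIN_SIMILARITY = 0.3  # hypothetical cutoff used by the safeguard


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts, standing in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str) -> tuple[str, float]:
    """Return the most similar document and its similarity score."""
    q = bag_of_words(query)
    scored = [(doc, cosine(q, bag_of_words(doc))) for doc in DOCUMENTS]
    return max(scored, key=lambda pair: pair[1])


def synthesize(query: str, context: str) -> str:
    """Placeholder for the LLM call that turns retrieved context into an answer."""
    return f"Based on the source: {context}"


def answer(query: str) -> str:
    context, score = retrieve(query)
    if score < MIN_SIMILARITY:
        # Safeguard: refuse rather than let the model guess.
        return "I don't have enough information to answer that."
    return synthesize(query, context)


print(answer("How many stories does Building A have?"))
print(answer("How many floors does Building B have?"))
```

With these documents, the question about Building A retrieves a matching passage, while the question about Building B scores below the threshold and is refused instead of answered with a guess. Placing the refusal before the LLM call means the model never sees a question it has no grounded material for.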

We are early in the generative AI era, and enterprises everywhere are exploring how best to take advantage of this once-in-a-generation technological advancement. Hallucination is a top concern, but for enterprise generative AI, it's a solvable problem.
