A Series on Multi-Hop Orchestration AI Agents: Part 2

Continue the series: Part 1, Part 3, Part 4

By Ivan Robles, Senior Data Scientist, C3 AI

Previously, we uncovered the game-changing potential of multi-hop orchestration agents, showcasing how their step-by-step reasoning and tool integration redefine adaptability, precision, and scalability in Enterprise AI.

Now, let’s dive into how AI agents are classified, the strengths and limitations of different agent architectures, and how they continuously learn and improve to tackle complex tasks.

Classifying AI Agents: Key Dimensions and Capabilities

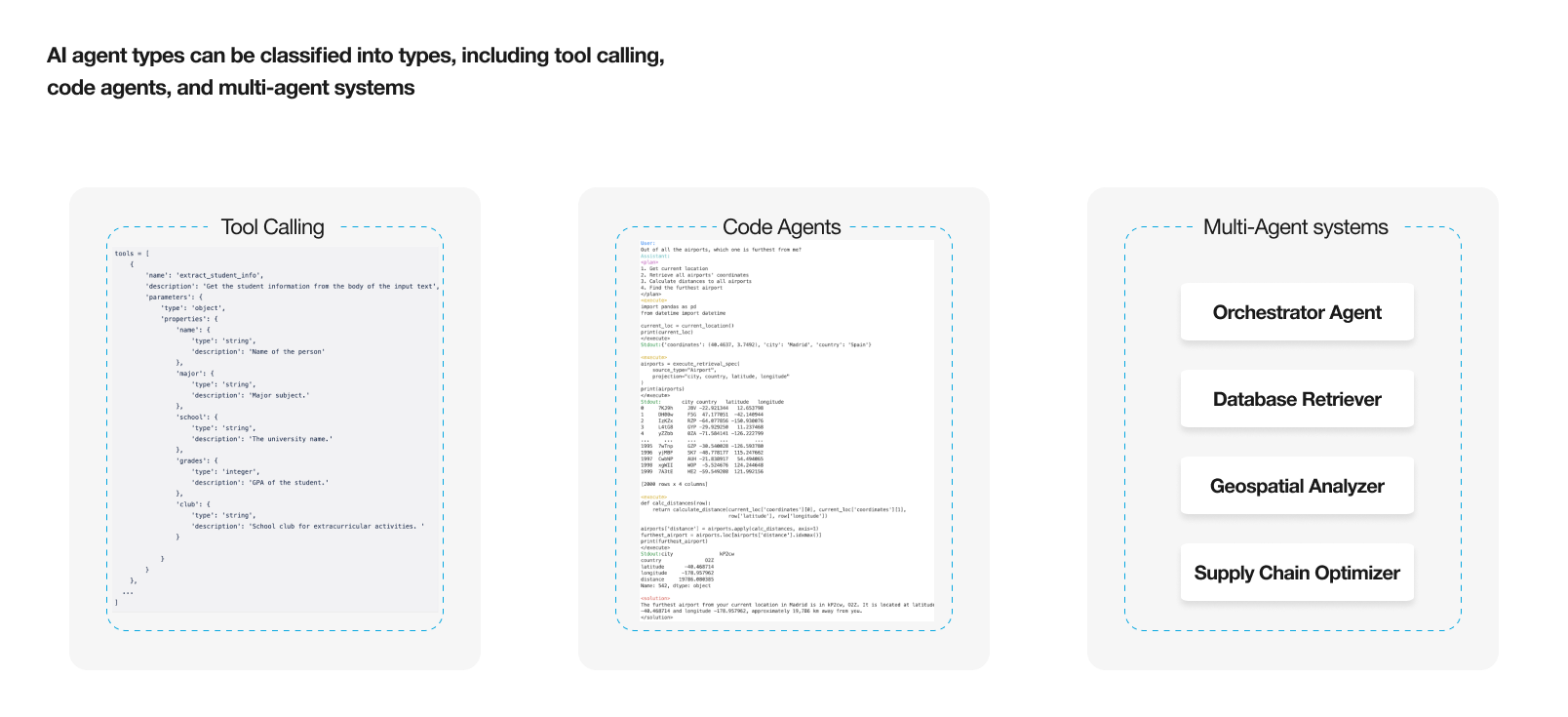

When building or analyzing AI systems, it’s essential to classify agents based on their interaction style and action method. These classifications provide insights into how agents operate and collaborate to solve complex tasks. Two of the most distinctive dimensions are Action Type and Agent Action Method.

Action Type: How do agents interact with their environment?

- Single-Agent Systems: In single-agent systems, one agent operates independently to perform tasks or make decisions without interacting with other agents.

- Multi-Agent Systems: Multi-agent systems consist of multiple agents that may collaborate, compete, or work independently. Often, these systems have an orchestrator agent that delegates tasks to specialized agents, each focusing on a specific function (e.g., geospatial analysis or supply chain optimization).

Agent Action Method: How do agents perform actions?

- Tool-Calling Agents: These agents interact with external tools or services, typically via API calls. They gather data from outside sources and act as intermediaries between the system and external environments.

- Code Agents: These agents focus on data processing, computation, or running algorithms. They can solve optimization problems, calculate distances, or process large datasets, providing in-system solutions without external interaction.

- Hybrid: Multi-agent systems can be set up with a combination of tool-calling and code agents.

AI agents can be classified into types, including tool-calling agents, code agents, and multi-agent systems.

By understanding these key dimensions, businesses can design agent systems tailored to their specific needs, whether for independent, task-focused applications or complex, collaborative workflows that demand coordination across multiple specialized agents.

Comparing AI Agent Architectures: Single-Agent vs. Multi-Agent Systems

When designing AI solutions, understanding the strengths and limitations of different agent architectures is critical. Single-agent and multi-agent systems each offer distinct advantages depending on the complexity and scope of the task. Below is a detailed comparison to guide their application.

Single-Agent vs. Multi-Agent Systems

| Attribute | Single agent | Multi-agent systems |
| --- | --- | --- |
| Speed | Fast | Moderate to slow (coordination adds overhead) |
| Flexibility | Low (there is a limit to what a single agent can do) | High (can have specialized agents to deal with different input and output formats) |
| Can solve complex tasks | Low to medium (limited in the breadth of tasks it can cover) | High (manages and coordinates multiple specialized agents for different tasks) |
| Expertise to configure | Low (can easily be configured by non-technical users) | Medium to high (some agent expertise might be required to configure the system) |

Single-Agent Systems: Best suited for tasks that require fast execution without the need for coordination or collaboration. Single agents are efficient when the task is straightforward and does not involve much complexity.

Example: A chatbot designed to answer FAQs works as a single-agent system, efficiently processing user queries without needing assistance from additional agents.

Multi-Agent Systems: Used when tasks are more complex and require specialization or coordination between different agents. Multiple agents can work together on different parts of a larger problem, such as one agent handling data collection while another processes it. This is not always necessary; sometimes a single agent with tools is sufficient.

Example: In traffic management, one agent may predict congestion, another handles routing, and a third monitors accidents, with the orchestrator ensuring seamless interaction to deliver an integrated solution.
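The traffic management example can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `Agent` and `Orchestrator` classes, the three handler functions, the road names, and the "more than 100 vehicles means congested" rule are all assumptions made up for this sketch, and plain functions stand in for what would be LLM-backed agents.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Agent:
    """A specialized agent: a name plus a function that handles one kind of task."""
    name: str
    handle: Callable[[dict], dict]


def predict_congestion(state: dict) -> dict:
    # Hypothetical rule: a road is congested when its vehicle count exceeds 100.
    congested = [road for road, count in state["vehicle_counts"].items() if count > 100]
    return {"congested": sorted(congested)}


def monitor_accidents(state: dict) -> dict:
    # Roads with a reported accident are treated as blocked.
    return {"blocked": sorted(state["accident_reports"])}


def plan_route(state: dict) -> dict:
    # Avoid any road flagged by the other two agents.
    avoid = set(state["congested"]) | set(state["blocked"])
    return {"route": [road for road in state["candidate_roads"] if road not in avoid]}


class Orchestrator:
    """Runs agents in a fixed order and merges each result into shared state."""

    def __init__(self, agents: List[Agent]):
        self.agents = agents

    def run(self, state: dict) -> dict:
        state = dict(state)  # copy so the caller's input is not mutated
        for agent in self.agents:
            state.update(agent.handle(state))
        return state


orchestrator = Orchestrator([
    Agent("congestion", predict_congestion),
    Agent("accidents", monitor_accidents),
    Agent("routing", plan_route),
])

result = orchestrator.run({
    "vehicle_counts": {"A1": 150, "B2": 40, "C3": 80},
    "accident_reports": ["C3"],
    "candidate_roads": ["A1", "B2", "C3"],
})
print(result["route"])
```

The fixed execution order keeps the sketch short. An orchestrator driven by an LLM would typically decide at run time which agent to call next, which is where the coordination overhead noted in the table comes from.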

Code Agents vs. Tool-Calling Agents

| Attribute | Tool-calling agents | Code agents |
| --- | --- | --- |
| Speed | Medium (tool calls are verbose, so LLM output generation takes longer) | Fast (efficient at LLM generation and task processing) |
| Flexibility | Low (requires very specific input and output formats; all outputs must be passed as strings) | High (can deal with multiple output formats, e.g., variables, tables, images) |
| Performance on data-intensive tasks | Low to medium (relies on external APIs with simple inputs and outputs) | High (can perform complex operations on tool calls and output processing) |
| Expertise to configure | Low to medium (can easily be configured by non-technical users) | Medium to high (agent and code expertise might be required to configure) |

Code Agents: These agents are useful for tasks involving complex computations, processing large datasets, or performing algorithmic operations internally. They excel when flexibility is needed in handling different input/output formats.

Example: An agent that performs machine learning model training and optimization. It runs internally, processes data, and outputs results like accuracy metrics or predictions, all without needing external calls.

Tool-Calling Agents: Ideal for tasks that involve interacting with external tools or APIs, such as gathering data from web services. They are best for situations where external input is required but without the need for heavy internal processing.

Example: An agent that requests real-time weather data from an external API for analysis in a logistics application.

Hybrid Multi-Agent Systems: Hybrid multi-agent systems leverage the strengths of both tool-calling and code agents to create a powerful, flexible framework for addressing complex tasks. By integrating these two approaches, hybrid systems achieve a balance between external data interaction and internal computation, making them ideal for multifaceted applications.

Example: A hybrid system might use a tool-calling agent to gather external supply chain data and a code agent to process the data and generate optimization recommendations.
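A rough sketch of that hybrid flow is shown below. Everything in it is assumed for illustration: `get_supplier_quotes` is a hypothetical stand-in for an external API, the supplier data is invented, and both the tool-call JSON and the "generated" code snippet are hard-coded where a real system would have an LLM produce them. The sketch also runs the snippet with a bare `exec`, which is only acceptable here because the code is fixed; a real code agent would need a sandboxed interpreter.

```python
import json


def get_supplier_quotes(part: str) -> str:
    """Hypothetical stand-in for an external supply chain API."""
    quotes = [
        {"supplier": "Acme", "unit_cost": 4.20, "lead_days": 12},
        {"supplier": "Borealis", "unit_cost": 3.90, "lead_days": 30},
        {"supplier": "Cobalt", "unit_cost": 4.05, "lead_days": 9},
    ]
    return json.dumps(quotes)  # tool results travel back as strings


TOOLS = {"get_supplier_quotes": get_supplier_quotes}


def run_tool_call(call_json: str) -> str:
    """Tool-calling step: parse a JSON request and dispatch to the named tool."""
    call = json.loads(call_json)
    return TOOLS[call["tool"]](**call["arguments"])


def run_code_agent(code: str, variables: dict) -> dict:
    """Code-agent step: run a snippet against in-memory Python objects."""
    namespace = dict(variables)
    exec(code, namespace)  # illustration only; real systems sandbox this
    return namespace


# Step 1: the tool-calling agent fetches external data (request hard-coded here).
tool_call = '{"tool": "get_supplier_quotes", "arguments": {"part": "valve-17"}}'
raw_quotes = run_tool_call(tool_call)

# Step 2: the code agent processes the data (snippet hard-coded here).
generated_code = """
eligible = [q for q in quotes if q["lead_days"] <= max_lead_days]
best = min(eligible, key=lambda q: q["unit_cost"])
"""
outcome = run_code_agent(
    generated_code,
    {"quotes": json.loads(raw_quotes), "max_lead_days": 14},
)
print(outcome["best"]["supplier"])
```

Note the difference in what crosses each boundary: the tool call exchanges strings in a fixed schema, while the code step works directly on Python lists and dictionaries, which is the flexibility gap described in the comparison table.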

The choice between single-agent and multi-agent systems depends on the specific needs of the task. Single-agent systems deliver speed and simplicity for focused, straightforward operations, while multi-agent systems shine in dynamic, multi-faceted environments where specialization and coordination are critical. Understanding these trade-offs ensures that businesses can select the optimal architecture to maximize efficiency and performance.

Continuous Learning and Reasoning in AI Agents

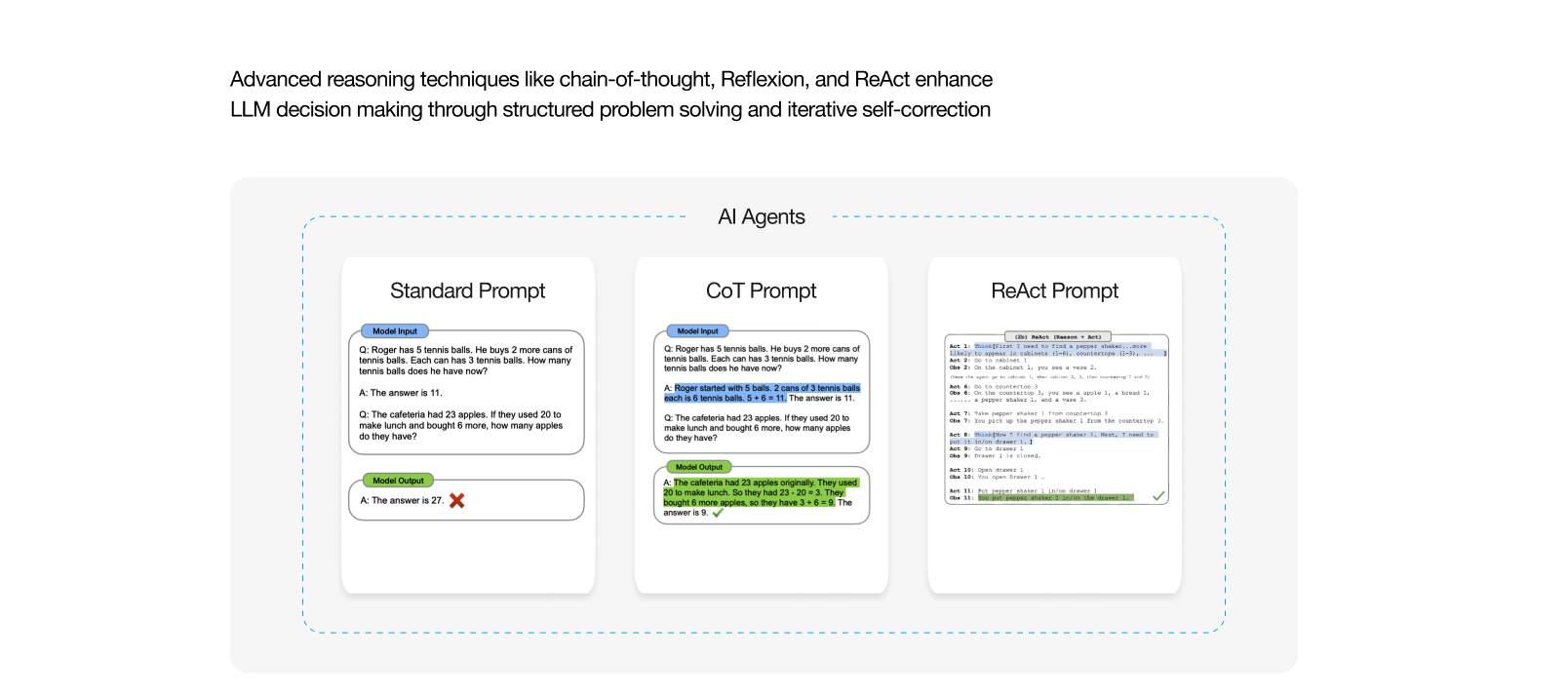

AI agents continuously evolve using advanced reasoning techniques:

- Standard Prompting: Direct generation of responses without breaking down tasks into intermediate steps. Best for simple tasks, factual queries, and quick answers. However, it struggles with complex reasoning or multi-step tasks since there is no clear structure or correction mechanism.

- Chain-of-Thought (CoT): Breaks tasks into smaller steps for step-by-step solutions. Ideal for tasks that involve logical or multi-step reasoning, like solving math problems. The downside is that if an early step is incorrect, the entire reasoning process may still lead to a wrong conclusion without a way to self-correct.

- ReAct (Reasoning + Acting): Combines step-by-step reasoning with real-time actions, allowing the model to interact with an environment and adjust based on feedback. It is suitable for dynamic tasks requiring interaction and real-time decisions. However, this method requires an interactive system and depends on accurate and reliable feedback for self-correction.

- Reflexion: Uses feedback loops to reflect on past outputs and iteratively improve the model’s performance. This technique is great for tasks that need refinement and complex reasoning over multiple attempts, as it provides a mechanism to learn from mistakes and self-correct. It can be more time-consuming, as the model may need multiple iterations to arrive at an optimal solution.
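To make the ReAct pattern concrete, here is a minimal sketch of the thought, action, observation loop. It is an illustration under stated assumptions rather than a reference implementation: `scripted_model` is a hard-coded stand-in for an LLM, and the two tools (`lookup_distance_km`, `add`) and their distance data are hypothetical.

```python
from typing import Callable, Dict, List, Tuple


def lookup_distance_km(route: str) -> str:
    # Hypothetical tool with made-up distances.
    distances = {"Lyon-Paris": 465, "Paris-Lille": 225}
    return str(distances.get(route, "unknown route"))


def add(expression: str) -> str:
    # Hypothetical tool: sums integers written as "a+b".
    return str(sum(int(x) for x in expression.split("+")))


TOOLS: Dict[str, Callable[[str], str]] = {"lookup": lookup_distance_km, "add": add}


def scripted_model(history: List[str]) -> Tuple[str, str, str]:
    """Stand-in for an LLM: returns (thought, action, action_input) from the history."""
    observations = [h.split(": ", 1)[1] for h in history if h.startswith("Observation")]
    if len(observations) == 0:
        return ("I need the first leg.", "lookup", "Lyon-Paris")
    if len(observations) == 1:
        return ("I need the second leg.", "lookup", "Paris-Lille")
    if len(observations) == 2:
        return ("Now add both legs.", "add", f"{observations[0]}+{observations[1]}")
    return ("I have the total.", "finish", observations[-1])


def react(question: str, model, max_steps: int = 6) -> str:
    """Alternate reasoning and acting until the model finishes or the budget runs out."""
    history = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, action_input = model(history)
        history.append(f"Thought: {thought}")
        if action == "finish":
            return action_input
        observation = TOOLS[action](action_input)  # act, then feed the result back
        history.append(f"Action: {action}[{action_input}]")
        history.append(f"Observation: {observation}")
    return "no answer within step budget"


answer = react("How far is Lyon to Lille via Paris?", scripted_model)
print(answer)
```

Each observation is appended to the history before the next decision, so the model's next step depends on what the environment actually returned. That dependency is also why ReAct is only as reliable as the feedback its tools provide.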

Advanced reasoning techniques like chain-of-thought, Reflexion, and ReAct enhance LLM decision making through structured problem solving and iterative self-correction

Evaluating Performance of Thought Techniques

The effectiveness of reasoning techniques in large language models (LLMs) is evident in tasks like HumanEval Rust, a benchmark for coding challenges. By integrating advanced methods such as test generation and self-reflection, LLMs can significantly improve their accuracy and decision-making abilities.

For example, the Reflexion method incorporates feedback loops and iterative self-correction, enabling the model to refine outputs and enhance problem-solving capabilities. The comparison below demonstrates the impact of these techniques:

| Approach | Test generation | Self-reflection | Accuracy |
| --- | --- | --- | --- |
| Base model | False | False | 0.60 |
| Reflexion | True | True | 0.68 |

Source: Reflexion Paper (Noah Shinn, 2023); Self-Reflection Paper (Matthew Renze, 2024); HumanEval Rust Dataset (diversoailab, 2024).

In this comparison, adding test generation and self-reflection raises accuracy from 0.60 to 0.68, a gain of 8 percentage points. This suggests that equipping LLMs with validation and reasoning techniques makes them more robust and reliable.
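The combination of test generation and self-reflection can be sketched as a simple retry loop. This is a simplified illustration and not the implementation from the cited papers: `scripted_generator` is a hard-coded stand-in for an LLM that writes code, the tests are fixed here rather than model-generated, and the task (sum of the integers 1 through n) is chosen only to keep the example small.

```python
from typing import Callable, List, Optional, Tuple

Test = Tuple[int, int]  # (input, expected output)


def run_tests(fn: Callable[[int], int], tests: List[Test]) -> Optional[str]:
    """Return None if every test passes, else a description of the first failure."""
    for x, expected in tests:
        got = fn(x)
        if got != expected:
            return f"f({x}) returned {got}, expected {expected}"
    return None


def reflexion_loop(generate, tests: List[Test], max_attempts: int = 3):
    """Generate, test, reflect on the failure, and retry with the reflection in memory."""
    reflections: List[str] = []
    for attempt in range(1, max_attempts + 1):
        candidate = generate(reflections)
        failure = run_tests(candidate, tests)
        if failure is None:
            return candidate, attempt, reflections
        # The verbal feedback is stored and passed to the next generation step.
        reflections.append(f"Attempt {attempt} failed: {failure}.")
    return None, max_attempts, reflections


def scripted_generator(reflections: List[str]) -> Callable[[int], int]:
    """Stand-in for an LLM: the first draft is off by one, the retry is corrected."""
    if not reflections:
        return lambda n: n * (n - 1) // 2  # buggy first draft
    return lambda n: n * (n + 1) // 2  # revised after reading the reflection


# In a Reflexion-style setup these tests would be generated by the model itself.
tests = [(1, 1), (4, 10)]
solution, attempts, notes = reflexion_loop(scripted_generator, tests)
print(attempts, notes)
```

The loop also shows the cost side of the technique: every failed attempt adds another generation and test cycle, which is why Reflexion tends to be slower than single-pass prompting.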

Long-Term Memory for Enhanced Adaptation

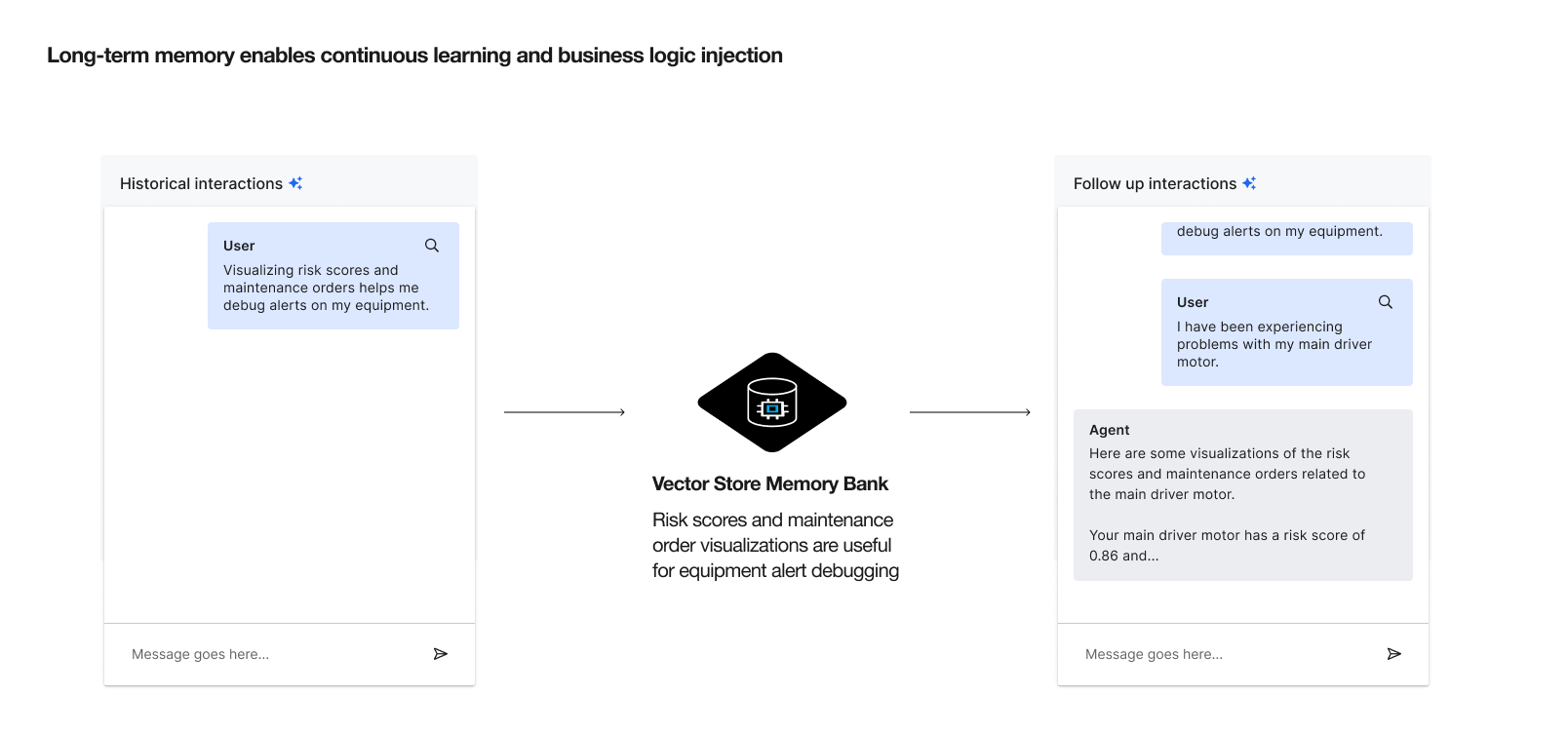

Long-term memory is a cornerstone of advanced LLMs, enabling them to recall knowledge from past interactions and adapt to new scenarios seamlessly. By using a vector database to embed these interactions, the agents can perform retrieval-augmented generation (RAG).

This allows these systems to retrieve and apply relevant historical data at decision time, improving reasoning, accuracy, and context awareness.

By combining thought techniques like Reflexion with long-term memory capabilities, AI agents achieve more than just functional improvements: they evolve continuously. This adaptability empowers businesses to build systems capable of addressing complex, dynamic challenges while meeting user-specific needs.

For instance, an agent can remember a user’s preferences for optimizing supply chain logistics, applying these insights automatically during subsequent interactions.

Long-term memory enables continuous learning and business logic injection

How It Works

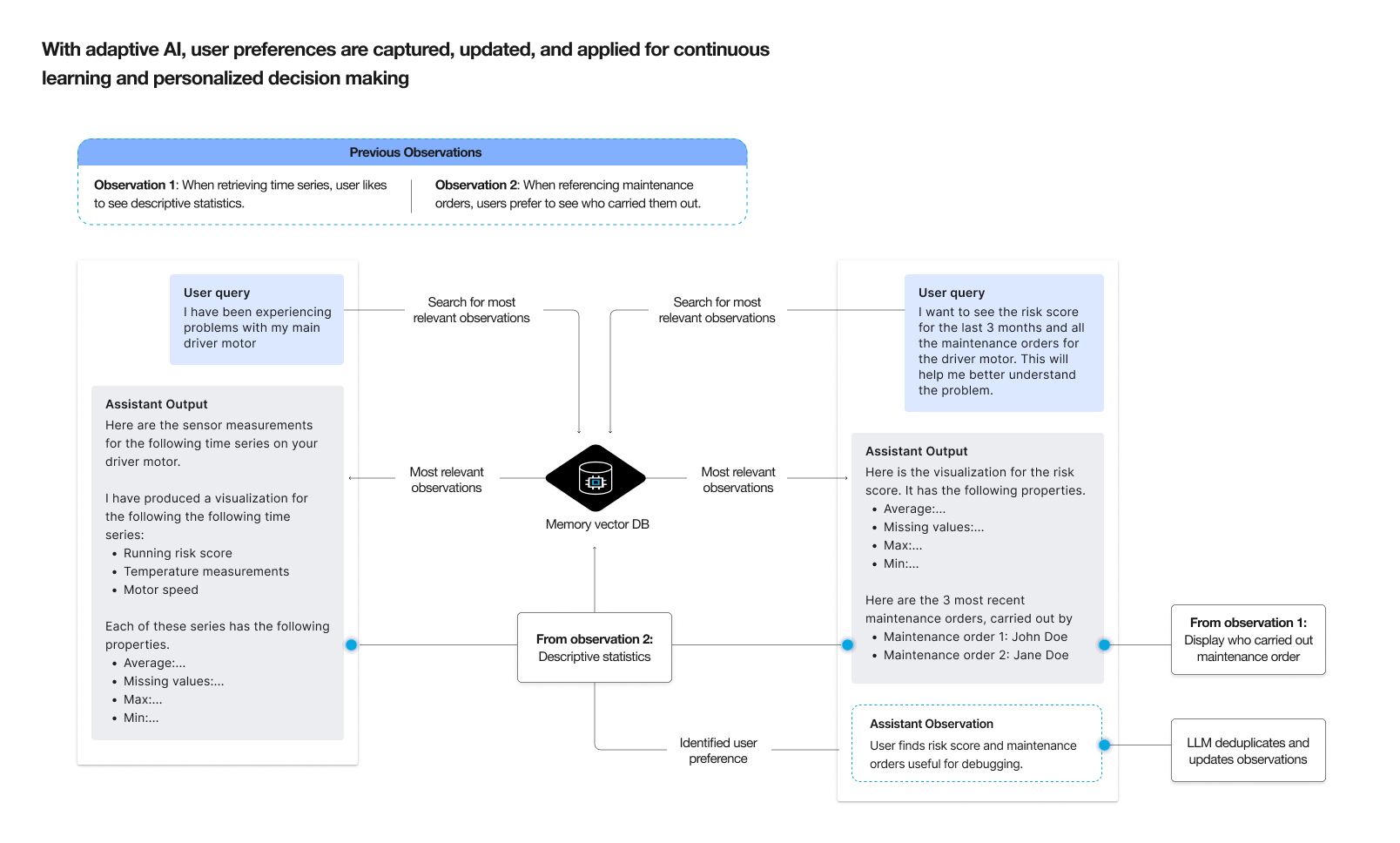

- After each interaction, the LLM generates observations about user preferences or contextual insights.

- These observations are embedded into a vector database, ensuring efficient retrieval during future interactions.

- The system updates records dynamically, avoiding redundancies while continuously learning.

Consider a supply chain optimization scenario. An agent with long-term memory can remember a user’s preference for prioritizing certain suppliers or regions. When queried again, the agent applies this historical knowledge to deliver recommendations that align with the user’s goals.
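The store, deduplicate, and retrieve cycle described above can be sketched as follows. This is a toy version under loud assumptions: `embed` uses bag-of-words counts in place of a learned embedding model, `MemoryStore` is an in-memory list standing in for a vector database, and the 0.8 deduplication threshold and the sample observations are invented for the example.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """In-memory stand-in for a vector database of agent observations."""

    def __init__(self, dedupe_threshold: float = 0.8):
        self.records: List[Tuple[str, Counter]] = []
        self.dedupe_threshold = dedupe_threshold

    def add(self, observation: str) -> None:
        vec = embed(observation)
        for i, (_, existing) in enumerate(self.records):
            if cosine(vec, existing) >= self.dedupe_threshold:
                self.records[i] = (observation, vec)  # update instead of duplicating
                return
        self.records.append((observation, vec))

    def retrieve(self, query: str, k: int = 1) -> List[str]:
        query_vec = embed(query)
        ranked = sorted(self.records, key=lambda r: cosine(query_vec, r[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = MemoryStore()
# Observations an LLM might write after each interaction (hard-coded here).
memory.add("User prefers suppliers located in the Midwest region")
memory.add("User prefers suppliers located in the Midwest region first")  # near-duplicate
memory.add("User wants reports delivered as weekly summaries")

question = "Which suppliers should we prioritize in the Midwest?"
recalled = memory.retrieve(question, k=1)
prompt = f"Known preference: {recalled[0]}\nQuestion: {question}"
print(prompt)
```

The second observation overwrites the first rather than being stored alongside it, which is one simple way to keep records current without redundancy. The retrieved text is then prepended to the prompt, which is the retrieval-augmented generation step.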

With adaptive AI, user preferences are captured, updated, and applied for continuous learning and personalized decision making

Adapting to Future Challenges

By classifying agents effectively and leveraging techniques for continuous learning and reasoning, businesses can build AI systems that not only meet today’s demands but adapt seamlessly to future challenges.

To learn more about how C3 Generative AI drives innovation and delivers enterprise-grade solutions, explore the full range of benefits.

About the Author

Ivan Robles is a Lead Data Scientist on the Data Science team at C3 AI, where he develops machine learning and optimization solutions across a variety of industries. He has competed in Kaggle AI competitions, ranking in the top 1.5% globally. He received his Master of Science in Advanced Chemical Engineering with Process Systems Engineering from Imperial College London.