The C3.Optim package is an end-to-end solution built to support complex optimization workflows

By Larry Jin, Senior Manager, Data Science, C3 AI and Josh Zhang, Lead Data Scientist, C3 AI

Operations research (OR), often known as optimization, provides businesses with powerful tools and methodologies to tackle complex decision-making challenges. However, one of the most challenging aspects of optimization is putting it into practice. With frequent changes to business goals and inconsistent data quality, organizations can have a difficult time releasing optimization workflows and processes that produce long-term benefits and generate value.

What Is Optimization and Operations Research?

Optimization provides enterprises with analytical approaches that enable informed, data-driven decisions to improve performance and profitability. OR relies on mathematical modeling, statistical analysis, and optimization techniques to determine the most efficient and effective courses of action. For example, OR can optimize manufacturing sequences to minimize production time and costs, schedule agricultural activities to maximize yield and efficiency, and manage retail inventory to balance supply with customer demand.

While modeling and prototyping in operations research are critical, the most challenging aspect is productionization. This difficulty arises from several factors:

- Frequent changes in business requirements necessitate iterative adjustments to models

- Extensive manual efforts are needed to fine-tune models to meet business expectations

- Tracking experiment runs can be complex

- Resolving data quality issues from upstream sources is often necessary

These challenges can significantly delay implementation and reduce the effectiveness of OR solutions if not managed effectively.

How C3 AI Approaches Optimization

At C3 AI, we recognize that the complexity of productionized optimization workflows increases as production scales. For example, a solution workflow may consist of multiple stages, each representing a large optimization model.

To handle these complexities, C3 AI offers a high-level framework resembling a computation graph to manage intricate workflows. The same high-level framework is also more than capable of handling simpler workflows, such as a single large optimization model with a few pre-processing and post-processing steps. Additionally, we provide a low-level framework to keep individual optimization models organized as they grow.

These frameworks are industry- and product-agnostic, supporting a variety of customers across different product offerings. Both frameworks are features of the C3.Optim package, which we will discuss in the next section.

How C3.Optim Helps

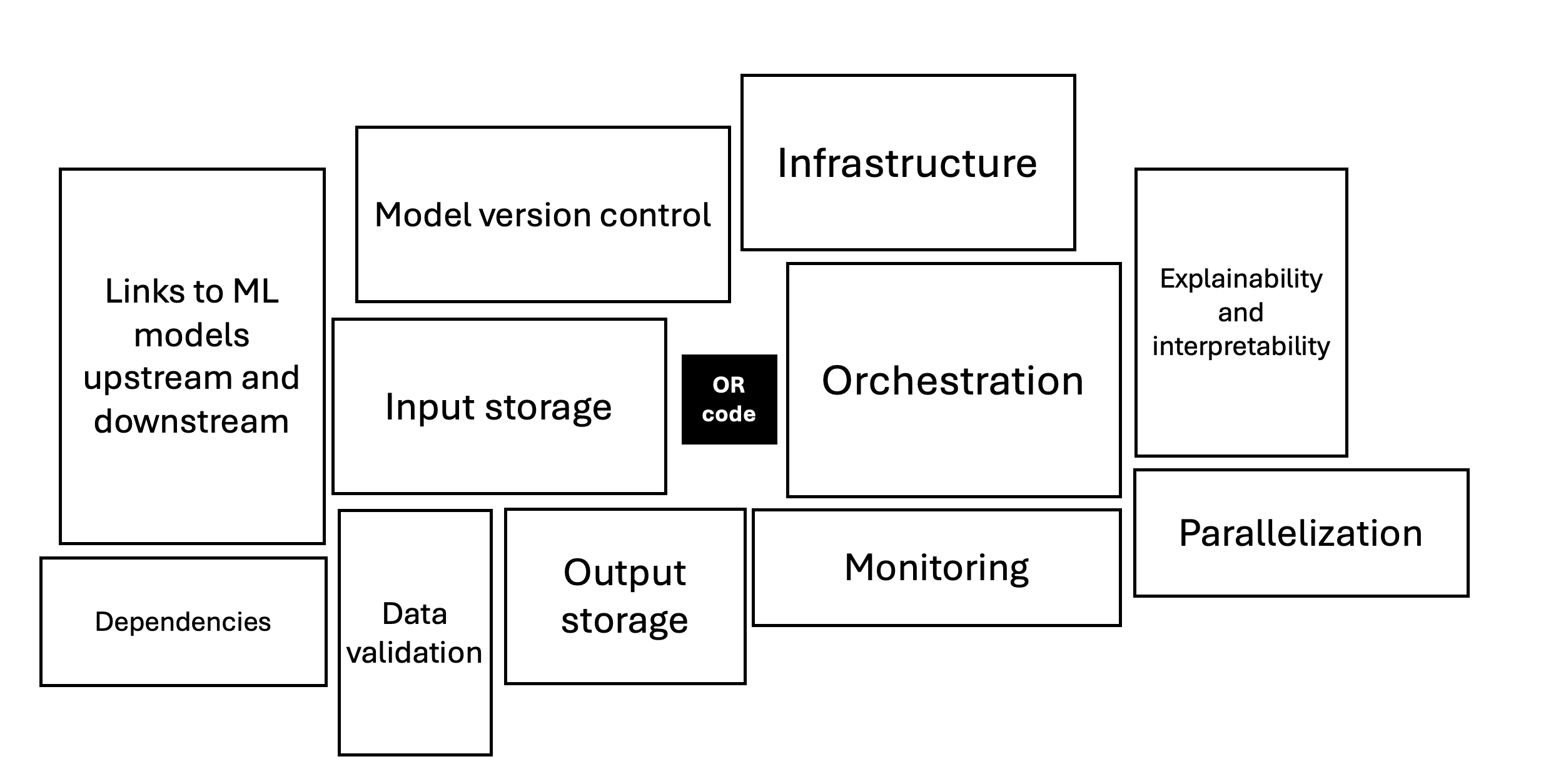

The famous 2015 paper by Sculley et al. discussed the “plumbing” concept for machine learning models, and the need for plumbing is just as real when putting OR models into production. As illustrated in Figure 1, only a tiny fraction of the code in many OR systems is dedicated to solving problems. Much of the remainder involves essential infrastructure tasks such as model version control, input/output storage, orchestration, monitoring, parallelization, and ensuring explainability and interpretability. The C3.Optim package is designed to facilitate the productionization of any data science solution involving optimization, specifically OR. It addresses these productionization challenges, making it easier to implement and scale OR solutions effectively.

Figure 1. Only a tiny fraction of the code in many OR systems is actually devoted to solving — much of the remainder may be described as “plumbing.”

Two representative features within the C3.Optim package are OptimFormulation and OptimFlow. These components are designed to aid developers in creating maintainable and scalable productionization paths for optimization solutions.

- OptimFormulation constructs a dependency graph for each component of an OR model, such as constraints, variables, objectives, and data fields. This facilitates structured updates and iterations of the OR model, ensuring that changes in one component automatically propagate to dependent components, thereby maintaining consistency and integrity.

- OptimFlow helps developers build end-to-end optimization solutions by orchestrating workflows that include one or multiple steps involving OR models. It efficiently manages data flows and the execution of optimization tasks, enabling seamless integration of OR models within broader business processes.

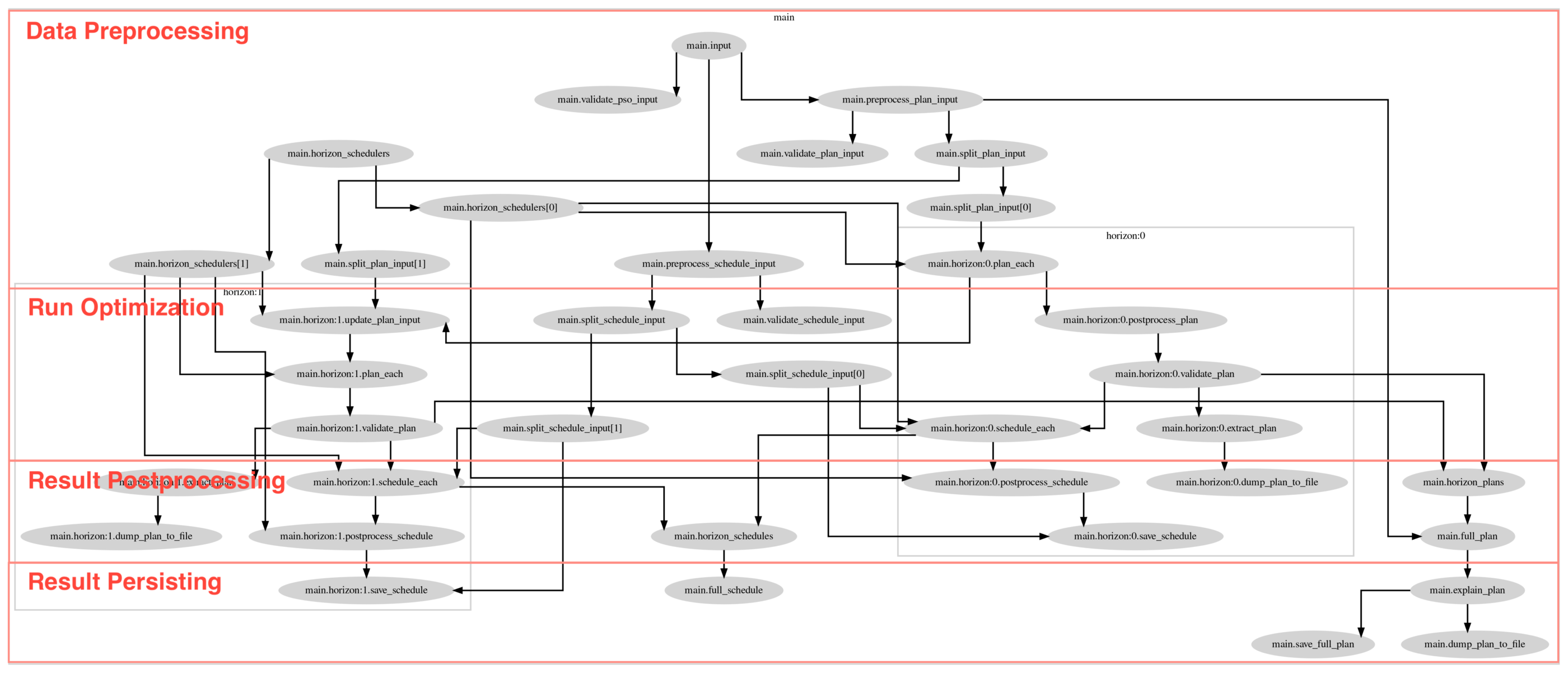

Consider a real-life use case for a productionized OR solution in supply chain management. The process begins with data collection from multiple sources, followed by validation and cleaning. Next, the optimization model created with OptimFormulation is solved in multiple stages, breaking the problem into smaller instances over the time horizon. Finally, the results are concatenated and post-processed. OptimFlow streamlines this entire process, allowing developers to focus on improving the optimization model rather than managing workflow intricacies. Figure 2 below visualizes a complex optimization workflow using OptimFlow, showcasing the multiple stages and dependencies required for a comprehensive solution.

Figure 2. An optimization workflow visualized via OptimFlow.

OptimFormulation and OptimFlow empower developers to create robust, scalable optimization solutions that can easily adapt to evolving business requirements and operational constraints.

OptimFormulation

As mentioned previously, the productionization of OR models is often difficult due to frequent changes in business requirements, necessitating iterative model adjustments. These changes typically require extensive manual efforts to fine-tune models to meet evolving business expectations. OptimFormulation addresses these challenges by streamlining model iterations through a design based on a dependency graph. This reduces the manual effort needed for fine-tuning and ensures that models remain aligned with business needs, facilitating efficient and scalable productionization of OR solutions.

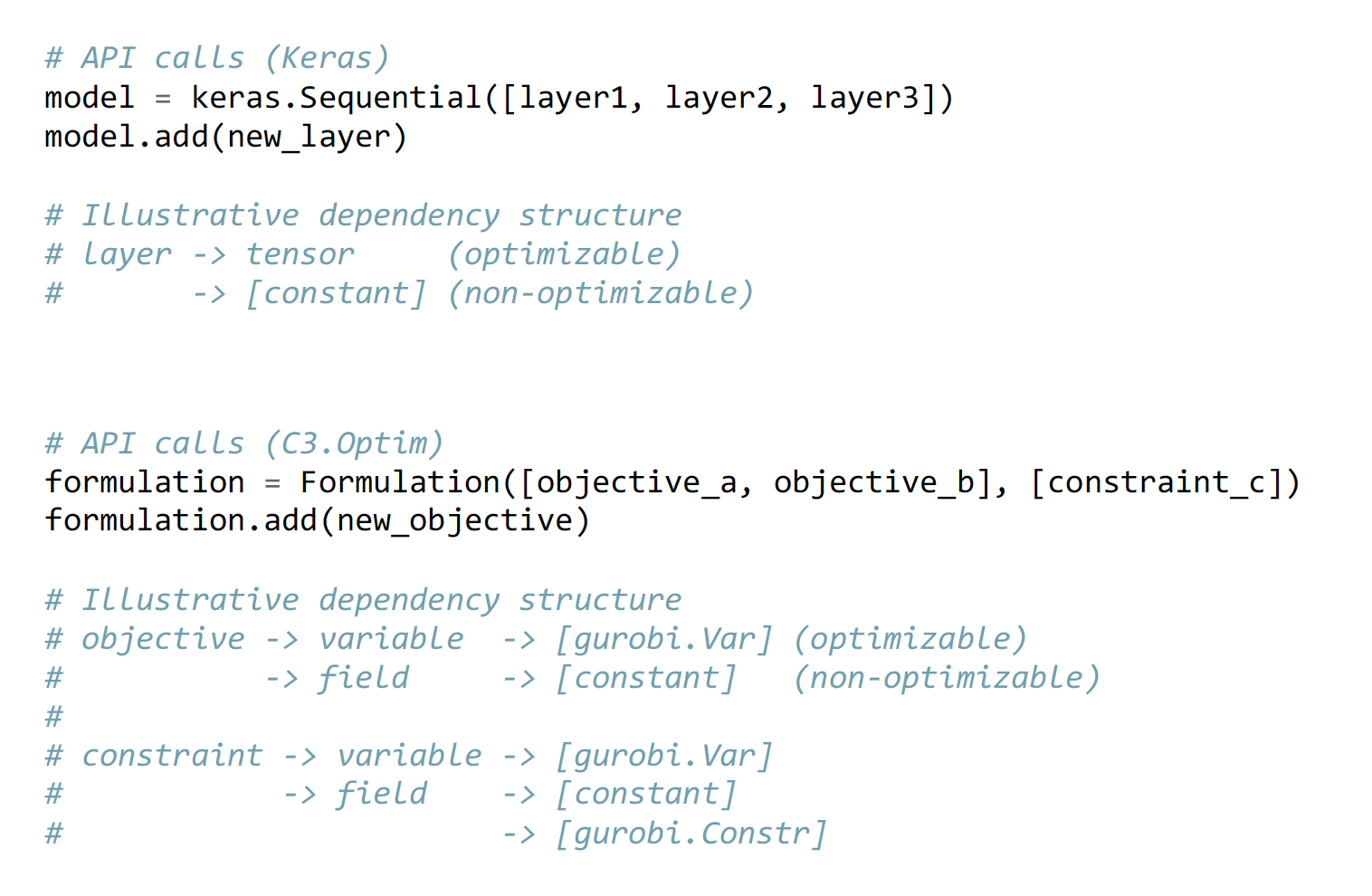

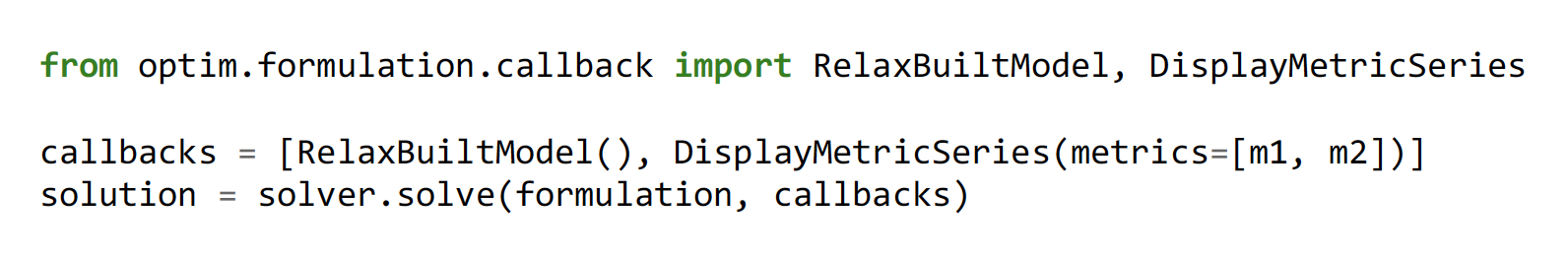

OptimFormulation helps developers build an OR formulation in a way similar to how Keras constructs a deep learning (DL) model. In Keras, the main components of a DL model are represented by layers, such as dense layers, which are stacked to create a neural network. Similarly, OptimFormulation represents the main components of an OR model — data fields, variables, constraints, and objectives — by constructing a dependency graph. This graph ensures structured updates and iterations, making the development process more efficient and scalable.

As illustrated in the code below, Keras builds a sequential DL model by stacking a few pre-built dense layers together with specified activation types. This modular approach allows easy modification and extension of the model. OptimFormulation employs a comparable strategy for OR models. Developers define the OR model by linking pre-built formulation components — objectives, constraints, and variables — together in a structured manner. This authoring process not only facilitates clear and maintainable model definitions, but also ensures that any changes to one component propagate correctly throughout the model, maintaining consistency and integrity.
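To make the dependency-graph idea concrete, here is a minimal, library-independent sketch in plain Python. It is not the C3.Optim API: the component names and the helper functions `build_order` and `affected_by` are hypothetical, and the standard-library `graphlib` module stands in for whatever graph machinery the package uses internally. It shows two things the text describes: ordering components so that dependencies come first, and finding everything a change to one component would touch.

```python
from graphlib import TopologicalSorter

# Hypothetical component graph for a toy knapsack-style model.
# Each key depends on the components listed in its value set.
dependencies = {
    "weights": set(),                                     # data field
    "values": set(),                                      # data field
    "capacity": set(),                                    # data field
    "select": {"weights"},                                # variable (one per item)
    "capacity_limit": {"select", "weights", "capacity"},  # constraint
    "total_value": {"select", "values"},                  # objective
}


def build_order(graph):
    """Return the components in an order where dependencies come first."""
    # static_order() raises graphlib.CycleError on circular dependencies,
    # which doubles as a basic validation step.
    return list(TopologicalSorter(graph).static_order())


def affected_by(graph, changed):
    """Return every component that directly or indirectly depends on `changed`."""
    affected = set()
    frontier = [changed]
    while frontier:
        current = frontier.pop()
        for name, deps in graph.items():
            if current in deps and name not in affected:
                affected.add(name)
                frontier.append(name)
    return affected


order = build_order(dependencies)
impacted = affected_by(dependencies, "weights")
print(order)
print(sorted(impacted))
```

In this sketch, changing the `weights` field marks the `select` variable, the `capacity_limit` constraint, and the `total_value` objective as needing a rebuild, while `values` and `capacity` are left alone.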

The benefits of building an OR model in such a structured way via OptimFormulation include the following:

- Dependency graph:

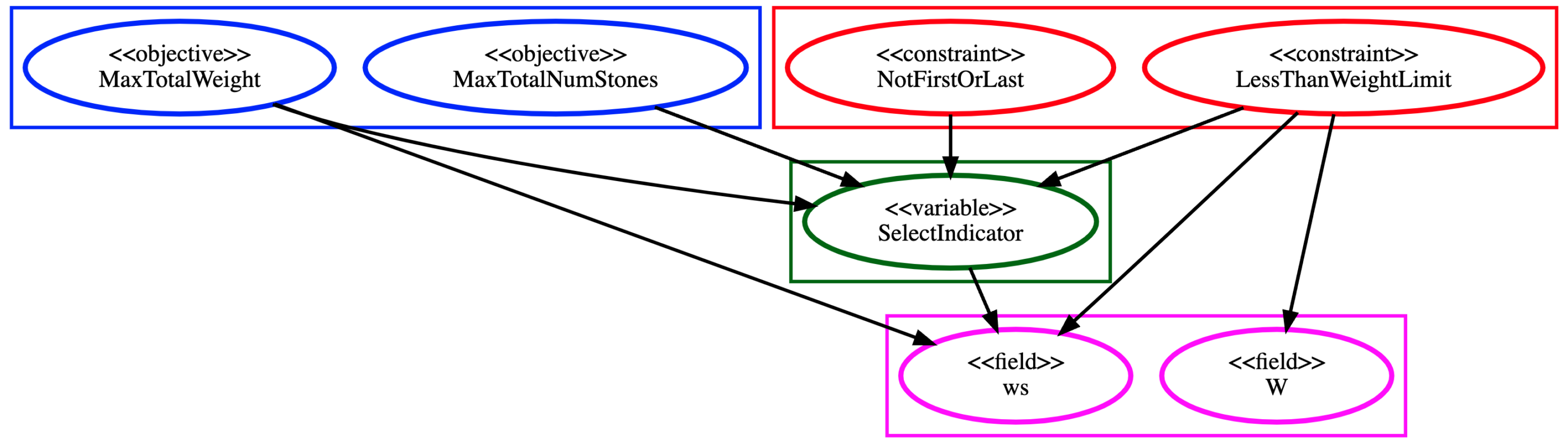

OptimFormulation enables dependency sorting and automatic validation for the components within the graph. It reduces the developer’s workload by ensuring the correctness of the formulation during iterative model adjustments. In the example shown below in Figure 3, the dependency graph illustrates all the formulation components in a simple OR model. The blue nodes represent objectives, the red nodes represent constraints, the green nodes represent variables, and the pink nodes represent data fields.

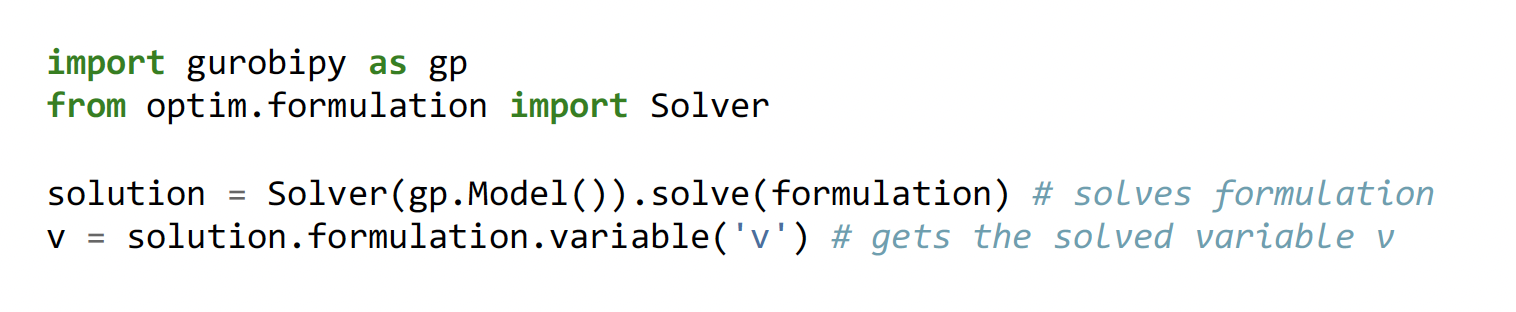

Figure 3. An example of a dependency graph created with OptimFormulation.

- Full lifecycle tracking: This feature offers a single point of entry for the developer, which reduces the maintenance and bookkeeping effort needed. The Solution object in the OptimFormulation framework is linked to the Formulation object, which is in turn linked to Data objects, and these Data objects are linked to Field objects. As a result, fetching the Formulation object is all that is needed for debugging and future maintenance by developers.

The example in the code snippet below demonstrates that the developer can solve the formulation, then get the solved variable in the solution in two lines:

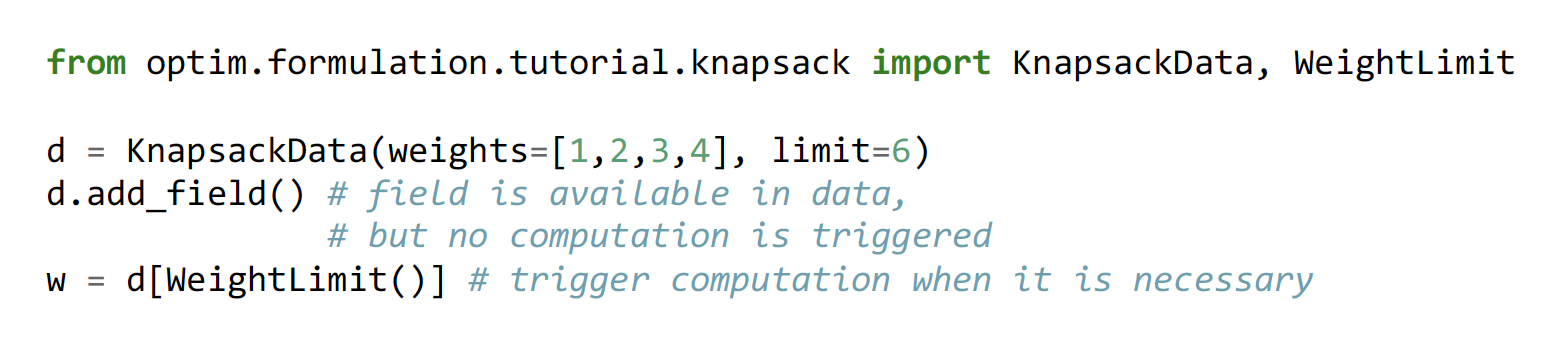
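The chain of linked objects can also be pictured with a small stand-alone sketch. The classes below reuse the names Solution, Formulation, Data, and Field from the text, but their attributes and the brute-force `solve` method are assumptions made for illustration and do not reflect the actual C3.Optim classes.

```python
from dataclasses import dataclass
from itertools import product


@dataclass
class Field:
    name: str
    value: object


@dataclass
class Data:
    fields: dict  # field name -> Field


@dataclass
class Formulation:
    name: str
    data: Data

    def solve(self):
        """Brute-force a tiny 0/1 knapsack; stands in for a real solver call."""
        weights = self.data.fields["weights"].value
        values = self.data.fields["values"].value
        capacity = self.data.fields["capacity"].value
        best_value, best_pick = -1, None
        for pick in product([0, 1], repeat=len(weights)):
            weight = sum(w * x for w, x in zip(weights, pick))
            value = sum(v * x for v, x in zip(values, pick))
            if weight <= capacity and value > best_value:
                best_value, best_pick = value, pick
        return Solution(self, {"select": list(best_pick)}, best_value)


@dataclass
class Solution:
    formulation: Formulation  # link back to the model that produced it
    variables: dict           # variable name -> solved values
    objective: float


data = Data({
    "weights": Field("weights", [2, 3, 4]),
    "values": Field("values", [3, 4, 5]),
    "capacity": Field("capacity", 5),
})
formulation = Formulation("toy_knapsack", data)

# Solve, then read the solved variable.
solution = formulation.solve()
selected = solution.variables["select"]

# Everything used to produce the result stays reachable from one object.
capacity_used = solution.formulation.data.fields["capacity"].value
print(selected, solution.objective, capacity_used)
```

Because each object keeps a reference to the one it was built from, a developer holding the result can walk back to the exact inputs without separate bookkeeping.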

- Lazy computation and caching: This feature cuts down all unnecessary computations during model tuning and reduces the idle time of the developer while adjusting the model. All fields are computed lazily using the data[f] command, where f represents the field instance. The framework only evaluates the field value when it is genuinely needed for downstream computation.

The code snippet below demonstrates this feature with a knapsack example:
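As a minimal sketch of the mechanism, assuming a hypothetical `LazyData` container that is keyed by field name rather than by field instance (it is not the C3.Optim implementation), lazy evaluation with caching could look like this:

```python
class LazyData:
    """Hypothetical container that evaluates fields on first access and caches them."""

    def __init__(self):
        self._definitions = {}  # field name -> function(data) computing the value
        self._cache = {}        # field name -> computed value
        self.eval_counts = {}   # field name -> number of evaluations (for illustration)

    def define(self, name, compute):
        """Register how to compute a field; nothing is evaluated yet."""
        self._definitions[name] = compute

    def __getitem__(self, name):
        if name not in self._cache:
            self.eval_counts[name] = self.eval_counts.get(name, 0) + 1
            self._cache[name] = self._definitions[name](self)
        return self._cache[name]


data = LazyData()
data.define("weights", lambda d: [2, 3, 4])
data.define("values", lambda d: [3, 4, 5])
# Value density of each knapsack item; depends on two other fields.
data.define("density", lambda d: [v / w for v, w in zip(d["values"], d["weights"])])
# Defined but never requested, so it is never computed.
data.define("unused_report", lambda d: sum(d["weights"]) * 1000)

density_first = data["density"]   # triggers evaluation of density, values, weights
density_second = data["density"]  # served from the cache
print(density_first)
print(data.eval_counts)
```

Each requested field is evaluated exactly once, and `unused_report` is never evaluated because nothing downstream asks for it.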

- Complex metric: This feature provides visibility to the developers regarding the improvements on business KPIs during each iterative effort.

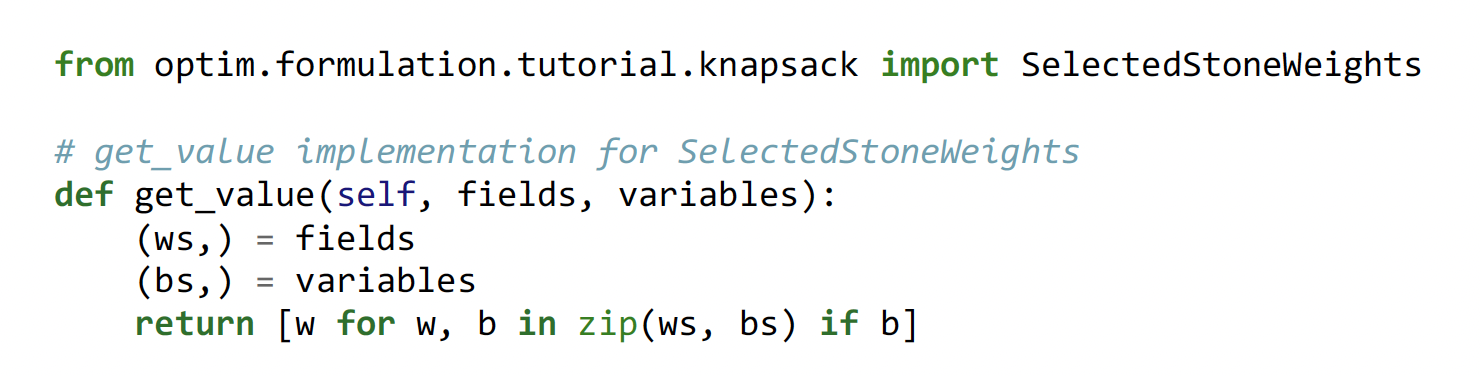

OptimFormulation is not limited to simple objectives or linear expressions commonly used for tracking solution quality. It also supports defining complex metrics that require aggregating several groups of fields and variables. This capability extends to any Python operation needed to compute these metrics, offering flexibility in assessing the business impact of solutions. These complex metrics can be evaluated during the solving process or upon finding the final solution, providing data scientists with immediate insights into the business implications at solve time.

The code snippet below demonstrates an example of creating a metric that returns a list of values:

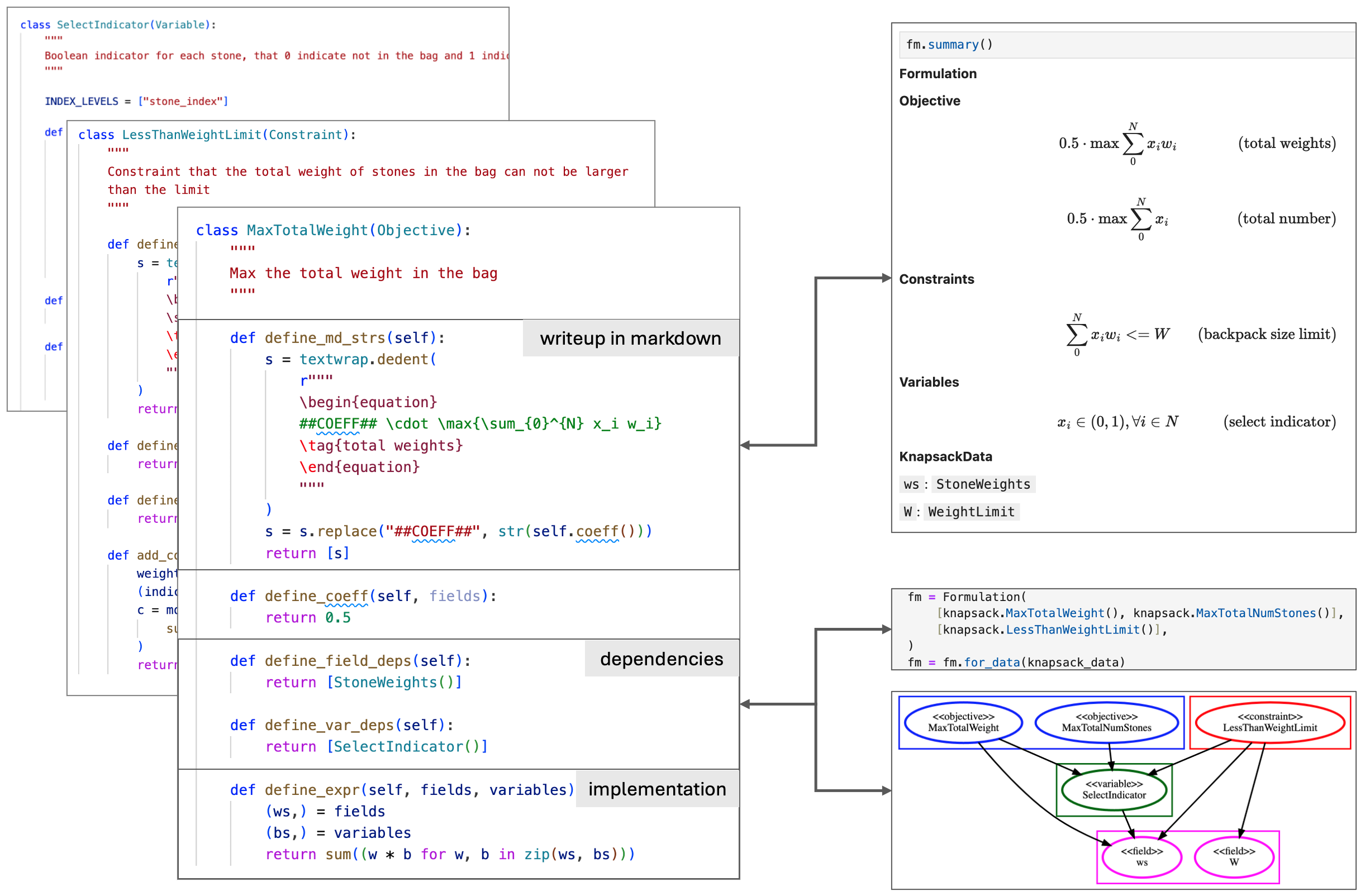
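For readers without access to the package, a metric of this kind can be pictured as an ordinary Python function over solved variables and data fields. The function name `utilization_by_bin` and its inputs are hypothetical and chosen only to show a metric that aggregates several groups and returns a list:

```python
def utilization_by_bin(assignment, weights, capacities):
    """Return the fraction of capacity used in each bin, as a list.

    assignment: item name -> index of the bin it was assigned to (a solved variable)
    weights:    item name -> weight (a data field)
    capacities: list of bin capacities (a data field)
    """
    used = [0.0] * len(capacities)
    for item, bin_index in assignment.items():
        used[bin_index] += weights[item]
    return [u / c for u, c in zip(used, capacities)]


weights = {"a": 2, "b": 3, "c": 4}
capacities = [5, 8]
assignment = {"a": 0, "b": 0, "c": 1}  # stand-in for a solver result

utilization = utilization_by_bin(assignment, weights, capacities)
print(utilization)
```

The same function could be evaluated on an intermediate solution during the solve or on the final one, since it only needs the current variable values.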

- Callback: This feature offers extensive visibility into the solving process. The OptimFormulation solver supports an extensive range of callback functionalities not limited to the optimization phase. These callbacks can be activated throughout the entire lifecycle of the model, from before its construction to after the solution is extracted. This versatility allows for the implementation of powerful features such as model versioning, conditional model relaxation, and real-time performance monitoring, similar to functionalities seen in tools such as TensorBoard.

The code snippet below shows an example of including the callbacks during the solving process:
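A rough sketch of lifecycle callbacks, written in plain Python under stated assumptions, is shown here. The hook names (`before_build`, `after_build`, `on_new_incumbent`, `after_solve`) and the toy `solve` function are hypothetical and are not the C3.Optim callback interface; the "solver" simply scans a list of candidates.

```python
class Callback:
    """Base class with no-op hooks; subclasses override the ones they need."""

    def before_build(self, context): pass
    def after_build(self, context): pass
    def on_new_incumbent(self, context): pass
    def after_solve(self, context): pass


class HistoryLogger(Callback):
    """Records lifecycle events, e.g. for monitoring or model versioning."""

    def __init__(self):
        self.events = []

    def before_build(self, context):
        self.events.append("before_build")

    def on_new_incumbent(self, context):
        self.events.append(("incumbent", context["best_value"]))

    def after_solve(self, context):
        self.events.append("after_solve")


def solve(candidates, evaluate, callbacks):
    """Toy maximization over a candidate list that fires hooks at each phase."""
    context = {"best_value": None, "best_candidate": None}
    for cb in callbacks:
        cb.before_build(context)
    scored = [(c, evaluate(c)) for c in candidates]  # stands in for model construction
    for cb in callbacks:
        cb.after_build(context)
    for candidate, value in scored:
        if context["best_value"] is None or value > context["best_value"]:
            context["best_value"] = value
            context["best_candidate"] = candidate
            for cb in callbacks:
                cb.on_new_incumbent(context)
    for cb in callbacks:
        cb.after_solve(context)
    return context


logger = HistoryLogger()
result = solve([1, 4, 2, 6], lambda x: 10 - (x - 3) ** 2, [logger])
print(result["best_candidate"], logger.events)
```

Because the hooks fire before construction, during the search, and after extraction, the same pattern can carry logging, early stopping, or relaxation logic without touching the model definition.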

- Self-documentation with LaTeX:

OptimFormulation automatically renders your formulation into LaTeX-styled documentation. This feature helps authors write Markdown along with the definitions of the formulation components. Those math expressions can be directly included in the documentation, which increases maintainability. As shown below in Figure 4, the implementations of the formulation components, integrated with all dependencies, are rendered into structured documentation. This self-documentation ensures that optimization models are transparent, well documented, and easy to understand for future reference and maintenance.

Figure 4.

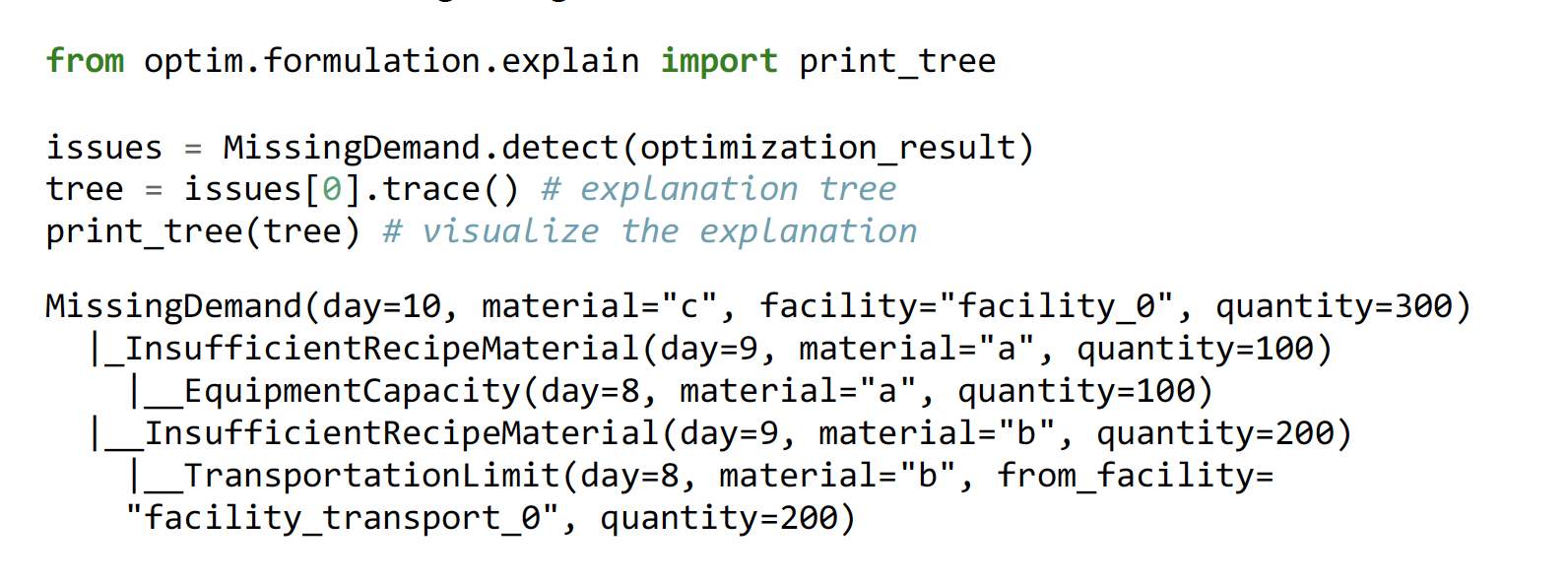

OptimFormulationprovides automatic self-documentation in LaTeX with dependency definitions. - Explainable OR model: The explainability functionality associated with the

OptimFormulationfeature can handle inquiries about the optimization results and helps with the overall maintainability of the work.The example in the code snippet below demonstrates a tree-structured visualization of the explainability feature, explaining why a particular product missed demand on a specific day in a manufacturing setting:

In this example, a missed-demand issue is found in the optimization result. It is caused by insufficient recipe materials “a” and “b,” and the root causes are equipment capacity and the transportation limit.
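The shape of such an explanation can be sketched as a small tree in plain Python. The `Cause` class and helper functions are hypothetical, and the pairing of each material with a particular root cause is assumed for illustration, since the text does not state which limit affects which material.

```python
from dataclasses import dataclass, field


@dataclass
class Cause:
    description: str
    children: list = field(default_factory=list)


def render(node, indent=0):
    """Return the tree as indented text lines, one per cause."""
    lines = ["  " * indent + "- " + node.description]
    for child in node.children:
        lines.extend(render(child, indent + 1))
    return lines


def root_causes(node):
    """Collect the leaves of the tree, i.e. the causes with no further explanation."""
    if not node.children:
        return [node.description]
    leaves = []
    for child in node.children:
        leaves.extend(root_causes(child))
    return leaves


# Assumed pairing of materials and limits, for illustration only.
explanation = Cause("Missed demand for the product on the given day", [
    Cause("Insufficient recipe material a", [Cause("Equipment capacity reached")]),
    Cause("Insufficient recipe material b", [Cause("Transportation limit reached")]),
])

print("\n".join(render(explanation)))
print(root_causes(explanation))
```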

OptimFlow

A productionized optimization workflow is rarely static, because each step within the workflow may be modified multiple times during its lifecycle. Additionally, beyond adjusting the optimization model itself, there are frequently additions or changes needed in pre-processing or post-processing steps. These constant updates make optimization workflows error-prone and difficult to maintain, leading to high maintenance and development costs for enterprises.

OptimFlow helps resolve these issues with an end-to-end, production-ready OR solution based on a standard workflow template. This ensures the implemented solution is easy to debug, maintain, and check for errors. Often, when it comes to production, an end-to-end solution involves numerous pre-processing and post-processing steps, such as data cleaning, transformation, and result analyses. Additionally, multi-stage solves may be necessary, requiring the optimizer to be called multiple times to achieve a complete solution.

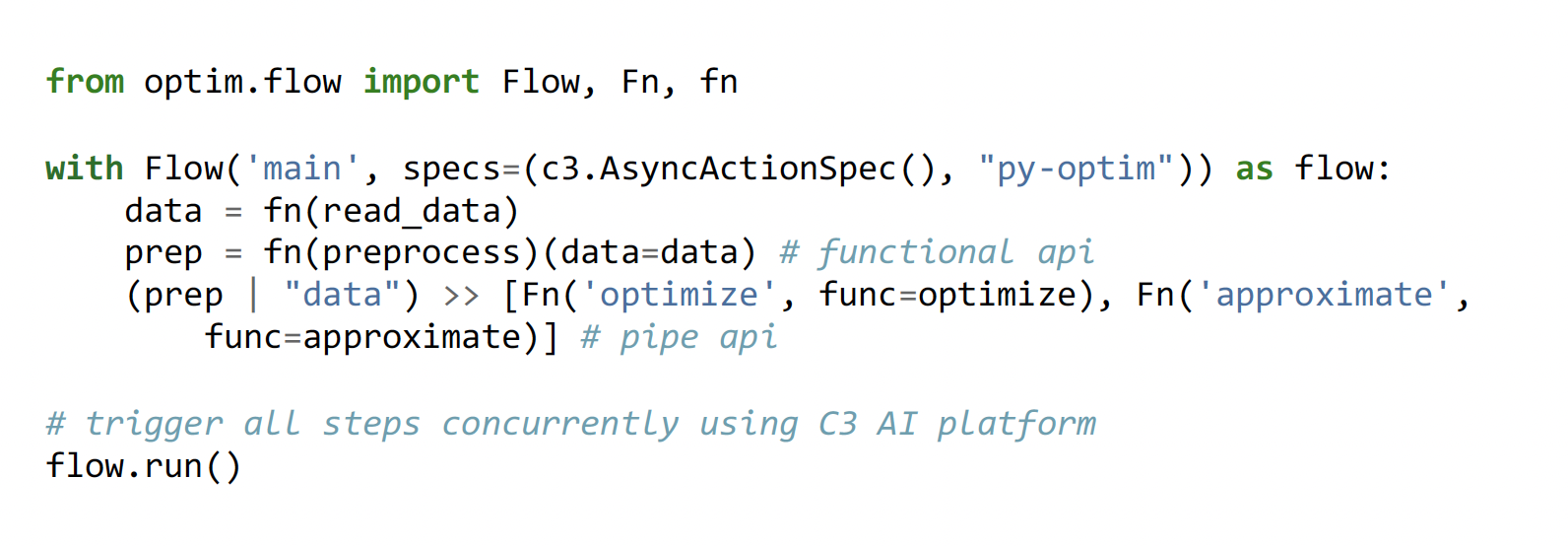

OptimFlow addresses these complexities by providing a structured framework that simplifies the integration of various steps involved in the optimization process. This includes handling data inputs, managing dependencies, executing the optimization models, and processing outputs. By standardizing the steps in the workflows, OptimFlow reduces the risk of errors and ensures that each step is executed correctly and efficiently. In the code snippet and Figure 5 below, we briefly illustrate how to wrap a data science workflow in OptimFlow and run it with a simple API call.

Figure 5. An example of dynamic visualization offered by OptimFlow.

Moreover, OptimFlow supports the orchestration of complex workflows that require conditional logic and iterative processes for step creation. The flexibility of the authoring experience, efficient computation distribution, and ease of maintenance and debugging mentioned above are critical components to ensure that OR solutions remain relevant and effective over time for the business.

Key OptimFlow features include:

- It allows dynamic graph construction and enables developers to define clear and maintainable optimization workflows that can be easily updated as business requirements evolve.

- The OptimFlow backend dispatches computations to the required hardware resources and supports asynchronous executions.

- OptimFlow provides native Python entry points to assist with debugging if any steps in the workflow encounter issues during execution.
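To illustrate dynamic graph construction in general terms, the sketch below builds a multi-stage workflow in plain Python: collect, validate, one solve step per time window, then concatenate. The `Flow` class, its `add_step` and `run` methods, and the step functions are hypothetical stand-ins rather than the OptimFlow API, and the sketch runs synchronously on one machine, so it does not show hardware dispatch or asynchronous execution.

```python
from graphlib import TopologicalSorter


class Flow:
    """Hypothetical workflow: named steps plus the upstream steps they consume."""

    def __init__(self):
        self._steps = {}  # step name -> (function, list of upstream step names)

    def add_step(self, name, func, upstream=()):
        self._steps[name] = (func, list(upstream))

    def run(self):
        """Execute every step after its upstream steps and return all results."""
        graph = {name: set(up) for name, (_, up) in self._steps.items()}
        results = {}
        for name in TopologicalSorter(graph).static_order():
            func, upstream = self._steps[name]
            results[name] = func(*[results[u] for u in upstream])
        return results


demand = [4, 9, 7, 3, 8, 6]  # toy input: demand per period
window = 2                   # periods handled by each solve stage

flow = Flow()
flow.add_step("collect", lambda: list(demand))
flow.add_step("validate", lambda rows: [r for r in rows if r >= 0], upstream=["collect"])

# Stages are created in a loop, so the graph adapts to the horizon length.
stage_names = []
for start in range(0, len(demand), window):
    name = f"solve_{start}"
    # Each stage "solves" its window by capping supply at 7 units per period.
    flow.add_step(
        name,
        lambda rows, s=start: [min(r, 7) for r in rows[s:s + window]],
        upstream=["validate"],
    )
    stage_names.append(name)

flow.add_step(
    "concatenate",
    lambda *parts: [x for part in parts for x in part],
    upstream=stage_names,
)

results = flow.run()
print(results["concatenate"])
```

Since every intermediate result is kept by step name, a failing or suspicious stage can be inspected directly in Python, which mirrors the debugging entry points described above.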

How to Get Started with C3.Optim

The C3.Optim package comes with detailed documentation, easy-to-follow tutorials, and interactive notebooks to help you get started quickly. Whether you’re starting a new project or integrating existing projects, these resources make it easy to learn and use the C3.Optim framework right away.

Our documentation covers everything from the basics to more advanced features, helping you to develop and integrate smoothly. The tutorials and notebooks provide practical examples to help you apply what you learn directly to your projects.

Figure 6. Documentation included in the C3.Optim package.

Why C3.Optim Matters for Businesses

The structured approach provided by features such as OptimFormulation and OptimFlow ensures that optimization models are not only robust and scalable but also maintainable and transparent. This reduces the burden on development teams, allowing them to focus on innovation and continuous improvement.

Several out-of-the-box C3 AI applications, including C3 AI Production Schedule Optimization and C3 AI Process Optimization, are already using and benefiting from the C3.Optim package.

The package can also support customized optimization applications. By using C3.Optim, those applications will see faster deployment times, fewer errors, and more reliable outcomes. Consequently, businesses will enhance decision-making processes — resulting in improved operational efficiency and cost savings, ultimately contributing to better overall performance and competitiveness in the market.

About the Authors

Larry Jin is a Senior Manager in the Data Science team at C3 AI, where he develops machine learning and optimization solutions across a variety of industries. He received his Ph.D. in Energy and Resource Engineering from Stanford University.

Josh Zhang is a Lead Data Scientist at C3 AI, where he develops algorithms and frameworks for multiple large-scale AI applications. He holds an M.S. in Mechanical Engineering from Duke University and a B.S. in Mechanical Engineering from Lafayette College. Before C3 AI, he worked on the development of a large-scale graph deep learning framework as a software engineer.

Thank you to Shivarjun Sarkar and Utsav Dutta in the C3 AI Data Science team for their contribution to this blog.