Decoding the language of time: AI that understands time series as seamlessly as text

By Sina Pakazad, Vice President, Data Science, C3 AI, Utsav Dutta, Data Scientist, Data Science, C3 AI, and Henrik Ohlsson, Chief Data Scientist, Data Science, C3 AI

In our daily lives, we interact with machines equipped with countless sensors, silently capturing the world around us. While humans often struggle to make sense of these readings, the readings themselves follow fundamental laws of physics, chemistry, society, and other sciences, much like a language whose grammar provides order and meaning. AI has revolutionized how we process text, leveraging self-supervised learning to create powerful models that adapt and generalize across tasks with minimal additional data. Yet when it comes to structured data such as sensor readings, AI remains largely task-specific, data-hungry, and rigid in its ability to generalize beyond its training.

The challenge ahead is clear: How do we bridge this gap? How do we develop AI that can learn from time series data as flexibly as it does from text? Unlocking this potential would redefine our relationship with machines, transforming them from passive tools into intelligent partners in discovery, automation, and innovation.

At C3 AI, we’ve spent the past 15 years working with time series data, supporting mission-critical workflows and decision making across a wide range of industries and use cases. This experience has given us unique insights and intuition into how time series data should be leveraged. We’ve used this expertise to rethink time series models, their development, and their role in broader AI-driven systems.

Our new approach fundamentally transforms how we view, analyze, interrogate, and interact with time series data. It enables deeper integration between time series systems and generative AI, providing an interface for agents to interact with time series data — unlocking new possibilities for generating predictions, decision intelligence, and automation.

This blog marks the beginning of a new series in which we will share our vision and latest innovations in this domain. These innovations are not only transformative; they also outperform, by a considerable margin, the state-of-the-art results set by specialized techniques across many time series tasks. In this first post, we lay the foundation by exploring the challenges, motivations, and breakthroughs that shape our approach.

Time Series Modeling Today

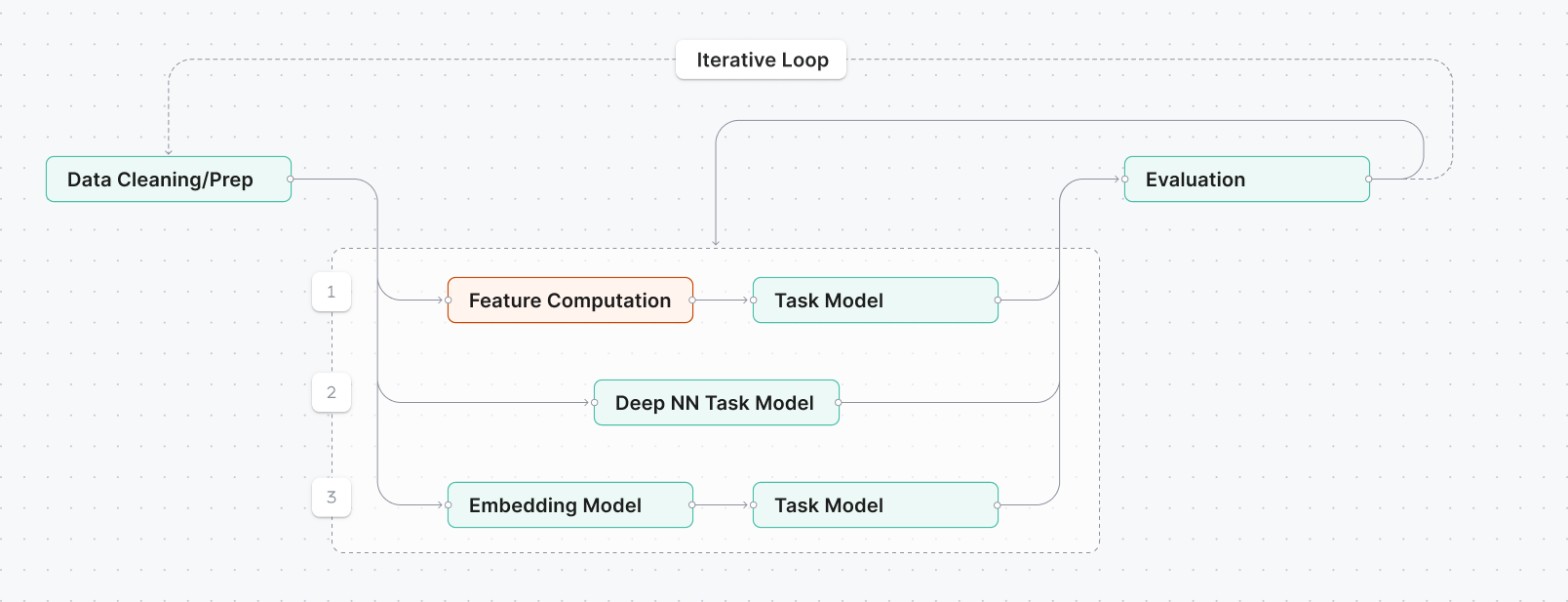

Time series models are designed to address various tasks, including forecasting, anomaly detection, predictive maintenance, classification, and imputation, among others. Traditionally, their development begins with data cleaning and preparation and then follows one of three primary approaches:

- Feature Engineering & Task Model Training: This approach involves manually engineering relevant features from the raw data, followed by training a relatively simple task-specific model on the transformed dataset.

- Deep Neural Networks (DNNs): Instead of explicit feature engineering, DNNs are trained directly on cleaned raw data, leveraging their ability to automatically extract relevant patterns.

- Embedding/Representation Learning & Task Model Training: This two-stage approach first involves learning data representations, typically in a self-supervised or unsupervised manner. The learned representations are then used to train a lightweight task-specific model.
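Here is a rough illustration of the first approach. The sketch hand-engineers a few summary features per window (mean, spread, extremes, and a least-squares trend) and fits a deliberately simple nearest-centroid classifier on them. The feature set, the classifier, and the toy windows are our assumptions for illustration, not a prescribed recipe.

```python
import math
import statistics


def window_features(window):
    """Hand-engineered features for one window (length >= 2) of a single sensor."""
    n = len(window)
    mean = statistics.fmean(window)
    # Least-squares slope of the values against the sample index 0..n-1.
    t_mean = (n - 1) / 2
    num = sum((t - t_mean) * (x - mean) for t, x in enumerate(window))
    den = sum((t - t_mean) ** 2 for t in range(n))
    slope = num / den
    return [mean, statistics.pstdev(window), min(window), max(window), slope]


class NearestCentroid:
    """A deliberately simple task model trained on the engineered features."""

    def fit(self, features, labels):
        groups = {}
        for f, y in zip(features, labels):
            groups.setdefault(y, []).append(f)
        # One centroid per label: the per-feature mean over that label's windows.
        self.centroids = {
            y: [statistics.fmean(col) for col in zip(*fs)] for y, fs in groups.items()
        }
        return self

    def predict(self, f):
        return min(self.centroids, key=lambda y: math.dist(f, self.centroids[y]))


# Toy labeled windows: flat readings vs. a steadily rising trend.
normal = [[1.0, 1.1, 0.9, 1.0, 1.05], [0.95, 1.0, 1.02, 0.98, 1.0]]
faulty = [[1.0, 1.5, 2.1, 2.6, 3.2], [0.9, 1.6, 2.0, 2.8, 3.3]]
X = [window_features(w) for w in normal + faulty]
y = ["normal"] * len(normal) + ["fault"] * len(faulty)
model = NearestCentroid().fit(X, y)
print(model.predict(window_features([1.0, 1.4, 2.0, 2.7, 3.1])))  # fault
```

Any change to the asset, its sensor set, or the task typically means revisiting `window_features` by hand. That is the kind of manual step highlighted in Figure 1.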

Figure 1: In the traditional method of model development, several steps require significant manual work, including feature computation (highlighted in orange above), making the process extremely manual and time-consuming.

Regardless of the chosen approach, models are iteratively refined based on task-specific evaluation metrics, as illustrated in Figure 1. While these approaches form the foundation of most AI applications in the industry, they face scalability challenges. Models are often trained from scratch for each new task or asset, limiting knowledge transfer and overall performance. Additionally, they are typically trained only with direct task examples, overlooking tangentially relevant data that could enhance learning.

These challenges become particularly evident when managing fleets of similar assets with slight variations in instrumentation. Traditional pipelines rigidly assume a uniform feature set, so each fleet member often needs its own development and deployment pipeline. This leads to significant overhead in management and maintenance, and it makes scaling especially difficult for assets or use cases with limited data.

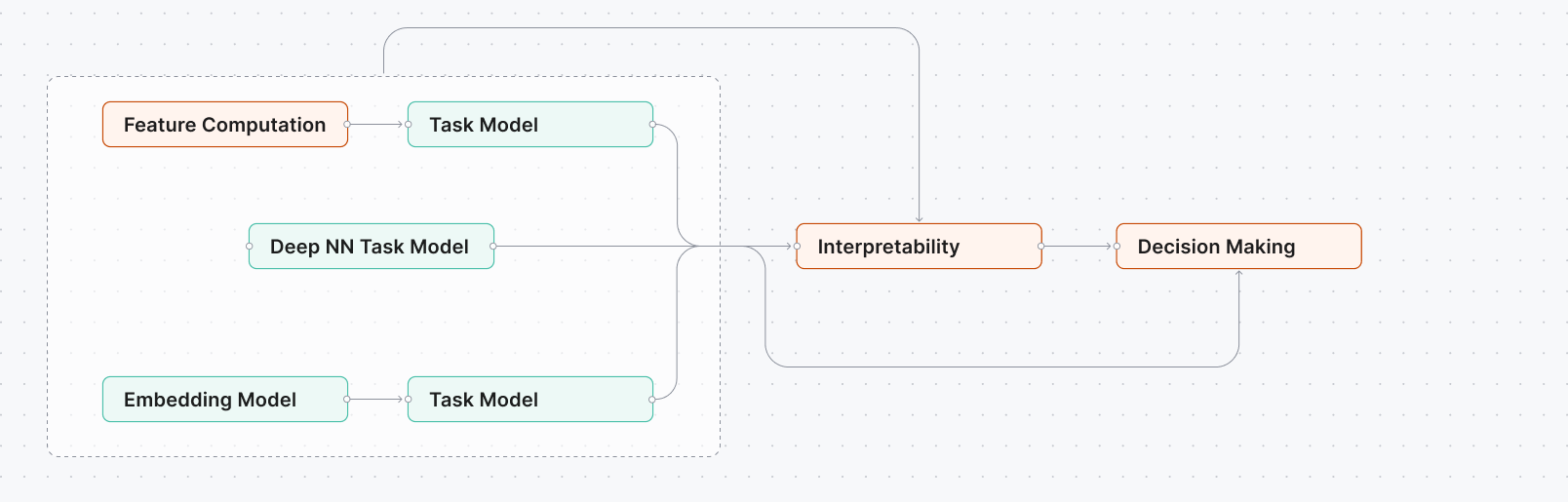

Figure 2: Model deployment, the stage after development, also requires intensive manual work for interpretability and decision making (highlighted above).

Once these models are developed, they are then deployed in production to guide real-world actions. Such actions include dispatching teams for anomaly investigations, determining team composition, and ensuring necessary equipment is available. However, for effective decision making, predictions alone are insufficient — they must be accompanied by trustworthy explainability or interpretability insights (see Figure 2 for an example deployment pipeline).

Most interpretability methods are applied post-hoc, leveraging model architecture and predictions to derive insights. While valuable, these approaches can be complex, finicky, and require significant manual effort to integrate into reliable decision making workflows, which worsens scalability challenges.

Inspired by the success of generative AI, we see an opportunity to redefine time series modeling and address the challenges outlined above. If AI could continuously learn and adapt to new use cases or assets with minimal additional data — much like generative models generalize across diverse tasks — it could drive significantly greater efficiency, scalability, and impact. One promising direction is foundation embedding models for time series data.

The Case for Foundation Embedding Models

Traditionally, embedding models are trained for a single dataset or use case, limiting their adaptability and the richness of their representations. However, by training a single embedding model on diverse datasets across different domains and use cases, we can:

- Unlock cross-learning, allowing insights from one asset or domain to benefit another.

- Enhance adaptability, enabling embeddings to generalize across varied asset classes, fleet types, and operational patterns.

- Capture richer representations, improving the robustness and performance of downstream task models.

- Extend the use of these models to use cases, assets, and customers with limited data.

This shift from narrow, domain-specific embeddings to broad, multi-domain embeddings opens the door to more powerful, flexible AI systems: ones that learn holistically and scale with far less per-use-case effort. We refer to such multi-domain embedding models as foundation embedding models.

While the idea of a foundation embedding model is powerful, training one presents significant challenges. Unlike traditional task-specific models, a foundation embedding model must be designed to handle a wide range of complexities, including:

- Vast differences in channel or sensor count across datasets from different domains.

- Variations in time scales and data quality, requiring robust normalization and alignment.

- The risk of negative transfer, where learning from one domain degrades performance in another.

- The risk of learning collapse, where representations stop being meaningful for some or all use cases.
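To make the first two challenges concrete, here is a minimal preprocessing sketch built on our own assumptions. Each irregularly sampled channel is linearly interpolated onto a shared time grid and normalized per channel. The channels are then padded to a fixed count, with a mask, so that sources with different sensor counts can share one batch. The sketch shows the shape of the problem only; it is not the normalization or alignment scheme used in our model.

```python
import statistics


def resample(values, timestamps, grid):
    """Linearly interpolate one channel (sorted timestamps, at least two samples) onto a grid."""
    out, j = [], 0
    for t in grid:  # grid is assumed sorted
        # Advance to the segment [timestamps[j], timestamps[j + 1]] that contains t.
        while j < len(timestamps) - 2 and timestamps[j + 1] < t:
            j += 1
        t0, t1 = timestamps[j], timestamps[j + 1]
        w = min(max((t - t0) / (t1 - t0), 0.0), 1.0)  # hold end values outside the range
        out.append(values[j] + w * (values[j + 1] - values[j]))
    return out


def zscore(values, eps=1e-8):
    """Per-channel normalization so sensors with different units become comparable."""
    mu, sd = statistics.fmean(values), statistics.pstdev(values)
    return [(v - mu) / (sd + eps) for v in values]


def to_fixed_channels(channels, max_channels, length):
    """Normalize and pad a variable number of channels; mask marks real (1) vs padded (0)."""
    data = [zscore(c) for c in channels[:max_channels]]
    mask = [1] * len(data)
    while len(data) < max_channels:
        data.append([0.0] * length)
        mask.append(0)
    return data, mask


grid = [0, 5, 10, 15, 20]                                    # shared grid, in seconds
temp = resample([20.0, 22.0, 21.0], [0, 10, 20], grid)       # sampled every 10 s
pres = resample([1.0, 1.2, 1.1, 1.3, 1.2], [0, 5, 10, 15, 20], grid)  # every 5 s
data, mask = to_fixed_channels([temp, pres], max_channels=4, length=len(grid))
print(temp)  # [20.0, 21.0, 22.0, 21.5, 21.0]
print(mask)  # [1, 1, 0, 0]
```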

These are among the main hurdles that have held back the development of foundation time series embedding models. Overcoming them demands careful architectural design, data processing and curation strategies, and optimization frameworks that keep the model general, adaptable, and performant.

Let’s turn to our new approach to time series modeling, and detail how foundation embedding models fit within that picture and how we can overcome these challenges.

A Novel Approach to Foundation Models for Time Series Data

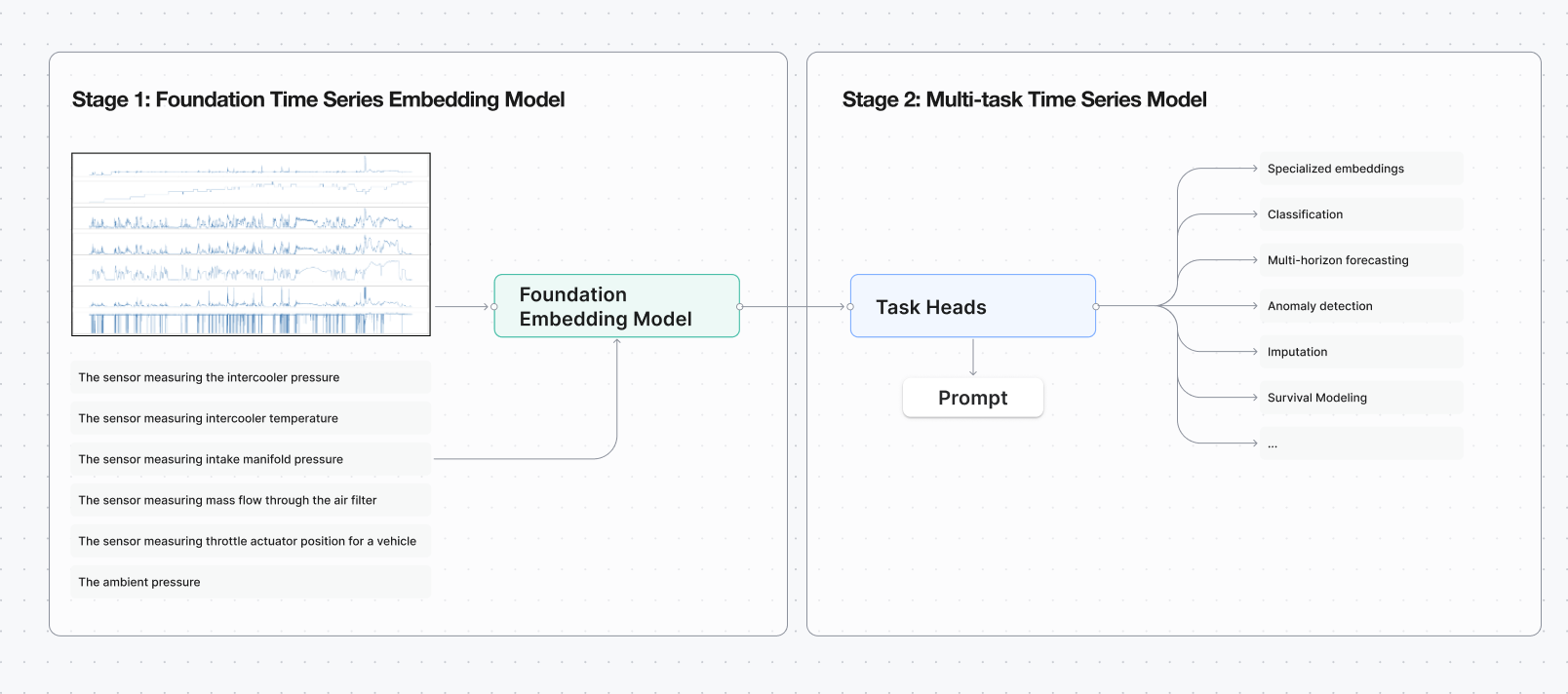

Figure 3: Our new approach to foundation time series models is a completely new way to pull insights from time series data, eliminating the manual work in previously standard model development and deployment processes.

Our approach to foundation models for time series unfolds in two stages. In the first stage, recognizing the immense potential of foundation time series embedding models, we developed an embedding model designed specifically for multivariate time series data. To overcome the key challenges outlined above, we introduced several critical architectural innovations that allow for:

- Granular Textual Context Integration: Just as a subject matter expert can reason across different members of a fleet of assets despite variations in sensor placement, our model uses textual descriptions of what each sensor measures to generalize insights across diverse datasets. Using this textual context, the model learns to balance distinguishing between domains and datasets against effective cross-learning among them. This helps prevent negative transfer and learning collapse (see Figure 3 for an overview).

- Invariance to Channel Ordering: The learned representations remain unchanged regardless of how the channels are ordered, ensuring robustness and consistency. Even if the sensor order is shuffled, the model produces the same result, reflecting the fact that the underlying process remains unchanged.

- Enhanced Model-Level Explainability: The architecture makes the learned representations more interpretable, improving trust and usability.
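The sketch below shows how textual context and channel-order invariance can coexist, under stated assumptions. `embed_text` is a hash-based stand-in for a real text encoder, and `embed_values` is a stand-in for a learned value encoder. Each channel token is conditioned on its sensor description with a FiLM-style scale and shift, which we chose for illustration. Channel tokens are then combined with a mean, which is a symmetric function, so shuffling the channels cannot change the result. This is not our architecture; it only demonstrates the invariance property.

```python
import hashlib
import statistics

DIM = 8  # embedding width (arbitrary for this sketch)


def embed_text(description):
    """Hypothetical stand-in for a text encoder: a deterministic vector from a hash."""
    digest = hashlib.sha256(description.encode("utf-8")).digest()
    return [(b - 127.5) / 127.5 for b in digest[:DIM]]


def embed_values(values):
    """Hypothetical stand-in for a learned value encoder: summary statistics, zero-padded."""
    stats = [statistics.fmean(values), statistics.pstdev(values), min(values), max(values)]
    return stats + [0.0] * (DIM - len(stats))


def embed_asset(channels):
    """channels: list of (sensor description, values) pairs, in any order."""
    tokens = []
    for description, values in channels:
        text_vec, value_vec = embed_text(description), embed_values(values)
        # FiLM-style conditioning: scale and shift value features by the text embedding.
        tokens.append([v * (1 + t) + t for t, v in zip(text_vec, value_vec)])
    # Mean pooling over channels is symmetric, hence invariant to channel order.
    return [statistics.fmean(dim) for dim in zip(*tokens)]


channels = [
    ("bearing temperature, degC", [61.0, 62.5, 64.0]),
    ("shaft vibration, mm/s", [2.1, 2.4, 2.2]),
    ("inlet pressure, bar", [5.0, 5.1, 4.9]),
]
a = embed_asset(channels)
b = embed_asset(list(reversed(channels)))
print(all(abs(x - y) < 1e-12 for x, y in zip(a, b)))  # True
```

Because the description enters every channel token, two sensors with identical readings but different descriptions produce different embeddings. This is one way a model can tell domains apart while still pooling across them.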

We train our proposed architecture using a self-supervised learning approach, incorporating multiple novel strategies, including:

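- Joint Embedding Predictive Architecture (JEPA): A method, largely unexplored in time series representation learning, that predicts the representations of held-out parts of the input in embedding space rather than reconstructing raw values, which simplifies the training process.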

- Advanced Data Processing & Augmentation: Self-supervised pretext tasks and data perturbations, ensuring the learned representations are informative for downstream tasks while remaining robust to real-world variations and imperfections of time series data.

- Custom Loss Function: A newly designed loss function integrates self-supervised objectives with novel regularization terms.
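Below is a minimal sketch of a JEPA-style objective. It assumes linear encoders and a toy split of each window into a visible context half and a held-out target half. A context encoder embeds the visible half, and a predictor maps that embedding to a guess of the target encoder's embedding of the held-out half. The target encoder is an exponential moving average (EMA) of the context encoder, and the loss is computed in embedding space. The variance hinge term is a VICReg-style regularizer we swapped in to show how an anti-collapse penalty can sit alongside the self-supervised objective. Our actual loss and regularizers are not shown, and gradients and the optimizer step are omitted.

```python
import random
import statistics


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def ema_update(target_W, context_W, decay=0.99):
    """The target encoder slowly tracks the context encoder (it is never trained directly)."""
    return [[decay * t + (1 - decay) * c for t, c in zip(t_row, c_row)]
            for t_row, c_row in zip(target_W, context_W)]


def jepa_loss(batch, context_W, target_W, predictor_W, split, var_weight=1.0, gamma=1.0):
    preds, targets, ctx = [], [], []
    for series in batch:
        context, held_out = series[:split], series[split:]   # visible vs. held-out part
        z_ctx = matvec(context_W, context)
        ctx.append(z_ctx)
        targets.append(matvec(target_W, held_out))           # treated as a constant target
        preds.append(matvec(predictor_W, z_ctx))              # predicted target embedding
    # Self-supervised term: squared error in embedding space, not on raw values.
    pred_loss = statistics.fmean(
        (p - t) ** 2 for pv, tv in zip(preds, targets) for p, t in zip(pv, tv))
    # Regularizer: penalize embedding dimensions whose batch std falls below gamma.
    var_loss = statistics.fmean(max(0.0, gamma - statistics.pstdev(dim)) for dim in zip(*ctx))
    return pred_loss + var_weight * var_loss


def random_matrix(rows, cols, scale=0.3):
    return [[random.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
d, split = 4, 6                           # embedding size; both halves have length `split`
context_W = random_matrix(d, split)
target_W = [row[:] for row in context_W]  # target encoder starts as a copy
predictor_W = random_matrix(d, d)
batch = [[random.gauss(0.0, 1.0) for _ in range(2 * split)] for _ in range(8)]
print(jepa_loss(batch, context_W, target_W, predictor_W, split))
# ...after an optimizer step on context_W and predictor_W:
target_W = ema_update(target_W, context_W)
```

Consider a collapsed encoder that maps every window to the same vector. Its prediction error is trivially zero, but it pays the full variance penalty. This is why a regularizer of this kind is often paired with an embedding-space prediction loss.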

These innovations have enabled us to develop a foundation embedding model for multivariate time series that surpasses the state-of-the-art performance set by specialized models on a variety of downstream tasks.

In the second stage, we train a multi-head model on top of this embedding model using multi-task supervised learning (see Figure 3 for an overview). This further improves task model performance by enabling learning not only across datasets but also across tasks.
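The second stage can be sketched as follows; again, this is an illustration under assumptions rather than our implementation. A shared embedding `z`, produced by the frozen embedding model, feeds one lightweight head per task, and the training loss is a weighted sum of per-task losses. Each example contributes only to the heads whose labels it carries, which is what lets datasets labeled for different tasks be pooled. The task names, heads, and weights are hypothetical, and gradient updates are omitted.

```python
import math


def linear(params, z):
    return sum(w * zi for w, zi in zip(params["w"], z)) + params["b"]


def forecast_head(params, z):
    return linear(params, z)                            # regression output


def anomaly_head(params, z):
    return 1.0 / (1.0 + math.exp(-linear(params, z)))  # probability of anomaly


HEADS = {"forecast": forecast_head, "anomaly": anomaly_head}


def task_loss(task, pred, label, eps=1e-12):
    if task == "forecast":
        return (pred - label) ** 2                      # squared error
    return -(label * math.log(pred + eps) + (1 - label) * math.log(1 - pred + eps))  # BCE


def multitask_loss(examples, params, weights):
    """examples: (embedding, {task: label}) pairs; each may be labeled for only some tasks."""
    totals = {task: 0.0 for task in HEADS}
    counts = {task: 0 for task in HEADS}
    for z, labels in examples:
        for task, label in labels.items():
            totals[task] += task_loss(task, HEADS[task](params[task], z), label)
            counts[task] += 1
    # Average within each task, then take the weighted sum over tasks that have labels.
    return sum(weights[t] * totals[t] / counts[t] for t in HEADS if counts[t])


params = {"forecast": {"w": [0.5, -0.2], "b": 0.1}, "anomaly": {"w": [1.0, 1.0], "b": 0.0}}
examples = [
    ([1.0, 0.0], {"forecast": 0.6}),   # from a forecasting dataset
    ([0.0, 0.0], {"anomaly": 1}),      # from an anomaly-labeled dataset
]
print(multitask_loss(examples, params, {"forecast": 1.0, "anomaly": 1.0}))
```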

A New Way for Time Series Modeling

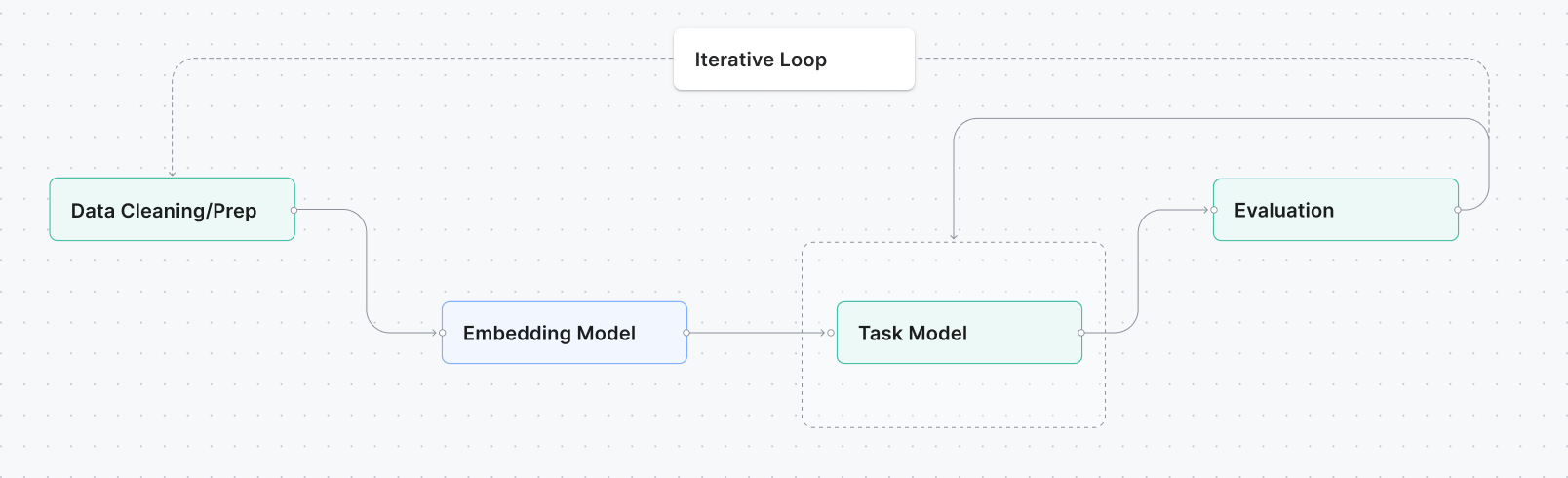

Figure 4: The new, more efficient time series model development process.

The blocks with a blue hue mark components that remain frozen during task model training.

Figure 4 illustrates how our foundation embedding model seamlessly integrates into the task model development process (compare with Figure 1). The blue embedding model block signifies that the model remains frozen during task model training: it is not modified but instead serves as a fixed representation layer. This frozen model can be either:

- The base foundation model, trained on diverse time series data, or

- A fine-tuned version, e.g., specialized for a specific fleet of assets or an organization’s entire time series corpus.

Importantly, the embedding model sits outside the dashed box, which encloses the components subject to iterative refinement. This separation streamlines the model development cycle, making iterative improvements faster, easier, and more cost-effective.
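The effect of keeping the embedding model outside the refinement loop can be sketched with a cache. Each window is embedded once, and only the cheap task model is revisited while tuning; here that model is a k-nearest-neighbor classifier, which is our choice for illustration. `frozen_embed` is a hypothetical stand-in for the foundation embedding model, and the windows and labels are toy data.

```python
import math
from collections import Counter
from functools import lru_cache


@lru_cache(maxsize=None)
def frozen_embed(window):
    """Hypothetical stand-in for the frozen embedding model (windows must be tuples).
    Its parameters never change during task-model iteration, so results can be cached."""
    return (sum(window) / len(window), max(window) - min(window))


def knn_predict(train, z, k):
    nearest = sorted(train, key=lambda item: math.dist(item[0], z))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train, val, k):
    return sum(knn_predict(train, z, k) == y for z, y in val) / len(val)


train_windows = [((1.0, 1.0, 1.1), "normal"), ((0.9, 1.0, 1.0), "normal"),
                 ((1.0, 2.0, 3.0), "fault"), ((1.2, 2.5, 3.1), "fault")]
val_windows = [((1.0, 1.1, 1.0), "normal"), ((0.8, 1.9, 3.3), "fault")]

# Embed once, outside the refinement loop.
train = [(frozen_embed(w), y) for w, y in train_windows]
val = [(frozen_embed(w), y) for w, y in val_windows]

# Iterate only on the task model; the cached embeddings are reused for every candidate.
scores = {k: accuracy(train, val, k) for k in (1, 3)}
print(scores, frozen_embed.cache_info().misses)  # {1: 1.0, 3: 1.0} 6
```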

Beyond this, the approach enables data pooling across assets and domains and solves key scaling challenges in task model development. For instance, consider building a task model for a fleet of assets with different instrumentation suites. Since all assets share the same embedding model for feature extraction, we can:

- Aggregate labeled data across assets, allowing a single model to be trained for the task with more data.

- Leverage data-rich assets and domains to enhance learning for those with limited labeled data.

- Eliminate the need for asset-specific pipelines, as the embedding model unifies development under a single framework.
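Below is a toy sketch of pooling labeled data across assets with different instrumentation. `shared_embed` is a hypothetical stand-in for the shared embedding model. It averages scale-free per-channel features so that any sensor set maps to a vector of the same length; a real model would learn a far more meaningful mapping, for instance by using sensor descriptions. The asset data and labels are made up.

```python
import statistics


def shared_embed(channels):
    """Hypothetical stand-in for the shared embedding model: maps any set of named
    channels to the same fixed-length vector using scale-free per-channel features
    (assumes nonzero readings), averaged over channels."""
    per_channel = [
        (statistics.pstdev(v) / abs(statistics.fmean(v)), (v[-1] - v[0]) / abs(v[0]))
        for v in channels.values()
    ]
    return tuple(statistics.fmean(col) for col in zip(*per_channel))


# Labeled windows from two assets with different instrumentation suites (toy data).
asset_a = [
    ({"temp": [60, 61, 62], "vib": [2.0, 2.1, 2.0], "pressure": [5, 5, 5]}, "normal"),
    ({"temp": [60, 75, 90], "vib": [2.0, 4.0, 6.0], "pressure": [5, 6, 7]}, "fault"),
]
asset_b = [
    ({"temp": [58, 59, 60], "vib": [1.9, 2.0, 2.1]}, "normal"),  # no pressure sensor
]

# One pooled training set for a single task model instead of one pipeline per asset.
pooled = [(shared_embed(channels), label) for channels, label in asset_a + asset_b]
print([len(z) for z, _ in pooled])  # [2, 2, 2]
```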

By acting as a shared representation layer, the foundation embedding model not only simplifies deployment but also enhances generalization and scalability across different assets, fleets, and domains.

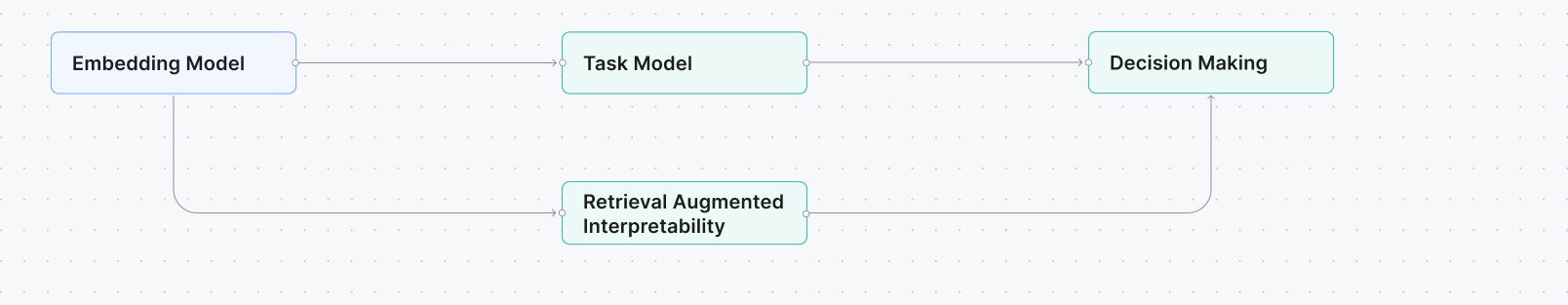

Figure 5: With the new time series embedding model, we also eliminate the manually intensive parts of the deployment process.

The introduction of foundation embedding models also significantly reshapes the deployment pipeline for task models. Figure 5 illustrates the new deployment pipeline, which can be compared directly with Figure 2 to highlight the key structural changes.

One major update is the replacement of the interpretability module from Figure 2 with a new component designed to provide richer explainability insights. We call this new module retrieval-augmented interpretability (RAI); it directly leverages our embedding model to:

- Tap into past data and corresponding, previously underutilized information sources to enhance explainability. Similar to how retrieval-augmented generation (RAG) retrieves relevant information to generate responses, we can now retrieve relevant periods and/or events from the past.

- Go beyond standard model interpretation, offering prescriptive recommendations based on historical experiences.

- Identify regime shifts and domain changes, enabling a new, agile decision-making paradigm that delivers not just data points but readily interpretable insights. More on this in our next post.

- Facilitate more effective interactions with subject matter experts, bridging AI insights with human expertise. Specifically, this process improves the collection and curation of relevant insights and information from past events and experts. Once this collection and curation is complete, we can apply generative AI on top of those insights, giving users easy access to that information through a simple chat interface.
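One simple way to flag possible regime shifts with an embedding model is sketched below. It is a generic technique, not the method we will describe in the next post. The idea is to compare the centroid of recent window embeddings with that of a reference period using cosine distance. The threshold and the toy embeddings are assumptions.

```python
import math
import statistics


def centroid(embeddings):
    return [statistics.fmean(dim) for dim in zip(*embeddings)]


def cosine_distance(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return 1.0 - dot / (math.hypot(*a) * math.hypot(*b))


def regime_shift(reference, recent, threshold=0.2):
    """Flag a possible shift when recent embeddings drift away from a reference period."""
    return cosine_distance(centroid(reference), centroid(recent)) > threshold


reference = [[1.0, 0.1], [0.9, 0.0], [1.1, -0.1]]   # embeddings from a known-good period
print(regime_shift(reference, [[1.0, 0.05], [0.95, -0.05]]))  # False
print(regime_shift(reference, [[0.1, 1.0], [0.0, 0.9]]))      # True
```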

This innovative approach to delivering insights unlocks new ways for users to interact with their data and the resulting insights. For example, in predictive maintenance, a reliability engineer can ask: “When have we observed similar patterns for assets in the past?” Then, they can replay what happened next, explore related notes and lab results, and analyze historical trends.
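The reliability engineer's question maps naturally onto nearest-neighbor search over embeddings. The sketch below assumes past windows have already been embedded and linked to notes; all entries are made-up examples. The current window's embedding is compared with historical ones by cosine similarity, and the closest past periods are returned with their notes. In a RAG-style setup, those notes could then be handed to a generative model; that step is not shown.

```python
import math


def cosine(a, b):
    return sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))


def retrieve(index, query, k=2):
    """Return the k past periods whose embeddings are most similar to the query."""
    return sorted(index, key=lambda entry: cosine(entry["embedding"], query), reverse=True)[:k]


history = [  # made-up entries: embedding of a past window plus what happened next
    {"period": "2022-03, pump 7", "embedding": [0.9, 0.1, 0.0],
     "note": "seal leak found at inspection"},
    {"period": "2022-11, pump 2", "embedding": [0.0, 1.0, 0.2],
     "note": "no issues recorded"},
    {"period": "2023-06, pump 4", "embedding": [0.8, 0.2, 0.1],
     "note": "bearing replaced after vibration alarm"},
]
for match in retrieve(history, query=[1.0, 0.1, 0.0]):
    print(match["period"], "->", match["note"])
```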

By then leveraging generative AI and specialized agents on top of that data, users can quickly and efficiently extract insights from time series data through natural language. Similarly, in demand forecasting, predictions can be enriched by linking them to customer reviews or macro- and microeconomic conditions from comparable periods in the past.

This is not limited to predictive maintenance and equipment sensor data: in healthcare, doctors could ask questions about a patient’s medical history, and in finance, analysts could explore trends in market behavior to anticipate risks and opportunities.

With this new interpretability module, decision making becomes more intuitive, and workflows become streamlined, automated, and intelligent.

In our upcoming posts, we’ll dive deeper into:

- Novel systems built on our foundation embedding model, redefining explainability and prescriptive insights.

- Advanced techniques for detecting and addressing domain shifts and regime changes.

- New ways to interact with subject matter experts, enhancing decision making.

- Key innovations that made our foundation embedding model possible, from self-supervised learning to robust optimization.

- Transforming task model development, leveraging multi-task learning and bridging the gap to non-parametric modeling.

About the Authors

Henrik Ohlsson is the Vice President and Chief Data Scientist at C3 AI. Before joining C3 AI, he held academic positions at the University of California, Berkeley, the University of Cambridge, and Linköping University. With over 70 published papers and 30 issued patents, he is a recognized leader in the field of artificial intelligence, with a broad interest in AI and its industrial applications. He is also a member of the World Economic Forum, where he contributes to discussions on the global impact of AI and emerging technologies.

Sina Khoshfetrat Pakazad is the Vice President of Data Science at C3 AI, where he leads research and development in Generative AI, machine learning, and optimization. He holds a Ph.D. in Automatic Control from Linköping University and an M.Sc. in Systems, Control, and Mechatronics from Chalmers University of Technology. With experience at Ericsson, Waymo, and C3 AI, Dr. Pakazad has contributed to AI-driven solutions across healthcare, finance, automotive, robotics, aerospace, telecommunications, supply chain optimization, and process industries. His recent research has been published in leading venues such as ICLR and EMNLP, focusing on multimodal data generation, instruction-following and decoding from large language models, and distributed optimization. Beyond academia, he has co-invented patents on enterprise AI architectures and predictive modeling for manufacturing processes, reflecting his impact on both theoretical advancements and real-world AI applications.

Utsav Dutta is a Data Scientist at C3 AI and holds a bachelor’s degree in mechanical engineering from IIT Madras and a master’s degree in artificial intelligence from Carnegie Mellon University. He has a strong background in applied statistics and machine learning and has authored publications at IEEE and AAAI conferences on machine learning in neuroscience and reinforcement learning for supply chain optimization. Additionally, he has co-authored several patents with C3 AI that expand its product offerings and further integrate generative AI into core business processes.