Best Practices in Prototyping

Getting Started by Visualizing Data

It is always tempting to dive straight into prototyping and algorithm development. But taking some time at the outset to understand the data pays off: teams identify issues and subtleties early, which leads to more nuanced insights and more relevant results.

The best way to know whether data contain useful and relevant information for solving a problem with machine learning is to visualize and study the data. Where possible, work with subject matter experts (SMEs) who are familiar with the problem statement and the business context while visualizing and exploring the relevant data sets.

In a customer attrition problem, for example, a business SME may be intimately familiar with aggregate patterns such as higher attrition caused by pricing changes and lower attrition among customers who use a particularly sticky product. If these known patterns appear in the data, they increase confidence in the overall data set; more importantly, if they do not, the discrepancy helps data scientists pinpoint data issues to address early in the project.
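A quick numeric check can complement the conversation with SMEs: compute the outcome rate for each segment and confirm that the ordering matches what the business expects. The sketch below is a minimal illustration in plain Python; the helper `attrition_rate_by_segment`, the field names `price_change` and `churned`, and the toy records are all hypothetical.

```python
from collections import defaultdict


def attrition_rate_by_segment(records, segment_key, churn_key="churned"):
    """Return {segment value: fraction of customers in that segment who churned}."""
    totals = defaultdict(int)
    churned = defaultdict(int)
    for row in records:
        seg = row[segment_key]
        totals[seg] += 1
        churned[seg] += int(bool(row[churn_key]))
    return {seg: churned[seg] / totals[seg] for seg in totals}


# Hypothetical toy records; field names are illustrative assumptions.
customers = [
    {"price_change": True, "churned": True},
    {"price_change": True, "churned": True},
    {"price_change": True, "churned": False},
    {"price_change": False, "churned": False},
    {"price_change": False, "churned": True},
    {"price_change": False, "churned": False},
    {"price_change": False, "churned": False},
]

rates = attrition_rate_by_segment(customers, "price_change")

# The SME expects higher attrition after a price change; flag the data if it disagrees.
if rates[True] <= rates[False]:
    print("Expected pattern not found: review data quality before modeling")
```

If the check fails on real data, that is a prompt to investigate labeling, joins, or time windows before any modeling work, not proof that the SME is wrong.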

We typically recommend a range of visualizations to understand data issues and develop an intuition for the data set. These may include aggregate distributions, charts of data trends over time, plots of data subsets, and summary statistics such as gaps, missing values, and mean and median values.
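The summary statistics in that list can be computed before any plotting, which helps decide what to chart first. The following is a minimal standard-library sketch, assuming missing values are stored as `None` and readings are expected at a fixed interval; `summarize`, `find_gaps`, and the sample values are hypothetical.

```python
import statistics
from datetime import datetime, timedelta


def summarize(values):
    """Count, missing count, mean, and median for a column where None marks a missing value."""
    present = [v for v in values if v is not None]
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "mean": statistics.mean(present) if present else None,
        "median": statistics.median(present) if present else None,
    }


def find_gaps(timestamps, expected_step):
    """Return (start, end) pairs where consecutive timestamps are further apart than expected_step."""
    ordered = sorted(timestamps)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > expected_step]


# Hypothetical monthly spend column with two missing entries.
spend_summary = summarize([120.0, None, 95.5, 130.0, None, 101.0])

# Hypothetical hourly readings with the 03:00 and 04:00 samples absent.
readings = [datetime(2024, 1, 1, h) for h in (0, 1, 2, 5, 6)]
gaps = find_gaps(readings, expected_step=timedelta(hours=1))
```

Reporting missing counts next to the mean and median makes it obvious when a summary rests on a thin or uneven subset of the data.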

For problems suited to supervised learning, it helps to view the data with clear labels for each defined class, for example customers who have left versus current customers.

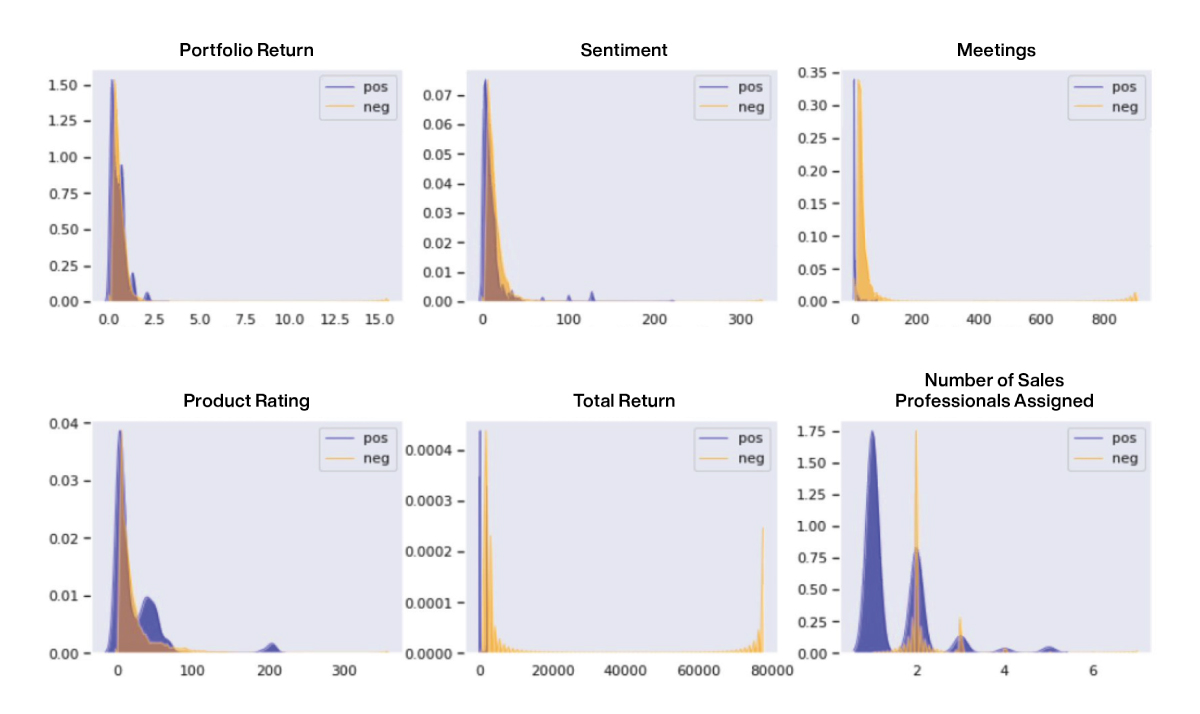

When examining data distributions across multiple classes, it is helpful to confirm that the classes actually differ. If no differences, however minor, are apparent during inspection and manual analysis, AI/ML systems are unlikely to discover them. Figure 29 shows an example of plotting data distributions for the positive (blue) and negative (orange) classes across different features to determine whether the two classes differ.
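Alongside the plots, a single number per feature can help rank which overlaid histograms deserve a closer look. The sketch below swaps in one common measure, histogram intersection on shared bins (1.0 means the class distributions are identical at that resolution, 0.0 means they do not overlap); it is an illustration, not the method behind Figure 29. The helpers, the feature names `support_calls` and `tenure_months`, and the values are hypothetical.

```python
def normalized_histogram(values, lo, hi, bins):
    """Fraction of values falling into each of `bins` equal-width bins spanning [lo, hi]."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        idx = min(int((v - lo) / width), bins - 1)  # values equal to hi go in the last bin
        counts[idx] += 1
    return [c / len(values) for c in counts]


def class_overlap(pos, neg, bins=10):
    """Histogram intersection of two classes on shared bins: 1.0 = identical, 0.0 = disjoint."""
    lo, hi = min(pos + neg), max(pos + neg)
    if lo == hi:
        return 1.0  # a constant feature cannot separate the classes
    p = normalized_histogram(pos, lo, hi, bins)
    q = normalized_histogram(neg, lo, hi, bins)
    return sum(min(a, b) for a, b in zip(p, q))


# Hypothetical per-class values: (customers who left, current customers).
features = {
    "support_calls": ([5, 6, 7, 8], [0, 1, 1, 2]),
    "tenure_months": ([3, 12, 24, 36], [3, 12, 24, 36]),
}
overlaps = {name: class_overlap(pos, neg) for name, (pos, neg) in features.items()}
```

Here `support_calls` separates the toy classes completely while `tenure_months` does not separate them at all. The measure looks at one feature at a time and depends on the bin count, so it complements the visual inspection rather than replacing it.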

In customer attrition problems, customers who have left may account for a disproportionate share of inbound call-center requests. Spotting this during data visualization may lead data scientists to explore customer engagement features more deeply in their experiments.
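"Disproportionate" can be made concrete by comparing a group's share of call-center requests with its share of the customer base; a ratio well above 1.0 points to a candidate engagement feature. A minimal sketch, assuming one list entry per inbound request keyed by customer ID; `share_ratio` and the sample data are hypothetical.

```python
def share_ratio(customers, requests, group):
    """Ratio of a group's share of inbound requests to its share of customers.

    customers: dict mapping customer_id -> group label
    requests: list of customer_ids, one entry per inbound call-center request
    """
    group_customers = sum(1 for g in customers.values() if g == group)
    group_requests = sum(1 for cid in requests if customers[cid] == group)
    if group_customers == 0 or not requests:
        raise ValueError("group has no customers or there are no requests")
    # (group_requests / len(requests)) / (group_customers / len(customers)), rearranged
    # so that integer counts are multiplied before the single division.
    return (group_requests * len(customers)) / (len(requests) * group_customers)


# Hypothetical data: 2 of 10 customers left, and they made 5 of the 8 requests.
customers = {f"c{i}": ("left" if i <= 2 else "active") for i in range(1, 11)}
requests = ["c1", "c1", "c1", "c2", "c2", "c3", "c4", "c5"]

ratio = share_ratio(customers, requests, "left")
```

In this toy data, customers who left make up 20% of the base but 62.5% of requests, a ratio of about 3.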

Figure 29: Understanding the impact of individual features on outcomes or class distributions can offer significant insight into the learning problem

We also recommend that teams physically print data sets on paper so they can visualize them and mark up observations and hypotheses. Data trends are often hard to absorb on a screen. Printed copies lend themselves to deep analysis and collaboration, especially when displayed prominently where team members can interact with them, for instance on the walls of a team room. Wall space and printed paper are more effective than even a very large projector. Figure 30 shows one of our conference room walls covered in data visualizations.

Figure 30: Conference room walls covered in data visualizations to facilitate understanding and collaboration during model development

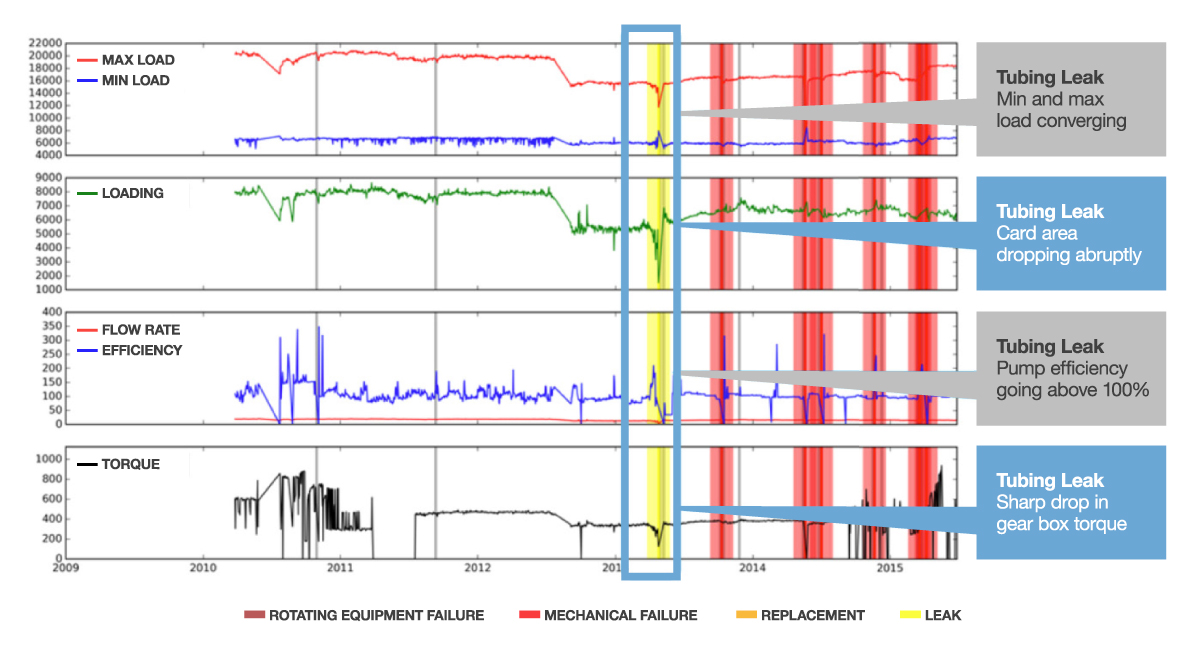

Figure 31, from an AI-based predictive maintenance example, shows how aligning individual time series signals makes it possible to scan them rapidly for changes that occur before failures.

Figure 31: Example of a time series data visualization exercise as part of an AI-based predictive maintenance prototype.

By visualizing these data, the data scientists identified subtle patterns that algorithms could later learn.
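One possible reading of "aligning" signals, assumed here rather than taken from the prototype, is to cut a fixed window of samples before each recorded failure so that every segment shares a time-to-failure axis. Stacking or averaging those segments then makes pre-failure changes stand out. The helpers `align_before_failures` and `mean_profile`, the window length, and the sample signal are hypothetical.

```python
def align_before_failures(signal, failure_indices, window):
    """Return the `window` samples immediately preceding each failure.

    Position k in every segment lies (window - k) samples before its failure,
    so segments from different failures line up on a common time-to-failure axis.
    """
    segments = []
    for f in failure_indices:
        if f >= window:  # skip failures too early in the record to have a full window
            segments.append(signal[f - window:f])
    return segments


def mean_profile(segments):
    """Average the aligned segments position by position."""
    return [sum(col) / len(col) for col in zip(*segments)]


# Hypothetical vibration signal: flat at 1.0, rising in the samples before failures at 10 and 20.
signal = [1.0] * 25
signal[7:10] = [1.5, 2.0, 3.0]
signal[17:20] = [1.5, 2.5, 3.0]

segments = align_before_failures(signal, failure_indices=[2, 10, 20], window=5)
profile = mean_profile(segments)
```

Plotting each segment on the same axes, rather than only the average, keeps failure-to-failure variation visible, which is often where the subtle patterns show up.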