By Sina Pakazad, Vice President, Data Science, C3 AI; Yang Song, Lead Data Scientist, Data Science, C3 AI; Anoushka Vyas, Senior Data Scientist, Data Science, C3 AI; Peter Wei, Senior Data Scientist, Data Science, C3 AI; and Henrik Ohlsson, Chief Data Scientist, Data Science, C3 AI

Enterprises make thousands of operational decisions every day — from scheduling staff to allocating resources — each constrained by time, data complexity, and human bandwidth. Traditional optimization workflows, while powerful, are slow, fragmented, and heavily dependent on iterative back-and-forth between domain experts and technical teams.

Alchemist reimagines this process. An agentic AI system for decision optimization, Alchemist fuses autonomous agents with natural language interaction, enabling subject matter experts to define, simulate, and optimize complex decision systems directly, without waiting on development cycles. In essence, Alchemist transforms expert intuition into executable intelligence, empowering organizations to move from ideas to validated solutions in minutes rather than weeks.

Alchemist’s modular, agent-based architecture streamlines the traditionally slow, handoff-heavy optimization workflow. Each specialized agent (the decision process extractor, simulator creator, solver recommender, and optimization formulator) manages a distinct stage of the workflow, collaborating to produce transparent and verifiable solutions. This design replaces weeks of coordination between subject matter experts (SMEs) and developers with minutes of interactive iteration. Alchemist supports both human-in-the-loop and fully autonomous modes, and in autonomous mode it exceeds the reported state-of-the-art accuracy on eight of nine standard optimization benchmarks, changing how enterprises convert operational expertise into executable, data-driven decision systems.

Challenges in Decision Optimization

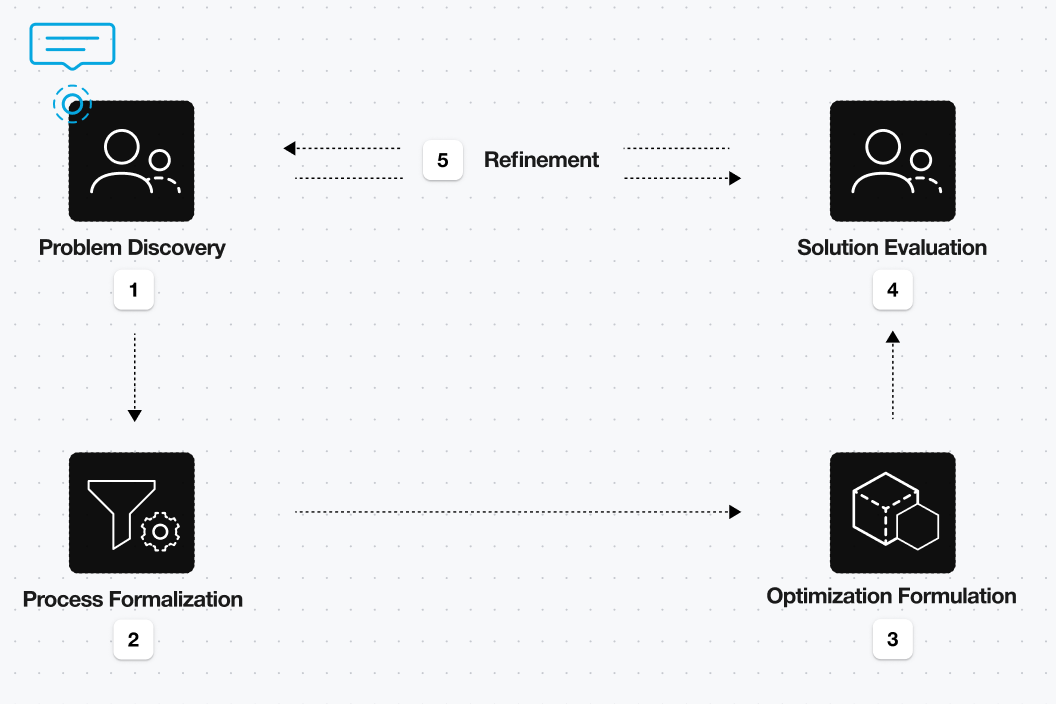

In helping customers improve their decision-making processes, we consistently observe the following familiar, iterative patterns of collaboration:

- Problem Discovery — Discussion and analysis to understand the customer’s operational challenges, business constraints, and data landscape.

- Process Formalization — Synthesizing the discovery learnings into a clear, structured representation, validated through iterative feedback from stakeholders.

- Optimization Formulation — Translating the formalized process into a solvable mathematical model, and selecting appropriate algorithms or solvers.

- Solution Evaluation — Presenting optimization results to the customer, who evaluates by comparing against their mental model and internal heuristics or simulators.

- Refinement — Iterating on the formulation based on observed discrepancies or evolving business needs.

Figure 1: The traditional decision optimization workflow and collaboration loop consists of several feedback loops.

While this methodology can produce high-impact outcomes, it is also time-consuming and resource-intensive. In particular, feedback loops that involve delivery teams, developers, and subject matter experts (SMEs) often require substantial coordination and introduce delays ranging from days to weeks.

To overcome this bottleneck, we built Alchemist — a system that keeps the rigor of traditional optimization but removes the friction of human handoffs.

Introducing Alchemist: Modular, Agent-Driven Optimization Workflows

Recent advances in large language models (LLMs) and autonomous coding agents have created new opportunities to streamline decision optimization workflows. To capitalize on them, we developed Alchemist, a modular architecture composed of specialized AI agents designed to dramatically reduce turnaround time for these workflows and to let business users manage and iterate on optimization pipelines directly. These agents leverage LLMs, persistent memory, and novel methods for focused engagement with autonomous coding systems. The guiding objective of Alchemist is to accelerate development cycles by eliminating reliance on traditional delivery and engineering teams. This gives domain experts and SMEs full control over the development, validation, and deployment of decision-optimization solutions, creating iterative, responsive tools that are well suited to complex business challenges.

Alchemist’s Architecture: Four Specialized Agents

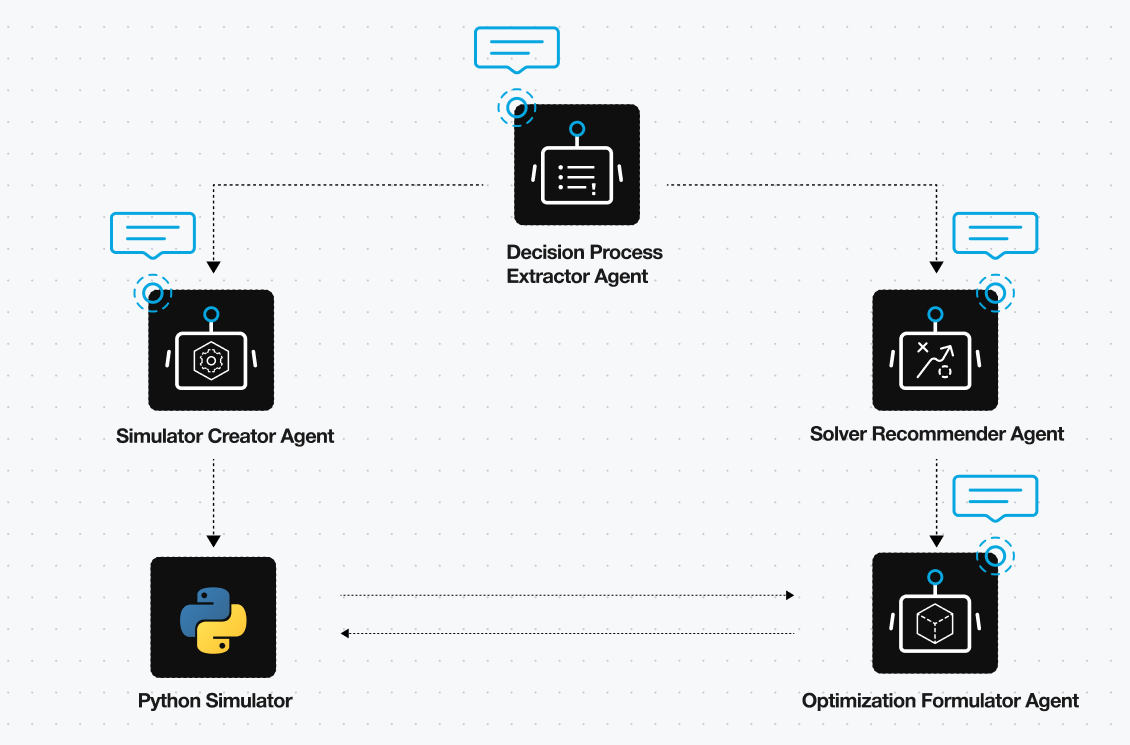

Figure 2: Alchemist consists of four specialized agents with the ability to interact with the user and one another, and in turn leverage their own autonomous coding agents to execute tasks.

Alchemist’s system architecture, illustrated in Figure 2, is composed of four autonomous and cooperative agents, each with a clearly defined scope and purpose.

1. Decision Process Extractor Agent

This agent identifies the core components of a decision process, including:

- Decision variables (the choices we make to influence outcomes)

- External and uncertain variables (outside factors that can’t be controlled or predicted)

- State variables (the current condition of the system at any point in time)

- Transition functions (rules that show how the system moves from one state to the next)

- Objective functions and constraints (the goals we aim for and the limits we need to follow)

These elements are presented in clear, user-friendly terms, enabling SMEs to easily validate and refine the model.
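To make these components concrete, here is a minimal Python sketch of a toy staffing process. The `DecisionProcess` class, the `rollout` helper, and every number below are hypothetical illustrations chosen for this example; they are not Alchemist’s internal representation.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple


@dataclass(frozen=True)
class DecisionProcess:
    """Hypothetical container for the components of a decision process."""
    initial_state: int                          # state variable at the start
    transition: Callable[[int, int, int], int]  # (state, decision, external) -> next state
    stage_cost: Callable[[int, int], float]     # (next state, decision) -> objective term
    is_feasible: Callable[[int], bool]          # constraint check on a single decision


def rollout(process: DecisionProcess,
            decisions: Sequence[int],
            externals: Sequence[int]) -> Tuple[int, float]:
    """Apply the decisions period by period; return final state and total cost."""
    state, total_cost = process.initial_state, 0.0
    for decision, external in zip(decisions, externals):
        if not process.is_feasible(decision):
            raise ValueError(f"infeasible decision: {decision}")
        state = process.transition(state, decision, external)
        total_cost += process.stage_cost(state, decision)
    return state, total_cost


# Toy example (all numbers invented): the state is a task backlog, the decision
# is the staff count per period, and the external variable is task arrivals.
toy = DecisionProcess(
    initial_state=0,
    transition=lambda backlog, staff, arrivals: max(backlog + arrivals - 2 * staff, 0),
    stage_cost=lambda backlog, staff: 10.0 * staff + 3.0 * backlog,
    is_feasible=lambda staff: 0 <= staff <= 5,
)

final_backlog, total_cost = rollout(toy, decisions=[2, 2, 3], externals=[5, 6, 4])
print(final_backlog, total_cost)  # 1 85.0
```

Keeping the transition, cost, and constraint as separate callables mirrors the list above, so each piece can be reviewed and changed on its own.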

2. Simulator Creator Agent

Once the decision process is defined, this agent builds a fully functioning simulator, a code-based replica of the SME’s internal model of their process. It leverages general-purpose autonomous coding agents with well-crafted prompts to:

- Ensure modular, testable, maintainable code

- Adhere to software development best practices

- Allow users to run “what-if” scenarios by adjusting decision, external, and uncertain variables

3. Solver Recommender Agent

After receiving the formalized decision process, this agent recommends appropriate solver techniques or optimization solvers. This agent can be customized to assess:

- Preferred solver families (e.g., MILP, constraint programming)

- Enterprise-approved tools and available licenses

- Performance or interpretability requirements
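As a rough illustration of the kind of filtering these criteria imply, the sketch below matches a problem’s traits against a small tool catalog and an approved-tools list. Everything here is an assumption made for illustration: the `ProblemTraits` fields, the `CATALOG` contents, and the rule-based logic are hypothetical, and the actual agent may reason about solvers quite differently.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Set, Tuple


@dataclass(frozen=True)
class ProblemTraits:
    linear: bool        # objective and constraints are linear
    integer_vars: bool  # at least one decision variable must be an integer


# Illustrative catalog only: maps a tool to the solver families it handles.
CATALOG: Dict[str, FrozenSet[str]] = {
    "HiGHS": frozenset({"LP", "MILP"}),
    "CBC": frozenset({"LP", "MILP"}),
    "OR-Tools CP-SAT": frozenset({"constraint programming"}),
}


def suitable_families(traits: ProblemTraits) -> List[str]:
    """Return solver families that fit the problem, in a fixed preference order."""
    if traits.linear and not traits.integer_vars:
        return ["LP"]
    if traits.linear:
        return ["MILP", "constraint programming"]
    return []  # nonlinear problems are outside this toy rule set


def recommend(traits: ProblemTraits, approved_tools: Set[str]) -> List[Tuple[str, str]]:
    """List (tool, family) pairs that are both suitable and enterprise-approved."""
    picks = []
    for family in suitable_families(traits):
        for tool in sorted(CATALOG):
            if tool in approved_tools and family in CATALOG[tool]:
                picks.append((tool, family))
    return picks


picks = recommend(
    ProblemTraits(linear=True, integer_vars=True),
    approved_tools={"HiGHS", "OR-Tools CP-SAT"},
)
print(picks)
# [('HiGHS', 'MILP'), ('OR-Tools CP-SAT', 'constraint programming')]
```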

4. Optimization Formulator Agent

This agent creates the optimization model and generates the corresponding solver code. It works closely with the Simulator Creator Agent by:

- Using the simulator to validate that optimization results make sense in practice

- Identifying and resolving discrepancies between optimized solutions and simulated outcomes

- Presenting outcomes in a way that subject-matter experts can easily understand and act upon

The four agents composing Alchemist’s architecture are designed to create a feedback loop that mirrors the human SME’s internal reasoning. The agents self-correct and ensure consistency between the optimization engine and the modeled process. The following example, which involves shift scheduling at a hospital, provides more intuition for how these agents work together.

Case Study: Optimizing Nursing Staff Schedule with Alchemist

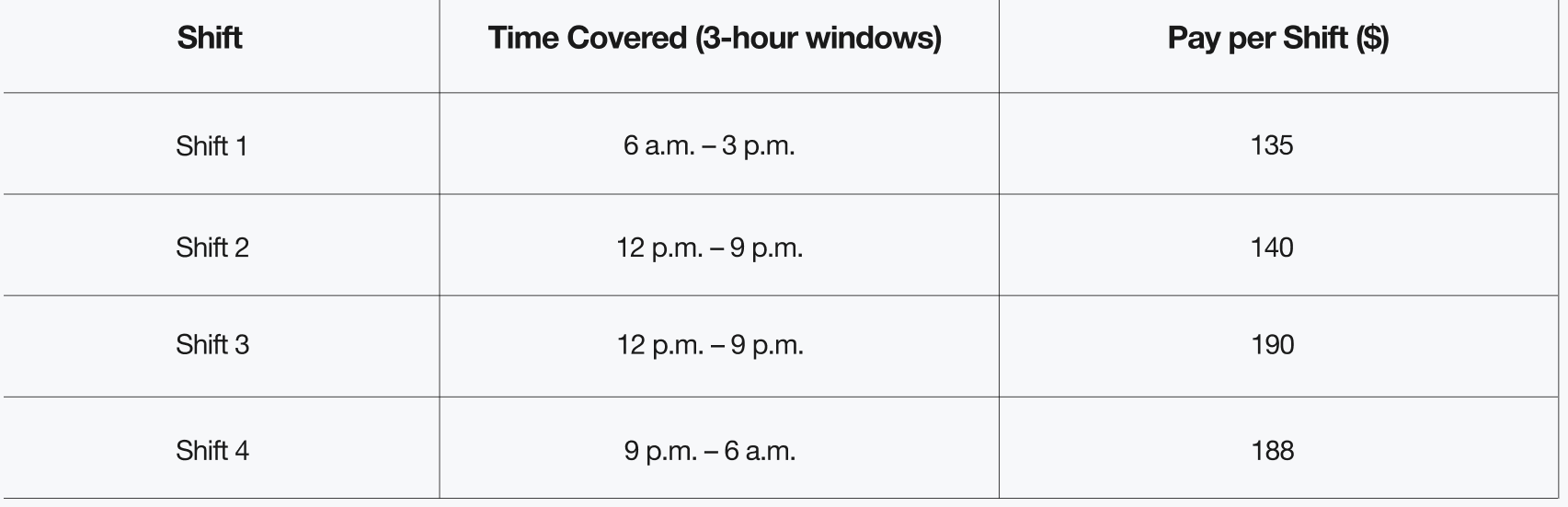

Hospitals operate continuously 24 hours a day, seven days a week, with staff rotating through different shifts. Scheduling the workforce is crucial to ensuring smooth and effective hospital operations. Consider the following nurse scheduling scenario: a hospital’s day is divided into eight consecutive, non-overlapping time windows, each lasting three hours. Every nurse works a nine-hour shift spanning three consecutive windows. The hospital must schedule enough nurses in each time window to meet the required staffing levels for patient care. There are four shifts in total, each with a different pay rate for the nine-hour period. Because four nine-hour shifts add up to 36 hours in a 24-hour day, the shifts overlap, and some time windows are covered by more than one shift.

Figure 3: Overview of hospital shift periods, with pay allocated per shift.

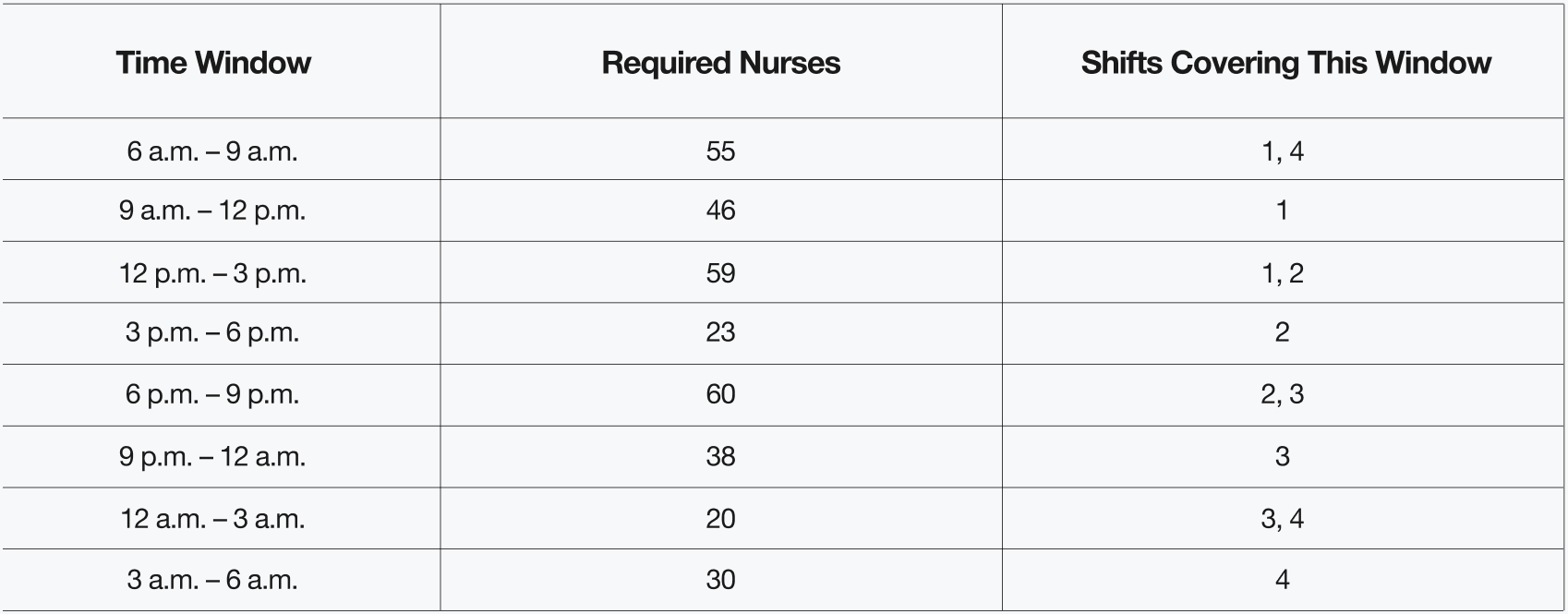

The number of required nurses per three-hour time window varies across the day and night, as seen in Figure 4.

Figure 4: Number of nurses required for each three-hour time window, showing which shifts cover each period.

The goal here is straightforward: assign the correct number of nurses to each shift so that all time windows are covered, while avoiding overscheduling and overspending on labor. By leveraging Alchemist’s agents, the hospital scheduler can build a rich, realistic model that reflects the complexity of hospital operations. The process begins with the Decision Process Extractor Agent, which works with the user to define the problem scenario layer by layer. The clip in Figure 5 shows how this interaction unfolds in practice.

Figure 5: The scheduling model is progressively refined, adding features like controlled under- or over-staffing and incorporating uncertainty in nurse availability, resulting in a more realistic and flexible schedule.

As shown in the above clip, the user gradually builds on the initial optimization formulation, introducing additional layers of complexity such as allowing limited understaffing or overstaffing, and adding stochastic variables to better capture the uncertainty in nurse availability and real-world behavior. These enhancements make the model more realistic and create a more robust and flexible schedule.
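For reference, the initial formulation that the user starts from can be written as a small integer program. The notation is our own assumption rather than something shown in the clip: $S$ is the set of four shifts, $W$ the set of eight time windows, $x_s$ the number of nurses assigned to shift $s$, $c_s$ the pay per nurse for shift $s$, $d_w$ the number of nurses required in window $w$, and $a_{sw} = 1$ if shift $s$ covers window $w$ (0 otherwise).

$$
\begin{aligned}
\min_{x} \quad & \sum_{s \in S} c_s \, x_s \\
\text{s.t.} \quad & \sum_{s \in S} a_{sw} \, x_s \ge d_w, && \forall w \in W, \\
& x_s \in \mathbb{Z}_{\ge 0}, && \forall s \in S.
\end{aligned}
$$

One common way to model limited understaffing, again as an assumption about how such a refinement might look, is to add a slack variable $0 \le u_w \le U$ per window with a penalty weight $\lambda$, relaxing the coverage constraint to $\sum_{s} a_{sw} x_s + u_w \ge d_w$ and adding $\lambda \sum_{w} u_w$ to the objective.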

To explore these ideas further, the next step is to create a simulator for the scheduling problem using the Simulator Creator Agent. The simulator allows the user to experiment with different staffing strategies to observe how they perform under a variety of conditions, understanding how small adjustments in scheduling can impact overall outcomes. The example below in Figure 6 demonstrates how the user can engage with the simulator in natural, conversational language.

Figure 6: A single command starts the Simulator Creator Agent, which automatically generates the full simulator. Users can then explore scenarios, test outcomes, and refine the system through a conversational interface.

As shown in the above clip, the Simulator Creator Agent can be launched with a simple command. Behind the scenes, this triggers an autonomous coding agent that automatically generates a complete software package for the simulator. The initial build may take five to ten minutes, as the system constructs the package from scratch (including the code and tests). Once ready, the user can conversationally engage with the Simulator Creator Agent, testing different scenarios, exploring outcomes, and making modifications.
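The generated simulator is a full software package, but the core idea of a “what-if” run can be sketched in a few lines. The example below is a toy Monte Carlo simulator under stated assumptions: the demand values, the shift-to-window coverage pattern, and the independent show-up probability are all hypothetical stand-ins, since the figures’ actual numbers are not reproduced here.

```python
import random
from typing import Sequence

# Hypothetical data: nurses required in each of the eight three-hour windows.
DEMAND = [4, 3, 6, 5, 10, 4, 6, 2]
# Assumed coverage: each of the four shifts spans three consecutive windows,
# with the last shift wrapping around midnight into window 0.
COVERS = [(0, 1, 2), (2, 3, 4), (4, 5, 6), (6, 7, 0)]


def simulate_shortfall(staffing: Sequence[int],
                       show_up_prob: float,
                       n_days: int = 1000,
                       seed: int = 0) -> float:
    """Average daily nurse shortfall, summed over windows, across simulated days."""
    rng = random.Random(seed)
    total_shortfall = 0
    for _ in range(n_days):
        # Each scheduled nurse shows up independently with probability show_up_prob.
        present = [sum(rng.random() < show_up_prob for _ in range(n)) for n in staffing]
        on_duty = [0] * len(DEMAND)
        for shift, windows in enumerate(COVERS):
            for window in windows:
                on_duty[window] += present[shift]
        total_shortfall += sum(max(d - n, 0) for d, n in zip(DEMAND, on_duty))
    return total_shortfall / n_days


# What-if: compare a tight schedule with one that adds a nurse to every shift.
tight = (3, 5, 5, 2)
padded = (4, 6, 6, 3)
print(simulate_shortfall(tight, show_up_prob=0.9))
print(simulate_shortfall(padded, show_up_prob=0.9))
```

Fixing the seed keeps runs reproducible, which makes it easier to compare two candidate schedules under the same assumptions.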

Figure 7: The user calls in the Solver Recommender Agent, which analyzes the scenario and recommends various optimization solvers, with reasoning provided for each recommendation.

While the simulator is invaluable for evaluating and understanding schedules, it doesn’t yet produce an optimized one. To bridge that gap, the user can ask the Solver Recommender Agent which optimization solvers suit their problem (see Figure 7 for a clip showing this process). For many users, this step is the most technical in the entire workflow, but it offers valuable transparency into how the system reasons about appropriate tooling. For users familiar with optimization techniques, this stage also provides an opportunity to interactively guide or refine the solver selection, tailoring the approach to their specific goals.

At this point, the user is ready to invoke the Optimization Formulator Agent. The system automatically formulates the scheduling task as an end-to-end optimization problem and works toward generating an optimized nurse schedule that balances cost, coverage, and operational flexibility.

Figure 8: With a single command, the Optimization Formulator Agent creates and runs an optimization engine that produces nurse schedules balancing cost, coverage, and flexibility. Users can interactively review results, explore scenarios, and adjust the model to account for factors like nurse fatigue or ward-specific demands.

As shown in Figure 8, the optimizer can be invoked just as easily as the simulator, through a simple, direct command. Behind the scenes, an autonomous coding agent enhances the existing scheduling package by integrating an optimization engine capable of generating the best possible nurse schedules. From there, the user can interactively explore results, perform “what-if” analyses, and even refine the underlying formulation, for example, by adding rules that account for nurse reliability, fatigue, or the unique demands of each ward’s operations.
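To give a feel for what the optimization engine computes, the sketch below solves a tiny version of the base scheduling problem by exhaustive search. It is a stand-in for illustration only: a real engine would call a proper solver, and the demand, pay rates, and coverage pattern are hypothetical values rather than the ones shown in Figures 3 and 4.

```python
from itertools import product
from typing import List, Sequence, Tuple

# Hypothetical data (not taken from the figures).
DEMAND = [4, 3, 6, 5, 10, 4, 6, 2]   # nurses required per three-hour window
PAY = [100, 120, 110, 150]           # pay per nurse for each nine-hour shift
# Assumed coverage: shift s covers three consecutive windows, wrapping at midnight.
COVERS = [(0, 1, 2), (2, 3, 4), (4, 5, 6), (6, 7, 0)]


def on_duty(staffing: Sequence[int]) -> List[int]:
    """Number of nurses present in each window for a given staffing vector."""
    counts = [0] * len(DEMAND)
    for nurses, windows in zip(staffing, COVERS):
        for window in windows:
            counts[window] += nurses
    return counts


def cheapest_schedule() -> Tuple[Tuple[int, ...], int]:
    """Enumerate all staffing vectors and keep the cheapest one that covers demand."""
    best = None
    # No shift ever needs more nurses than the largest single-window demand.
    for staffing in product(range(max(DEMAND) + 1), repeat=len(PAY)):
        if all(n >= d for n, d in zip(on_duty(staffing), DEMAND)):
            cost = sum(p * n for p, n in zip(PAY, staffing))
            if best is None or cost < best[1]:
                best = (staffing, cost)
    return best


staffing, cost = cheapest_schedule()
print(staffing, cost)  # (3, 5, 5, 2) 1750
```

Exhaustive search is only practical here because there are four integer variables; adding rules such as fatigue limits or per-ward demand quickly makes a dedicated MILP or constraint programming solver the sensible choice.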

Throughout this process, the Optimization Formulator Agent is proactively collaborative, checking with the user before embedding any suggested changes into the main package. Every step (testing, refining, and extending the system) can be done entirely through conversational interaction, or by simply sharing files and documents with the agents.

By this point, the user has a complete, reusable software package for nurse scheduling, one that can be continuously improved and adapted by authorized users. What once required a specialized development team can now be designed, tested, and iterated directly by the domain expert within minutes.

Alchemist in Fully Autonomous Mode*

*Note: this section is intended for technically inclined readers. Feel free to skip ahead if you’re focused on system-level concepts or use cases.

Although Alchemist’s agents are primarily designed to collaborate with users, we also evaluate their performance when operating in a fully autonomous mode, where the agents coordinate seamlessly without any user input. In this scenario, Alchemist’s agents independently interpret natural-language problem descriptions, formulate mathematical optimization models, and implement complete solutions end-to-end. To assess the system’s performance in an autonomous setting, we rely on a set of established benchmarks that test a model’s ability to translate high-level optimization problems into accurate, executable formulations. These benchmarks include ground-truth optimal solutions and objective values, allowing us to assess the accuracy of Alchemist’s outputs. The table below in Figure 9 summarizes Alchemist’s performance across these benchmarks, compared against the current state-of-the-art (SOTA) optimization models and agents.

| Benchmark | Alchemist Accuracy | SOTA (System: Accuracy) |
|---|---|---|
| OptiBench | 91.27% | OptimAI: 76.3% |
| NL4OPT | 100% | LLMOPT: 97.31% |
| NLP4LP | 94.9% | LLMOPT: 86.49% |
| BWOR | 86.49% | OR-LLM-Agent (DeepSeek-R1): 82.93% |
| IndustryOR | 59% | LLMOPT: 44% |
| MAMO-Easy | 96.11% | LLMOPT: 95.31% |
| MAMO-Complex | 67.68% | LLMOPT: 85.78% |
| ComplexOR | 100% | LLMOPT: 76.47% |
| OptMath | 53% | LLMOPT: 40% |

Figure 9: Alchemist delivers higher accuracy than the leading system on eight of the nine benchmarks; MAMO-Complex is the exception.

As the results show, Alchemist outperforms state-of-the-art systems on eight of the nine benchmarks, often by a wide margin. The exception is MAMO-Complex, where LLMOPT leads (85.78% versus 67.68%). While many leading approaches rely on custom LLMs trained specifically for optimization tasks, Alchemist achieves these results using a general-purpose commercial model, Anthropic’s Claude 3.7 Sonnet, a testament to the strength of its agentic design and reasoning architecture.
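Because the benchmarks ship with ground-truth objective values, accuracy can be scored by checking whether each solved objective matches its reference. The sketch below shows one plausible scoring rule; the matching criterion (a relative tolerance) and the `benchmark_accuracy` helper are assumptions for illustration, as the exact rule used in the evaluation above is not specified here.

```python
import math
from typing import Optional, Sequence, Tuple


def benchmark_accuracy(results: Sequence[Tuple[Optional[float], float]],
                       rel_tol: float = 1e-6) -> float:
    """Fraction of problems whose objective value matches the ground truth.

    Each result is (predicted_objective, true_objective); a prediction of None
    means no solution was produced (for example, the generated code failed).
    """
    if not results:
        raise ValueError("results must not be empty")
    correct = sum(
        1
        for predicted, truth in results
        if predicted is not None
        and math.isclose(predicted, truth, rel_tol=rel_tol, abs_tol=1e-9)
    )
    return correct / len(results)


# Invented sample: two matches, one wrong objective, one failed run.
sample = [(10.0, 10.0), (5.0, 5.0000001), (3.0, 4.0), (None, 2.0)]
print(f"{benchmark_accuracy(sample):.2%}")  # 50.00%
```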

Autonomous Performance with Transparent, Human-Centric Design

Alchemist’s agents can work within a shared codebase or operate independently on modular components. In each case, users retain full observability, and the system remains fully steerable, with no black boxes and no surprise outcomes. This transparency builds trust and accelerates adoption among users. It also clarifies ownership by shifting the power to the SME, who can directly oversee development, deployment, and refinement of optimization workflows in their business processes. Beyond saving time, Alchemist transforms how organizations approach decision-making, enabling faster iteration, smarter solutions, and greater operational agility without compromising clarity or accountability.

Alchemist transforms decision optimization from a slow, expert-heavy process into an accelerated, transparent, and fully controllable system. By bridging simulation, optimization, and feedback in a seamless loop, it empowers domain experts to turn intuition, operational expertise, and natural language insights into accurate, actionable solutions. With modular, agent-driven workflows, users can rapidly test scenarios, refine models, and implement robust process execution strategies, all without specialized development teams. The result is not just faster and more efficient decision-making, but smarter, more adaptable, and fully auditable systems that put human expertise at the center of enterprise operations.


About the Authors

Henrik Ohlsson is the Vice President and Chief Data Scientist at C3 AI. Before joining C3 AI, he held academic positions at the University of California, Berkeley, the University of Cambridge, and Linköping University. With over 70 published papers and 30 issued patents, he is a recognized leader in the field of artificial intelligence, with a broad interest in AI and its industrial applications. He is also a member of the World Economic Forum, where he contributes to discussions on the global impact of AI and emerging technologies.

Sina Khoshfetrat Pakazad is the Vice President of Data Science at C3 AI, where he leads research and development in Generative AI, machine learning, and optimization. He holds a Ph.D. in Automatic Control from Linköping University and an M.Sc. in Systems, Control, and Mechatronics from Chalmers University of Technology. With experience at Ericsson, Waymo, and C3 AI, Dr. Pakazad has contributed to AI-driven solutions across healthcare, finance, automotive, robotics, aerospace, telecommunications, supply chain optimization, and process industries. His recent research has been published in leading venues such as ICLR and EMNLP, focusing on multimodal data generation, instruction-following and decoding from large language models, and distributed optimization. Beyond this, he has co-invented patents on enterprise AI architectures and predictive modeling for manufacturing processes, reflecting his impact on both theoretical advancements and real-world AI applications.

Yang Song received the Ph.D. degree in Electronic and Information Engineering from The Hong Kong Polytechnic University (Hong Kong, China) in 2014, specializing in space-time signal processing. From 2014 to 2016, he was a Post-Doctoral Research Associate at Universität Paderborn (Germany), where he worked on structure-revealing data fusion for neuroscience applications. He then joined Nanyang Technological University (Singapore) as a Senior Research Fellow, leading research in SLAM, robust deep learning and graph neural networks until 2022. Currently, he is a Senior Research Scientist at C3 AI, driving innovations in AI and large-scale data analytics.

Anoushka Vyas is a Senior Data Scientist at C3 AI. She holds a master’s degree in computer science from Virginia Tech and a bachelor’s degree in electronics and communication engineering from IIIT Hyderabad. She has a strong background in graph neural networks, geometric deep learning, and optimization-integrated machine learning, and has authored publications on hyperbolic graph neural network architectures at The Web Conference (WWW) and on graph-based forecasting for precision agriculture in the IJCAI-ECAI AI for Good Track. Her current work focuses on developing scalable AI and optimization solutions for complex operational decision-making in enterprise environments.

Peter Wei is a Senior GenAI Data Scientist at C3 AI. He holds a Master’s degree from the University of California, Berkeley, with a background in Engineering and Statistics. Before joining C3 AI, he worked as a quantitative analyst on Wall Street. Peter has contributed to risk and portfolio management systems for some of the world’s largest reinsurance institutions. His work on machine learning for high-frequency trading has been adopted by leading financial organizations, helping to improve liquidity in the corporate bond market. He has also been recognized with awards in the Citi Financial Innovation Competition and the Economic Information Service Trading Competition. His recent research focuses on agentic AI systems that enhance operational efficiency and decision-making in finance, including agentic fraud detection, document generation, and automatic prompt optimization.