Transform your critical software systems from hard-to-maintain assets into modern, well-documented solutions that preserve expertise and enable future innovation

By Alexander Glynn, Data Scientist, C3 AI and Ravi Sharma, Lead Data Scientist, C3 AI

Legacy codebases remain core to national infrastructure, scientific research, and defense systems, yet they are increasingly difficult to maintain as expertise in languages such as Fortran, C, and COBOL fades. Manually modernizing these codebases would take months of developer time that could be spent on more productive work, such as building new products.

Instead, organizations can turn to generative AI tools for more efficient code translation. With C3 Generative AI for Code Translation, enterprises can create new documentation for legacy code, empower new developers to understand the codebase, and ultimately help the team modernize the software. By translating these codebases into modern languages, enterprises can unlock significant value, including the ability to build multi-agent AI workflows on top of them.

Building a Bridge Between Legacy Code and Developers

Maintaining legacy systems in government and business can cost billions of dollars a year. Many legacy systems are written in outdated languages (COBOL first appeared 66 years ago and remains pervasive in key financial and government systems), lack proper documentation, and are understood by only a handful of developers nearing retirement. Organizations face a harsh dilemma: these systems are critical and outdated, yet too complex and poorly documented to update safely.

Writing new documentation purely from a program’s source code is often too time-consuming. Generative AI software can significantly reduce this cost and speed up the process. C3 AI’s approach to creating documentation leverages our expertise in agentic process automation and distributed computing to produce new documentation that is grounded in the source code and traceable to specific code snippets.

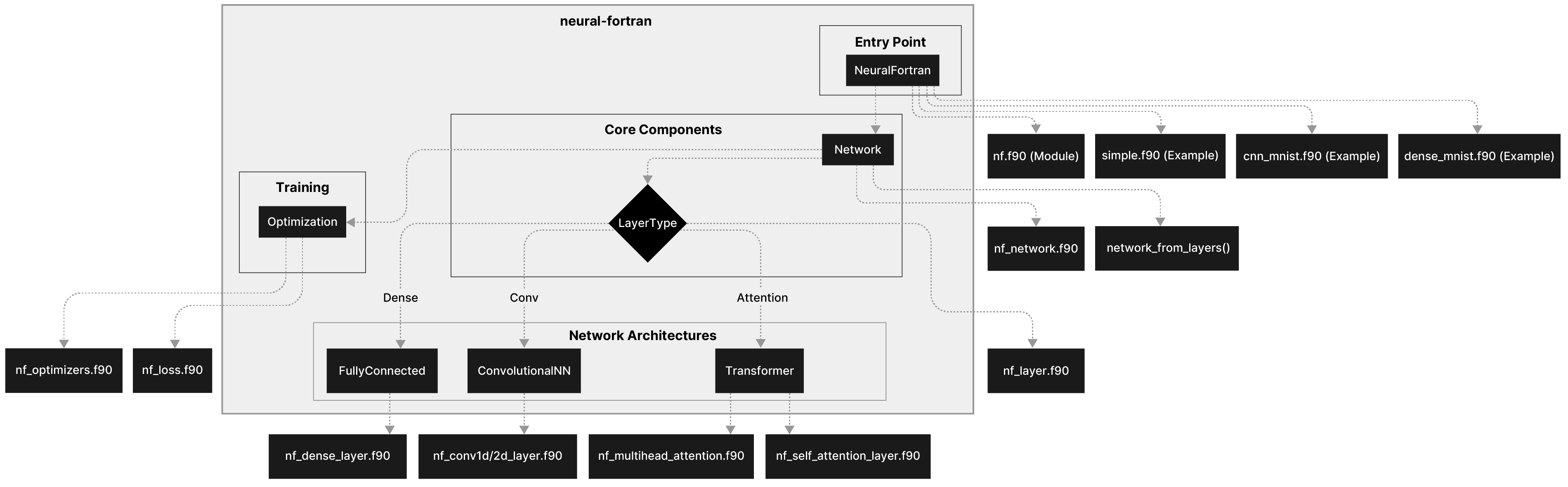

To give you a glimpse of C3 AI Code Translation in use, here are some artifacts produced as we documented and translated a Fortran neural network repository. We chose this repository because it is a moderately sized framework with some complex, modern components, it demands precision and interoperability in how those components are implemented, and it lends itself to interesting demonstrations.

How C3 Generative AI for Code Translation Approaches Documentation

C3 AI’s documentation workflow combines generative AI, static analysis, and agentic automation to systematically deconstruct complex software systems, map their relationships, and produce accurate, traceable documentation. The following steps outline how this process operates within the application:

1. Parse the codebase, turning it into a structured representation:

- Ingest code as an abstract syntax tree.

- Map internal module dependencies and call graphs, tracing the control flow.

- Identify external library dependencies, API calls, and system integrations.

- Track data flow between functions and modules.

- Catalog logical blocks of functionality and their boundaries, determined by the language of the source code and the representation created so far.

Figure 1: Summary of the high-level architecture of the neural Fortran library in documentation created by C3 Generative AI for Code Translation.

2. Create initial drafts and file-level comments:

- Generate architectural summaries describing overall system design, including a high-level summary of the main control flow and the key components (see Figure 1).

- Create component-level descriptions for major modules.

- Draft user stories based on code analysis to understand intended functionality.

- Document public APIs and interfaces.

- Identify key algorithms, business rules, and domain-specific logic.

3. Expand to comprehensive documents:

- Extract examples of core APIs being used, explaining their position and importance in the code.

- Document functional requirements based on actual code behavior and intended use cases, creating user stories for developers or the translation pipeline to consider.

- Dive deeper into explanations of complex algorithms and business logic, focusing on the core components of the library.
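The parsing in step 1 can be pictured with a small example: extract a call graph from an abstract syntax tree, then order routines so that every callee comes before its callers. This is a minimal sketch, not C3 AI’s implementation. It uses Python source and the standard-library `ast` and `graphlib` modules only because they ship with Python; Fortran, C, or COBOL would need a language-specific parser. The toy module and the "leaves first" ordering are assumptions chosen for illustration.

```python
import ast
from graphlib import TopologicalSorter

# Toy stand-in for one legacy module. Python is used only because the
# standard library can parse it; Fortran, C, or COBOL need their own parsers.
SOURCE = """
def sigmoid(x):
    return 1.0 / (1.0 + exp_neg(x))

def exp_neg(x):
    return 2.718281828 ** (-x)

def dense_forward(weights, inputs):
    return [sigmoid(sum(w * i for w, i in zip(row, inputs))) for row in weights]
"""


def build_call_graph(source: str) -> dict[str, set[str]]:
    """Map each top-level function to the top-level functions it calls."""
    tree = ast.parse(source)
    defined = {n.name for n in tree.body if isinstance(n, ast.FunctionDef)}
    graph: dict[str, set[str]] = {}
    for node in tree.body:
        if not isinstance(node, ast.FunctionDef):
            continue
        graph[node.name] = {
            sub.func.id
            for sub in ast.walk(node)
            if isinstance(sub, ast.Call)
            and isinstance(sub.func, ast.Name)
            and sub.func.id in defined  # internal calls only; builtins like sum/zip are skipped
        }
    return graph


def dependency_order(graph: dict[str, set[str]]) -> list[str]:
    """Order functions so every callee comes before its callers (leaves first)."""
    return list(TopologicalSorter(graph).static_order())


graph = build_call_graph(SOURCE)
order = dependency_order(graph)
```

Here `order` places `exp_neg` before `sigmoid` and `sigmoid` before `dense_forward`. Recursive routines form cycles, which `static_order()` reports by raising `graphlib.CycleError`; a fuller pipeline would need to group such routines (for example, as strongly connected components) before ordering them.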

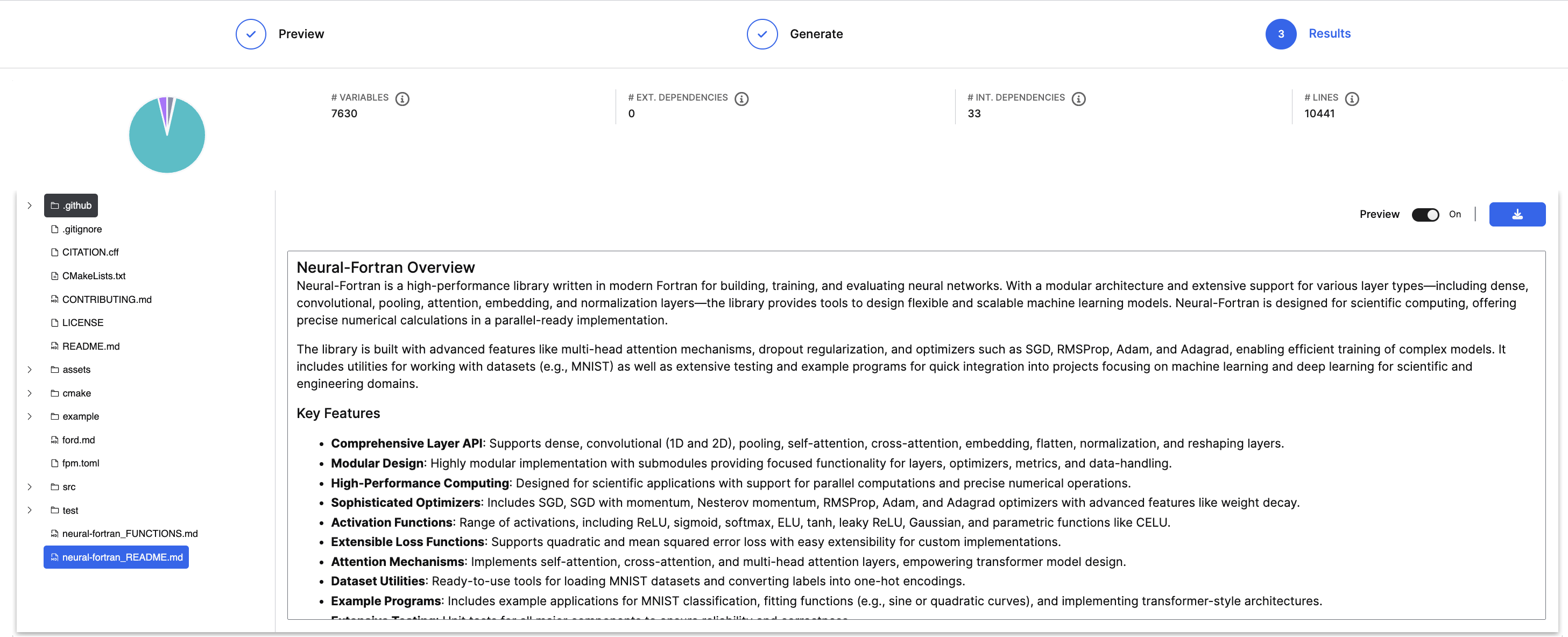

Figure 2: README for the Fortran library generated by C3 Generative AI for Code Translation, beginning with a high-level overview, as viewed within the application.

Agents iteratively refine the documentation for consistency, cross-reference it against the actual code, and generate examples. C3 AI’s agents can interact with their environment by writing code; here, that ability helps them explore the file structure and search for information. The documentation is then sent to humans for review and editing. When complete, it can be downloaded or added to the repository. In our testing, the generated documentation was accurate, reflected key features, and did not rely on comments or READMEs already present in the repository.
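One way to picture the cross-referencing step is a traceability check: every code snippet quoted in the generated documentation should actually occur in the source. The following is a minimal sketch under stated assumptions. It assumes Markdown documentation with fenced snippets and whitespace-insensitive substring matching. The file `activation.f90`, the routine `train_fast`, and all function names here are hypothetical, and a production check would likely need fuzzier matching than exact substrings.

```python
import re

FENCE = "`" * 3  # built indirectly so this example can sit inside a Markdown fence


def normalize(text: str) -> str:
    """Collapse runs of whitespace so formatting changes don't cause false mismatches."""
    return re.sub(r"\s+", " ", text).strip()


def extract_snippets(markdown: str) -> list[str]:
    """Return the bodies of fenced code blocks in a Markdown document."""
    pattern = re.escape(FENCE) + r"[^\n]*\n(.*?)" + re.escape(FENCE)
    return re.findall(pattern, markdown, flags=re.DOTALL)


def untraceable_snippets(markdown: str, sources: dict[str, str]) -> list[str]:
    """List documentation snippets that do not occur in any source file."""
    corpus = [normalize(text) for text in sources.values()]
    return [
        snippet
        for snippet in extract_snippets(markdown)
        if not any(normalize(snippet) in text for text in corpus)
    ]


# Hypothetical source file and generated doc; 'train_fast' does not exist in the source.
sources = {
    "activation.f90": (
        "pure function sigmoid(x) result(res)\n"
        "  real, intent(in) :: x\n"
        "  real :: res\n"
        "  res = 1 / (1 + exp(-x))\n"
        "end function sigmoid\n"
    )
}
doc = (
    f"The sigmoid is computed as:\n{FENCE}fortran\nres = 1 / (1 + exp(-x))\n{FENCE}\n"
    f"Training is launched with:\n{FENCE}fortran\ncall train_fast(net)\n{FENCE}\n"
)
flagged = untraceable_snippets(doc, sources)
```

In this example, only the `call train_fast(net)` snippet is flagged, which is the kind of ungrounded claim a refinement agent or human reviewer would then correct.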

Redesign and Refine: Translating the Code

Traditional approaches to code translation are time-intensive and error-prone. Manual rewriting requires deep expertise in both source and target languages, extensive testing, and careful preservation of business logic. For substantial codebases, such efforts have often taken months or years and cost millions of dollars. Automated translation tools have historically struggled to produce readable code or to improve speed or stability, and they typically don’t leverage modern frameworks or design patterns.

This is where large language models (LLMs) represent a breakthrough. Modern LLMs, with the right framework, demonstrate strong cross-language understanding and can handle complex translation tasks that would stump traditional tools. Of course, verification, human-in-the-loop engagement, and robust processes remain essential for trust in the translated repositories.

Modernizing code is challenging even with good documentation. A key goal of C3 Generative AI for Code Translation is to reduce the burden on humans while creating modern, well-structured code in the target language. Language models are rapidly becoming better generalist coders: top foundation models have improved dramatically on popular coding benchmarks over the past few years and can take on longer and more complex tasks.

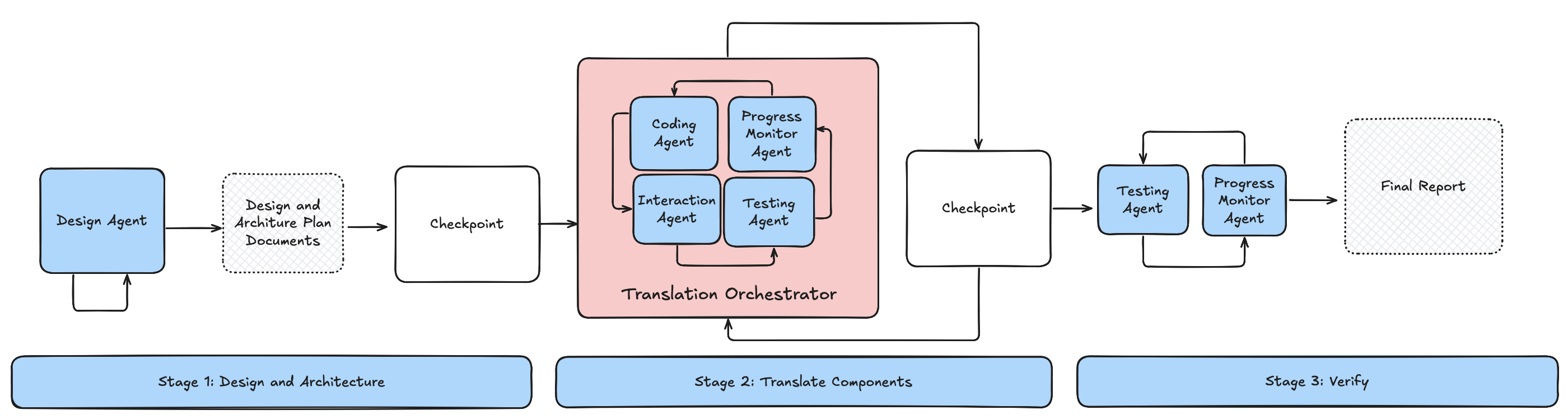

However, as task size and complexity grow, even the best language models struggle to maintain consistency across large contexts such as entire repositories. Simply opening your favorite coding agent and asking it to translate the whole repository will not produce a viable result. We’ve tested a variety of in-editor and headless coding agents and believe a more specialized approach is needed. To address this, our process uses a mix of agents and workflows. Additional guardrails make this approach more structured than simply letting agents and subagents run in a loop, yet it remains flexible enough to allow substantial debugging, revision, and feedback.

Why Use Agents?

C3 AI’s agent-based workflow introduces structure, oversight, and continuous validation into the code translation process, ensuring that large-scale tasks remain accurate, explainable, and adaptable as they evolve. The following capabilities show how agents provide this structure, oversight, and adaptability during translation:

- Continuous validation: Our approach enables continuous error checking and correction throughout complex tasks, through testing, static verification tools, and opportunities for human feedback and review.

- Early error detection: Frequent feedback loops catch errors early by compiling (using common build tools such as CMake), running tests (either directly or through testing frameworks such as GoogleTest), and using other developer tooling.

- C3 AI agentic framework: The same C3 AI agentic framework drives both unit test generation and code translation. It coordinates specialized agents with defined roles and communication between them, enabling them to collaborate efficiently on complex repositories while maintaining accuracy, consistency, and traceability.

- Progress mapping: The system periodically generates a mapping between unit tests and user stories to track completed versus outstanding work, creating checkpoints. It also tracks which part of the codebase is currently being worked on and the overall progress. Users can interact with the generated codebase at checkpoints, keeping a human in the loop and allowing for feedback or changes.
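The continuous validation and early error detection described in this list boil down to a loop: propose code, run checks, feed the errors back, and stop on success or when an attempt budget runs out. Below is a minimal, framework-agnostic sketch of that loop. It is not C3 AI’s agentic framework. `toy_propose` and `toy_check` are hypothetical stand-ins for a coding agent and a build-and-test step (in practice, something like a CMake build plus a GoogleTest run), and the default budget of five attempts is an arbitrary assumption.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CheckResult:
    passed: bool
    errors: list[str] = field(default_factory=list)


def feedback_loop(
    propose: Callable[[list[str]], str],
    check: Callable[[str], CheckResult],
    max_attempts: int = 5,
) -> tuple[str, bool, list[tuple[int, list[str]]]]:
    """Alternate generation and validation, feeding each round's errors back in.

    Returns the last candidate, whether it passed, and a per-attempt history so a
    human can review the outcome at a checkpoint either way.
    """
    feedback: list[str] = []
    history: list[tuple[int, list[str]]] = []
    candidate = ""
    for attempt in range(1, max_attempts + 1):
        candidate = propose(feedback)
        result = check(candidate)
        history.append((attempt, result.errors))
        if result.passed:
            return candidate, True, history
        feedback = result.errors
    return candidate, False, history


# Hypothetical stand-ins for a coding agent and a build-and-test step.
def toy_propose(feedback: list[str]) -> str:
    if any("expected ';'" in msg for msg in feedback):
        return "int add(int a, int b) { return a + b; }"
    return "int add(int a, int b) { return a + b }"


def toy_check(code: str) -> CheckResult:
    # A real check would run the build and the test suite, not a string test.
    if "a + b;" in code:
        return CheckResult(passed=True)
    return CheckResult(passed=False, errors=["error: expected ';' before '}' token"])


code, passed, history = feedback_loop(toy_propose, toy_check)
```

Returning the history even on failure is a deliberate choice in this sketch: a run that exhausts its budget becomes a natural checkpoint for a human to inspect, rather than a silent failure.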

Multi-agent Versus Single-agent

As code translation tasks grow in scale and complexity, a single coding agent often falls short. C3 AI’s multi-agent framework introduces structure, oversight, and collaboration, enabling more reliable and adaptable translation across large repositories.

Let’s first lay out the limitations of using a single coding agent. Single-agent approaches struggle with large codebases, often losing critical information due to context compaction. This can lead to misinterpretations and inconsistent outputs, especially when translating across modules. Without external feedback, a lone agent relies solely on its own assessment, which limits error detection and correction.

Multi-agent frameworks can address these limitations. Multi-agent systems coordinate specialized agents with defined roles, enabling tactical planning and modular implementation. Continuous feedback loops between agents improve validation and debugging throughout the process. The orchestrator enforces guardrails and manages context transitions, ensuring consistency and traceability across stages. We employ systems like these across all C3 AI agentic solutions.
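To make "defined roles," "guardrails," and "context transitions" concrete, here is a minimal sketch of an orchestrator that (a) lets each role write only inside its own directories and (b) hands the next stage a bounded summary instead of the full transcript. This is an illustrative assumption, not C3 AI’s orchestrator. The role names, directory layout, and 200-character budget are all hypothetical, and a real context budget would be measured in tokens rather than characters.

```python
from dataclasses import dataclass, field
from pathlib import PurePosixPath


@dataclass
class Role:
    name: str
    writable: tuple[str, ...]  # directories this role may modify


@dataclass
class Orchestrator:
    roles: dict[str, Role]
    max_handoff_chars: int = 200  # crude stand-in for a context budget
    log: list[str] = field(default_factory=list)

    def authorize_write(self, role_name: str, path: str) -> bool:
        """Guardrail: allow a write only inside the role's directories, and log it."""
        # A real guardrail should also resolve '..' and symlinks before checking.
        target = PurePosixPath(path)
        allowed = any(target.is_relative_to(d) for d in self.roles[role_name].writable)
        self.log.append(f"{role_name} -> {path}: {'allowed' if allowed else 'blocked'}")
        return allowed

    def handoff(self, notes: list[str]) -> str:
        """Pass a bounded summary to the next stage instead of the full transcript."""
        summary = ""
        for note in notes:  # keep the earliest notes that fit within the budget
            if len(summary) + len(note) + 1 > self.max_handoff_chars:
                break
            summary += note + "\n"
        return summary


orch = Orchestrator(roles={
    "test_writer": Role("test_writer", ("tests",)),
    "translator": Role("translator", ("src",)),
})
```

With these roles, the translator can edit `src/` but is blocked from editing `tests/`, so it cannot make a failing test pass by rewriting the test. This is one simple way to enforce the separation between writing and verifying. `PurePosixPath.is_relative_to` requires Python 3.9 or later.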

Figure 3: The architecture of C3 Generative AI for Code Translation’s agentic workflow: mixing structured control and agentic action.

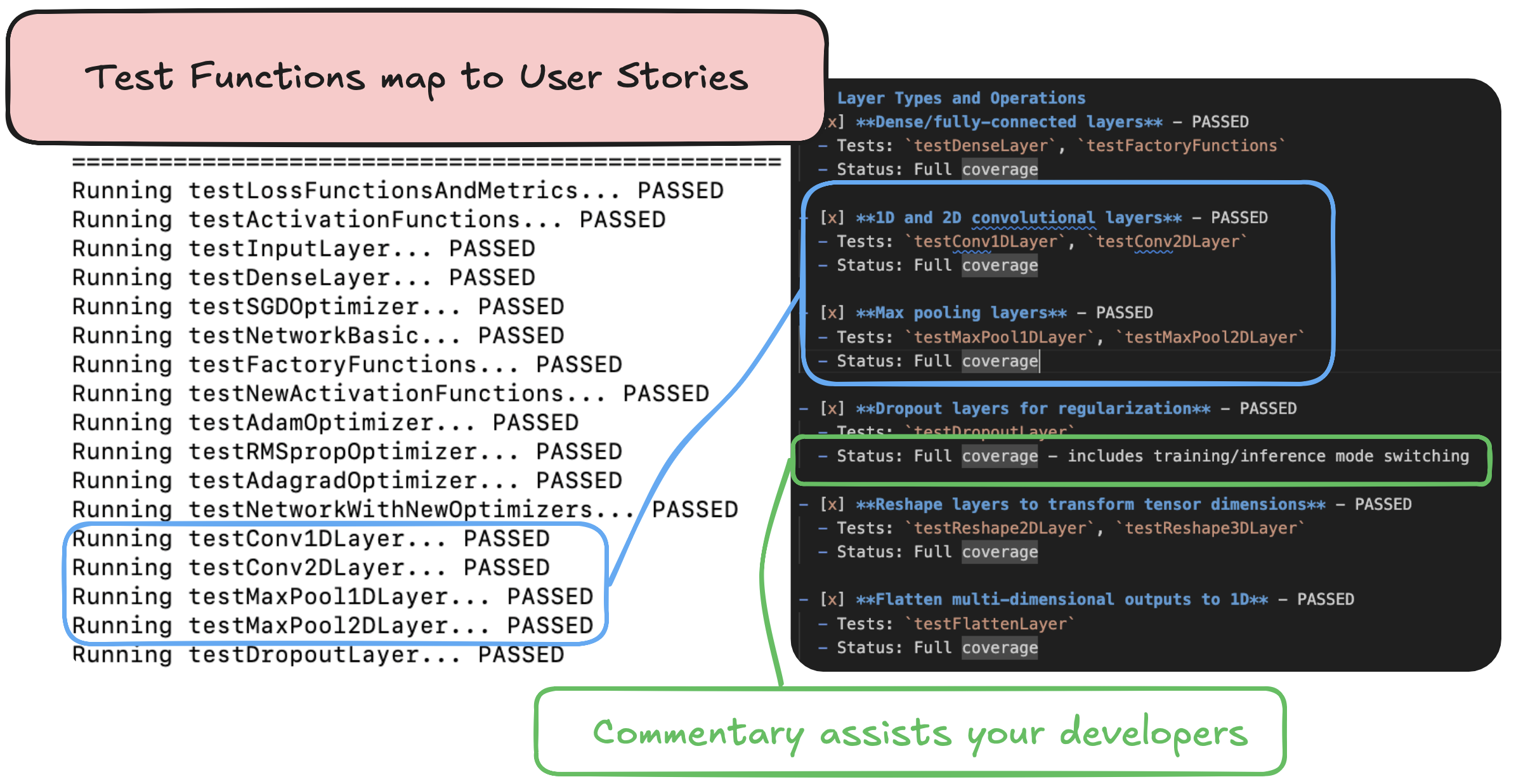

Trust and Verify: Unit Testing and Feedback

The iterative process of code translation requires a clear feedback signal indicating whether the generated code matches the intended functionality. This starts with the system generating user stories from the documentation alongside the repository overview. During the translation process, users can modify these stories to better prioritize critical test cases and coverage goals. For instance, a user might remove a story about “C interoperability” for a C++ codebase or add one that captures the logic of a critical algorithm. These user stories and the original codebase form the basis for unit test generation. The main coding agent then iterates on each component until it passes the tests, which is essentially a test-driven development approach.
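Because tests are grounded in the original codebase as well as the user stories, one natural kind of test compares the translated routine against outputs recorded from the original, within a numerical tolerance. This matters for a library that requires precision. The following is a minimal sketch of that golden-value idea, assuming Python stand-ins for both implementations. In practice, the reference outputs would come from running the legacy binary. The function names, input points, and tolerances are hypothetical choices.

```python
import math


def reference_sigmoid(x: float) -> float:
    """Stand-in for the original routine; in practice, outputs come from the legacy binary."""
    return 1.0 / (1.0 + math.exp(-x))


def translated_sigmoid(x: float) -> float:
    """Stand-in for the translated routine: same math, rearranged to avoid overflow for large negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


# Golden outputs recorded once from the original implementation.
GOLDEN = {x: reference_sigmoid(x) for x in (-20.0, -1.0, 0.0, 0.5, 20.0)}


def mismatches(fn, golden: dict[float, float], rel_tol: float = 1e-12, abs_tol: float = 1e-15) -> list[float]:
    """Return the inputs where fn disagrees with the recorded outputs beyond tolerance."""
    return [
        x for x, expected in golden.items()
        if not math.isclose(fn(x), expected, rel_tol=rel_tol, abs_tol=abs_tol)
    ]


ok = mismatches(translated_sigmoid, GOLDEN)
buggy = mismatches(lambda x: 1.0 / (1.0 + math.exp(x)), GOLDEN)  # sign error
```

The rearranged translation passes while the sign error is caught everywhere except at `x = 0`, where both formulas happen to agree. This also shows why golden inputs should cover more than one point.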

The system’s architecture separates responsibilities between agents. This helps address two common problems: context overflow and AI optimism, the tendency of coding agents, especially during long runs, to overestimate the quality of their own output. When the same agent writes the code, writes the tests, and evaluates the results, it is prone to missing flaws. By dedicating the verifier specifically to finding problems in tests and code, rather than creating them, we catch more issues that would otherwise go undetected. The model can become its own worst critic.

This separation creates better test quality, which provides clearer signals about code correctness and ultimately improves the quality of the translated repository. Humans can jump in at checkpoints, edit tests, update code, or resolve blockers, before sending the code back to the translation system to keep working.

Figure 4: Real outputs mapping test functions to user stories, showing a subset of passing tests along with the user stories they cover.

Libraries, Games, and Simulations: A Variety of Modernizations

C3 AI Code Translation can be used for a wide variety of tasks. Codebases vary widely, targeting different goals and integrating with different systems. We have worked to cover many of these differences, from handling display and UI/UX, to building libraries ready for downstream use, to integrating with other system dependencies.

To demonstrate the effectiveness of our multi-agent translation across various types of programs, and to test solutions to these challenges, we have applied it to the Fortran neural network library discussed above, to complex simulations, and to games such as Battleship.

Figure 5: An example screen from a translated Battleship game.

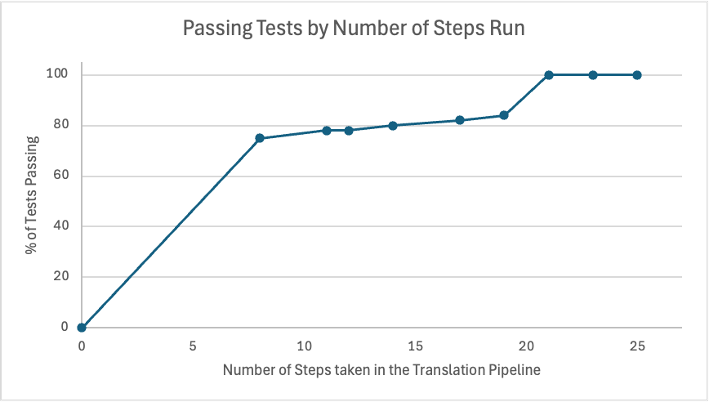

For the Fortran neural network library, the translation process autonomously converted the entire codebase to C++, preserving the library’s API structure and mathematical operations while adapting to C++ conventions for memory management, templating, and object-oriented design. Though we believe human-in-the-loop review is essential for long-running tasks, we wanted to test the system with no human feedback: 100% agentic. Using the architecture and training mechanisms in the translated code, C3 AI Code Translation was tasked with creating an MNIST digit classifier from the code it had translated autonomously.

Figure 6: C3 Generative AI for Code Translation autonomously translates a neural network library from Fortran and trains on MNIST, achieving 85% accuracy.

After a minute of training on a CPU, the resulting classifier achieves a reasonable 85% accuracy on this task. All of this was done autonomously, using a library the system had translated from another language. Of course, a human might want to swap in some of the other layers implemented during the translation process, such as 2D convolution or self-attention; there is no need to stick with the simplest architecture.

We can track the progress of this translation by monitoring the percentage of user stories whose accompanying unit tests pass after each run of the agent (called a “Step”). Progress initially surges, followed by a period of debugging, before the application resolves the remaining blockers and moves on to verifying the passing tests.
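The progress metric can be computed directly from the story-to-test mapping. Below is a minimal sketch that assumes a story counts as complete only when it has at least one mapped test and all of its mapped tests pass. The exact rule behind Figure 7 is not specified here, so treat this as one plausible definition. The stories, test names, and per-step results are made up for illustration and are not data from the run described above.

```python
def story_progress(story_to_tests: dict[str, list[str]], passing: set[str]) -> float:
    """Percent of user stories that have at least one test, with every mapped test passing."""
    if not story_to_tests:
        return 0.0
    done = sum(
        1 for tests in story_to_tests.values()
        if tests and all(t in passing for t in tests)
    )
    return 100.0 * done / len(story_to_tests)


# Made-up mapping and per-step results, for illustration only.
stories = {
    "US-1: dense layer forward pass": ["test_dense_forward"],
    "US-2: backpropagation": ["test_dense_backward", "test_gradient_check"],
    "US-3: SGD optimizer step": ["test_sgd_step"],
    "US-4: C interoperability": [],  # no test generated yet
}
passing_by_step = [
    {"test_dense_forward"},
    {"test_dense_forward", "test_dense_backward"},
    {"test_dense_forward", "test_dense_backward", "test_gradient_check", "test_sgd_step"},
]
curve = [story_progress(stories, passing) for passing in passing_by_step]
```

Requiring every mapped test to pass means a partially fixed story (US-2 at the second step) does not move the curve, which is one reason such a curve can plateau during debugging before jumping.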

Figure 7: The percentage of user stories with passing unit tests, tracked by the number of steps taken by the translation pipeline.

With C3 Generative AI for Code Translation, organizations can modernize legacy systems while maintaining functionality and improving code quality. Expansions to this framework will let us modernize further languages and integrations — offering a path forward for legacy systems, empowering teams to move towards the future with confidence.

Ready to get started? Learn more about how you can start seeing value from C3 Generative AI in our free two-day workshops.

About the Authors

Alexander Glynn is a Data Scientist on the Generative AI team at C3 AI, where he works with industry and government clients to implement systems for exploring, summarizing, and transforming unstructured information. Alex holds a bachelor’s degree in applied mathematics from Harvard University and has previous publications on information retrieval and fair machine learning at conferences including NeurIPS.

Ravi Sharma is a Lead Data Scientist at C3 AI overseeing generative AI projects and applications for U.S. government and government-adjacent customers. He holds a doctorate in materials science and engineering from the University of Michigan where he focused on characterization and empirical modeling of soft condensed matter. Prior to joining C3 AI, he performed generative AI research for the Department of Defense.

Special thanks to Michael Brady and Steven Ponchio for their leadership as Solution Managers on this project, and Jeffrey Schneider for his contributions.