Enterprise AI represents a powerful new category of business software that is a core enabling technology of digital transformation. Over the next several years, the typical large organization will deploy dozens or hundreds of AI-enabled software applications across every part of its operations. Shell, for example, has 280 AI software projects in flight.

Just as in prior software innovation cycles, many companies today are taking a “do it yourself” approach and trying to build the new technology themselves – with no success. We saw this same pattern in the 1980s, when Oracle introduced relational database management system (RDBMS) software to the market. Many CIOs tried building their own database software. None succeeded. Eventually those CIOs were replaced, and their successors installed a working system from Oracle, IBM, or another provider.

The pattern repeated itself in the 1990s, when enterprise application software, including ERP and CRM, was introduced. Organizations spent hundreds of person-years and hundreds of millions of dollars on failed attempts to build their own ERP or CRM systems. After those CIOs were replaced, their successors deployed a viable product from SAP, Siebel Systems, or another provider.

Today, many IT organizations are trying to develop a general-purpose AI platform internally, combining open source software with microservices from cloud providers such as AWS and Google. None have succeeded. The reasons for failure are the same as in prior cycles: Organizations grossly underestimate the complexity of building, testing, operating, and maintaining an enterprise-class AI software platform.

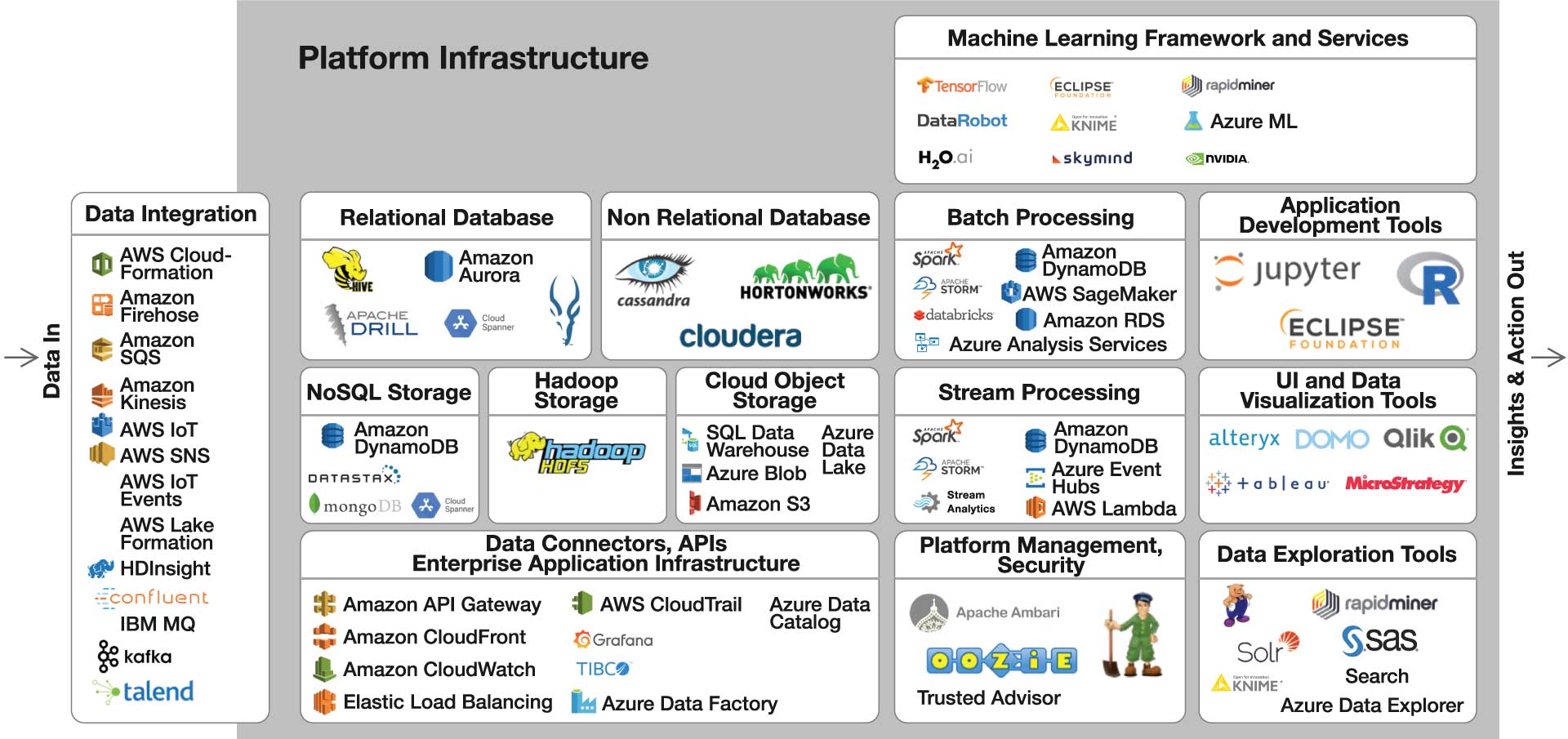

To make matters worse, developing an AI software platform is far more complex than building a business application like CRM or ERP. Why? The technology stack required to develop, test, and operate enterprise-class AI applications resembles the figure below. Few organizations have the internal resources and expertise required to assemble, integrate, and maintain this extensive set of technology components and services.

The AI Software Stack: The “build it yourself” approach requires stitching together dozens of disparate open source components from different developers with different APIs, different code bases, and different levels of maturity and support.

20 Questions to Ask Your IT Department

Going down the internal build path can be a very costly mistake, with long-term damage to an organization’s competitiveness and economic performance. CEOs, CFOs, and board members need to understand their IT department’s approach to enabling the organization’s AI strategy. Here are 20 key questions to ask your IT department.

1. What is our AI roadmap?
2. How are we prioritizing use cases on the roadmap?
3. For each identified use case, what is the expected value within 1 year?
4. What are the “quick win” AI use cases that have been identified?
5. What is the projected annual economic value of our current AI roadmap?
6. What are we doing to accelerate time to value with our AI strategy?
7. Are we leveraging pre-built AI applications for any use cases?
8. Have we set standards for our AI technology stack that ensure a consistent approach across our enterprise?
9. Can we run our AI applications on any cloud platform (e.g., AWS, Azure, Google, IBM)?
10. Can we run our AI applications on a combination of cloud platforms?
11. How easy is it to replace one component with another (e.g., replace Oracle database with Postgres)?
12. What are we doing to future-proof our AI applications to take advantage of ongoing advances in cloud services, open source software, AI algorithms, and other innovations?
13. Does our approach enable rapid development and deployment (e.g., 3-6 months to develop and deploy an AI application)?
14. Does our approach enable the consistent use of an enterprise-wide data model that can be leveraged across all AI applications?
15. Are we able to efficiently and effectively aggregate, correlate, and normalize data of any type, volume, and velocity from all required data sources, both internal and external, into a unified, federated data image?
16. What are we doing to minimize the amount of code that needs to be written and maintained for each AI application being developed?
17. What are we doing to minimize the resources (data scientists, architects, developers, analysts, etc.) required to design, develop, and deploy AI applications?
18. What are we doing to minimize the complexity of our AI applications without sacrificing effectiveness?
19. What are we doing to ensure the quality of data being ingested by our AI applications?
20. What is our strategy to ensure the ongoing operability of our AI applications as their underlying open source components are updated?

Avoiding the Internal Build Fiasco

History has a way of repeating itself. Unfortunately, many organizations will continue down the internal build path and end up wasting money – and, even more important, valuable time – on failed attempts to build their own AI platform. But just as no organization today builds its own database, ERP, or CRM software, the “build it yourself” AI platform will be a distant memory in five or ten years.

For those CEOs, CFOs, and board members who avoid that costly route today, it will also be a painless memory.

Learn more about Tom Siebel’s views in his Wall Street Journal best-selling book, Digital Transformation: Survive and Thrive in an Era of Mass Extinction.