Tuning a Machine Learning Model

Loss Functions

A loss function is the objective function that an AI/ML algorithm seeks to optimize during training. It is often written as a function of the model weights θ, denoted J(θ), and training aims to minimize it. Data scientists often try different loss functions to improve a model, for example to make it less sensitive to outliers, handle noise better, or reduce overfitting.
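The sketch below makes the outlier point concrete. It compares three common losses on the same residuals (prediction minus actual), first without and then with a single large outlier. It is a minimal plain-Python illustration, not C3 AI's implementation. Mean absolute error (MAE) and Huber loss are swapped-in examples of more outlier-robust alternatives that the text does not name. The Huber threshold `delta=1.0` and the residual values are arbitrary assumptions.

```python
def mse(residuals):
    """Mean squared error: average of squared residuals."""
    return sum(r * r for r in residuals) / len(residuals)


def mae(residuals):
    """Mean absolute error: average of absolute residuals."""
    return sum(abs(r) for r in residuals) / len(residuals)


def huber(residuals, delta=1.0):
    """Huber loss (delta is an assumed threshold): quadratic for |r| <= delta, linear beyond it."""
    total = 0.0
    for r in residuals:
        a = abs(r)
        if a <= delta:
            total += 0.5 * a * a
        else:
            total += delta * (a - 0.5 * delta)
    return total / len(residuals)


# Hypothetical residuals: identical except the last one becomes an outlier.
clean = [0.5, -0.5, 0.5, -0.5]
with_outlier = [0.5, -0.5, 0.5, 10.0]

for name, loss in [("MSE", mse), ("MAE", mae), ("Huber", huber)]:
    print(f"{name}: clean={loss(clean):.3f} with_outlier={loss(with_outlier):.3f}")
```

With these made-up residuals, the single outlier raises MSE from 0.25 to about 25.19, roughly a 100x jump. Over the same change, MAE rises from 0.5 to 2.875 and Huber rises from 0.125 to about 2.47. Squaring lets one large error dominate the loss, and that is why robust losses are sometimes preferred.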

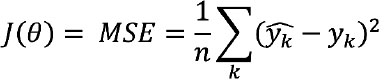

A simple example of a loss function is mean squared error (MSE), which is often used to optimize regression models. MSE measures the average of the squared differences between predicted and actual output values. A loss function based on MSE can be written as follows:

$$J(\theta) = \mathrm{MSE} = \frac{1}{n}\sum_{k=1}^{n}\left(\hat{y}_k - y_k\right)^2$$

where $\hat{y}_k$ represents a model prediction, $y_k$ represents an actual value, and there are $n$ data points.
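The sketch below shows training as the minimization of J(θ). It computes the MSE loss for a hypothetical one-feature linear model, ŷ_k = θ0 + θ1·x_k, and runs plain gradient descent on that loss. The model form, the learning rate `lr`, the step count, and the toy data are all illustrative assumptions. Real training pipelines usually rely on library optimizers rather than a hand-written loop.

```python
def predict(theta, x):
    """Hypothetical linear model: y_hat = theta0 + theta1 * x."""
    return theta[0] + theta[1] * x


def mse_loss(theta, xs, ys):
    """J(theta) = (1/n) * sum_k (y_hat_k - y_k)^2."""
    n = len(xs)
    return sum((predict(theta, x) - y) ** 2 for x, y in zip(xs, ys)) / n


def mse_gradient(theta, xs, ys):
    """Partial derivatives of J(theta) with respect to theta0 and theta1."""
    n = len(xs)
    g0 = g1 = 0.0
    for x, y in zip(xs, ys):
        err = predict(theta, x) - y
        g0 += 2.0 * err / n
        g1 += 2.0 * err * x / n
    return [g0, g1]


def train(xs, ys, lr=0.05, steps=2000):
    """Plain gradient descent: repeatedly step against the gradient of J(theta)."""
    theta = [0.0, 0.0]
    for _ in range(steps):
        g = mse_gradient(theta, xs, ys)
        theta = [theta[0] - lr * g[0], theta[1] - lr * g[1]]
    return theta


# Toy data generated by y = 1 + 2x, so the minimum achievable MSE is 0.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
theta = train(xs, ys)
print(theta, mse_loss(theta, xs, ys))
```

The toy data lie exactly on y = 1 + 2x, so the loop drives θ toward [1, 2] and J(θ) toward zero. The gradient it follows is ∂J/∂θ0 = (2/n)Σ(ŷ_k − y_k) and ∂J/∂θ1 = (2/n)Σ(ŷ_k − y_k)x_k.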

It is important, however, to recognize the limitations of loss functions. Relying on the loss value alone as an indicator of prediction accuracy can lead to erroneous model setpoints. Because MSE squares each error, it discards the error's sign, so a model that consistently predicts too low and one that consistently predicts too high can receive the same score. For example, the two linear regression models shown in the following figure have the same MSE, but the model on the left is under-predicting while the model on the right is over-predicting.
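The sketch below is a quick numerical check of this weakness. It uses hypothetical predictions that are each off by exactly 1: one set too low and one set too high. MSE cannot tell the two sets apart, but the mean signed error can. That metric is a simple bias check added here as an assumption; the text does not prescribe it. The data are made up and do not reproduce Figure 13.

```python
def mean_squared_error(preds, actual):
    """Average of squared differences; the sign of each error is lost."""
    return sum((p - a) ** 2 for p, a in zip(preds, actual)) / len(actual)


def mean_error(preds, actual):
    """Mean signed error (bias): negative means under-predicting, positive over-predicting."""
    return sum(p - a for p, a in zip(preds, actual)) / len(actual)


actual = [2.0, 4.0, 6.0, 8.0]
under = [a - 1.0 for a in actual]  # every prediction is 1 too low
over = [a + 1.0 for a in actual]   # every prediction is 1 too high

for name, preds in [("under", under), ("over", over)]:
    print(name, mean_squared_error(preds, actual), mean_error(preds, actual))
```

Both sets of predictions have an MSE of 1.0. The mean signed error is -1.0 for the under-predicting model and 1.0 for the over-predicting one. This is why a loss value is usually paired with other evaluation checks.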

Loss Function Is Insufficient as the Only Evaluation Metric

Figure 13: These two linear regression models have the same MSE, but the model on the left is under-predicting and the model on the right is over-predicting.