In a previous blog post, we talked about what an enterprise AI application is and what constitutes a holistic enterprise AI strategy. In this post, we will dive deeper into the process of developing and operating enterprise AI applications.

As discussed earlier, enterprise AI applications represent the convergence of four major disruptive technologies: elastic cloud, big data, IoT sensor networks, and AI and machine learning (ML). Ultimately, though, an enterprise AI application is in many ways like a typical piece of enterprise software. From an operational perspective, it spans the same lifecycle stages: application development, deployment and operations, and ongoing maintenance. Yet in each of those phases, an enterprise AI application presents its own peculiarities and challenges.

Unlike rules-based software, AI and ML systems learn from actual history, improve their performance continuously, and adapt to changing conditions and requirements. Enterprise AI applications can significantly outperform rules-based systems across a wide range of business use cases, such as medical image diagnostics, predictive equipment maintenance, and customer churn detection. At the same time, enterprise AI applications can be more difficult to develop and operate because of their distinct requirements. Developing and operating them at scale requires a novel approach, new skill sets and teams, different sets of tools and systems, a new technology stack, and a powerful enterprise AI platform.

Application Development

Data is a foundational element for any enterprise software, and even more so for an enterprise AI application. Without complete and representative data to learn from, an AI system cannot generate meaningful insights or outputs. Therefore, having a unified, current, and clean data image across the enterprise is a prerequisite for any enterprise-scale AI development.

Today, a typical enterprise has multiple distinct operational systems, dozens of disconnected teams and processes, hundreds of disparate data sources, and thousands of production applications. It is a massive challenge to ingest data from across these disparate systems and sources and then integrate and maintain it in a unified, current, and clean data image across the enterprise. The C3 AI® Platform – our software platform for developing, deploying, and operating enterprise-scale AI applications – is designed to address these challenges. Thanks to its model-driven architecture, pre-built connectors, and rich library of enterprise object models, the C3 AI Platform significantly accelerates and simplifies data integration across disparate systems. The unified data image is persisted on the C3 AI Platform and is kept current to serve as the foundation for a scalable enterprise AI strategy.
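To make the idea of a unified, current data image more concrete, here is a minimal Python sketch. It is not the C3 AI Platform API; the function name `unify_records`, the source names, and the record fields (`id`, `updated_at`, and so on) are all hypothetical. It assumes each source system exposes records keyed by a shared entity ID, and it keeps, for every field, the value from the most recently updated record.

```python
from datetime import datetime


def unify_records(sources):
    """Merge records from several sources into one record per entity ID.

    `sources` maps a (hypothetical) source name to a list of dicts. Each dict
    has an "id", an ISO-8601 "updated_at" timestamp, and any other fields.
    For each field, the value with the latest timestamp wins.
    """
    latest = {}  # entity id -> {field: (timestamp, value)}
    for records in sources.values():
        for record in records:
            ts = datetime.fromisoformat(record["updated_at"])
            fields = latest.setdefault(record["id"], {})
            for key, value in record.items():
                if key in ("id", "updated_at") or value is None:
                    continue
                if key not in fields or ts > fields[key][0]:
                    fields[key] = (ts, value)
    return {
        entity_id: {key: value for key, (_, value) in fields.items()}
        for entity_id, fields in latest.items()
    }


# Illustrative data only: two source systems describing the same asset.
sources = {
    "erp": [
        {"id": "pump-1", "updated_at": "2024-01-05T00:00:00",
         "site": "Plant A", "status": "active"},
    ],
    "sensors": [
        {"id": "pump-1", "updated_at": "2024-02-01T00:00:00",
         "status": "degraded", "temperature_c": 71.5},
    ],
}
unified = unify_records(sources)
print(unified["pump-1"])
```

A real integration layer would also have to handle schema mapping, conflicting identifiers, and incremental updates, which this sketch leaves out.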

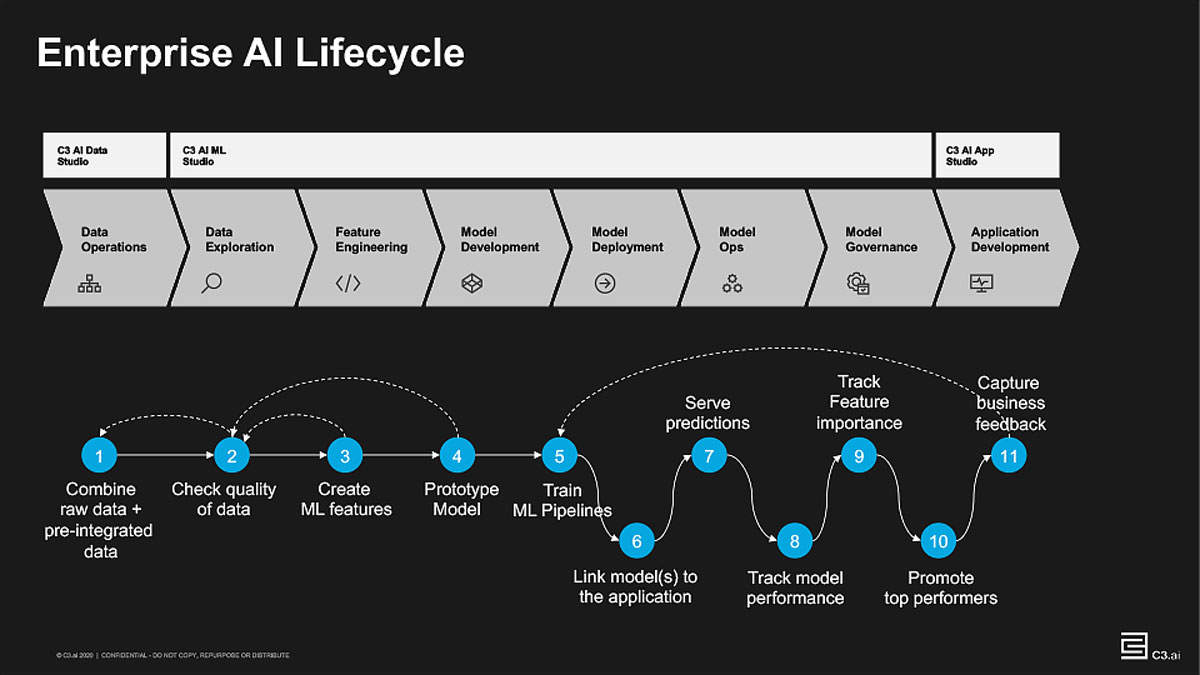

The next important step in the enterprise AI development lifecycle is ML model development. Using the unified data image, data science teams start with early data exploration and feature engineering efforts to identify relevant signals to feed into AI and ML models. They then use these high-potential signals and machine learning features for model prototyping, training, and ongoing development.
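As a small illustration of feature engineering, the following Python sketch derives two simple features from a series of sensor readings: a trailing rolling mean and the change from the previous reading. The function name `rolling_features`, the window size, and the sample temperatures are assumptions made for this example, not something prescribed by any particular platform.

```python
from statistics import mean


def rolling_features(readings, window=3):
    """Return one feature dict per reading, computed over a trailing window."""
    features = []
    for i, value in enumerate(readings):
        # Trailing window: up to `window` readings ending at position i.
        recent = readings[max(0, i - window + 1): i + 1]
        features.append({
            "value": value,
            "rolling_mean": mean(recent),
            # Change since the previous reading; 0.0 for the first reading.
            "delta": value - readings[i - 1] if i > 0 else 0.0,
        })
    return features


# Illustrative temperature readings for a single piece of equipment.
temperatures = [70.0, 71.0, 75.0, 80.0]
features = rolling_features(temperatures, window=3)
for row in features:
    print(row)
```

Features like these are candidates only; whether they carry useful signal is something the data exploration step has to establish.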

Model development is the most iterative phase of the enterprise AI application lifecycle. Data science teams will frequently develop a wide range of models, experiment with different machine learning features, test multiple modeling techniques, and employ a range of third-party libraries (e.g., Keras, TensorFlow, PyTorch), languages (e.g., R, Python, JavaScript), and services (e.g., Jupyter) as they try to develop the best performing machine learning models.
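The experiment loop described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the candidate "models" are plain threshold rules standing in for trained models, the validation data is made up, and F1 score is just one possible selection metric. The names `select_best`, `f1_score`, and `precision_recall` are hypothetical helpers defined here.

```python
def precision_recall(predictions, labels):
    """Compute precision and recall for boolean predictions and labels."""
    pairs = list(zip(predictions, labels))
    tp = sum(1 for p, y in pairs if p and y)
    fp = sum(1 for p, y in pairs if p and not y)
    fn = sum(1 for p, y in pairs if not p and y)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def f1_score(predictions, labels):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    precision, recall = precision_recall(predictions, labels)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def select_best(candidates, inputs, labels):
    """Score every candidate on held-out data and return the best by F1."""
    scores = {
        name: f1_score([model(x) for x in inputs], labels)
        for name, model in candidates.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


# Stand-in candidates: flag a failure risk above a temperature threshold.
candidates = {
    "threshold_75": lambda t: t > 75,
    "threshold_85": lambda t: t > 85,
}
validation_inputs = [70, 78, 82, 88, 90]
validation_labels = [False, False, True, True, True]

best_name, scores = select_best(candidates, validation_inputs, validation_labels)
print(best_name, scores)
```

In practice each candidate would be a model trained with a library such as those listed above, and the comparison would be repeated as features and techniques change.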

An enterprise AI platform needs to address the iterative nature of model development and the wide-ranging needs of development and data science teams. The C3 AI Platform offers a range of code, low-code, and no-code options for ML development, along with unmatched flexibility and an open platform for developers and data scientists, backed by a rich library of third-party integrations. These include a native Jupyter service, multi-language support (R, Python, JavaScript), Visual Studio Code, and integrations with commonly used libraries such as TensorFlow, Keras, Prophet, SciPy, and more.

The remaining development steps are similar to a traditional software development workflow. Developers define the application logic, set up critical workflows, define alerts, and develop the user interface and related APIs to serve users and embed the application into target business processes. To help accelerate application development, the C3 AI Integrated Development Studio, or C3 AI IDS, offers an intuitive low-code / no-code development environment and a rich library of components and building blocks for developers. Developers can use the drag-and-drop visual canvas to define application logic, create alerts, build workflows, and develop the user interface in a single cohesive environment.

Unlike typical enterprise software development, enterprise AI application development requires a high degree of collaboration across different teams, including data scientists, data engineers, developers, and subject matter experts. Due to the highly iterative nature of development, cross-functional teams need to collaborate across the entire development lifecycle – including data integration and exploration, feature engineering, model prototyping, and UI development – to build a high-performance enterprise AI application and ensure adoption across business users.

Figure 1: Enterprise AI application development lifecycle is highly iterative

Deployment and Operations

The next major phase in the enterprise AI application lifecycle is deployment. Production deployment is significantly more complex for an enterprise AI application than for typical rules-based software. A single enterprise AI application typically includes multiple machine learning models (sometimes tens of thousands) and may require complex deployment schemas. An enterprise AI platform should support different deployment strategies, such as champion-challenger deployments, randomized A/B tests, or shadow models, to test competing modeling approaches and ensure continuous performance.
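The deployment strategies named above can be illustrated with a small Python sketch. It is a simplified illustration, not how any specific platform implements them: the `ModelRouter` class, its parameters, and the threshold "models" are hypothetical. In shadow mode the champion always serves the request while the challenger's output is only logged; otherwise a random share of traffic is served by the challenger, as in a randomized A/B test.

```python
import random


class ModelRouter:
    """Routes scoring requests between a champion and a challenger model."""

    def __init__(self, champion, challenger, challenger_share=0.1,
                 shadow=False, seed=None):
        self.champion = champion
        self.challenger = challenger
        self.challenger_share = challenger_share  # fraction of A/B traffic
        self.shadow = shadow
        self.rng = random.Random(seed)
        self.log = []  # one entry per request, for later comparison

    def predict(self, x):
        if self.shadow:
            # Shadow mode: champion serves; challenger output is only logged.
            served = self.champion(x)
            self.log.append({"input": x, "served_by": "champion",
                             "served": served, "shadow": self.challenger(x)})
            return served
        # A/B mode: a random share of requests is served by the challenger.
        use_challenger = self.rng.random() < self.challenger_share
        model = self.challenger if use_challenger else self.champion
        served = model(x)
        self.log.append({"input": x,
                         "served_by": "challenger" if use_challenger else "champion",
                         "served": served})
        return served


# Stand-in models: flag equipment risk above a temperature threshold.
champion = lambda t: t > 80
challenger = lambda t: t > 75

shadow_router = ModelRouter(champion, challenger, shadow=True)
shadow_result = shadow_router.predict(78)

ab_router = ModelRouter(champion, challenger, challenger_share=0.2, seed=7)
ab_results = [ab_router.predict(t) for t in (70, 78, 90)]
print(shadow_result, shadow_router.log[0]["shadow"], ab_router.log)
```

The logged entries are what makes the comparison possible: once actual outcomes are known, the champion and challenger can be scored on the same requests before a promotion decision is made.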

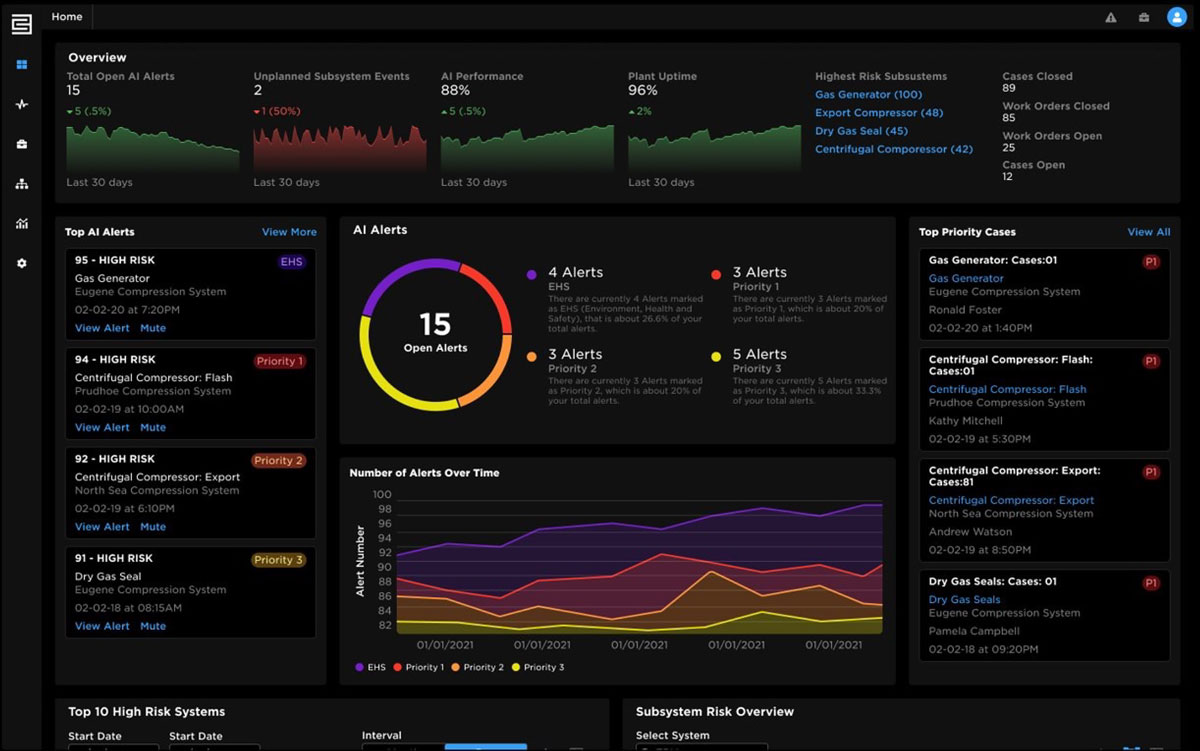

Once in production, AI and ML models generate ongoing inferences (e.g., predictions, forecasts, classifications) based on new data and trigger alerts or downstream actions. An enterprise AI platform should support continuous analytic processing, manage the inference workload demanded by the business use case, and offer a flexible distributed architecture to support changing requirements (such as data volume, model inference frequencies, or number of end users).

Unlike typical enterprise software, enterprise AI applications require much more attention and ongoing rigorous monitoring once in production. Model performance (defined as prediction accuracy, precision, or recall) generally doesn’t stay constant and will decay over time, a phenomenon called model drift. The importance of machine learning features might change due to changes in the underlying data, and business requirements might also evolve over time. To sustain a high level of performance, an enterprise AI platform should offer a comprehensive ML Ops framework to monitor model performance, detect model drift, track importance of machine learning features, capture business feedback from end users, and trigger model re-training automatically.
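A minimal sketch of this kind of monitoring, in Python, might look as follows. It assumes that ground-truth outcomes eventually become available for each prediction, and it uses rolling accuracy as the only performance measure. The `DriftMonitor` class, the window size, and the tolerance are hypothetical choices for illustration, not a description of any specific ML Ops framework.

```python
from collections import deque


class DriftMonitor:
    """Tracks rolling accuracy and flags when it falls below a baseline."""

    def __init__(self, baseline_accuracy, window=100, tolerance=0.05):
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        # Only the most recent `window` outcomes are kept.
        self.outcomes = deque(maxlen=window)

    def record(self, predicted, actual):
        """Store whether a prediction matched the observed outcome."""
        self.outcomes.append(predicted == actual)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Wait for a full window so a few early errors do not trigger retraining.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline_accuracy - self.tolerance


monitor = DriftMonitor(baseline_accuracy=0.9, window=10, tolerance=0.05)

# Nine correct predictions and one miss: accuracy matches the baseline.
for predicted, actual in [(1, 1)] * 9 + [(1, 0)]:
    monitor.record(predicted, actual)
healthy = monitor.needs_retraining()

# Three more misses push rolling accuracy well below the tolerated level.
for _ in range(3):
    monitor.record(1, 0)
drifted = monitor.needs_retraining()
print(healthy, drifted, monitor.rolling_accuracy())
```

In a production setting the retraining flag would typically feed an automated pipeline, and the same pattern could be extended to precision, recall, or shifts in feature importance.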

Lastly, each organization has unique deployment requirements defined by its underlying cloud strategy, country-specific regulation, and security requirements. An enterprise AI application might need to use data stored in a private cloud, invoke microservices from a third party, or publish insights to another public cloud. An enterprise AI platform should offer maximum deployment flexibility and support multi-cloud, hybrid-cloud, private-cloud, and edge deployments to address varying requirements across the enterprise.

Maintenance

Enterprise AI applications are dynamic and need ongoing maintenance and modifications. As new data becomes available, new modeling techniques emerge, and business problems evolve, data science and development teams need to continuously maintain and enhance their enterprise AI applications.

An AI model can only be as good as the data it was trained and developed on. Therefore, a common enhancement for any enterprise AI application is expanding the data foundation it was built on. To extend the existing data model, data engineers and data scientists need to connect to the new data stores, persist data on the enterprise AI platform, define new entities and interdependencies between the existing data model objects, and set up continuous data ingestion for production deployments.

Additional data elements also require revisiting the model development process described earlier. New machine learning features can be tested to improve the performance of existing models or to develop new algorithms and models. The resulting high-performing models can then be deployed to production to complement or replace the older models.

Lastly, user interface and application logic are also dynamic and require modifications and extensions over time. As new user personas are added, user requirements evolve, or business rules change, the enterprise AI application needs to adapt by extending or modifying the user interface and application logic.

Figure 2: C3 AI Reliability user interface presents key metrics and highlights impending process and equipment risks

Enterprise AI applications pose many challenges compared to traditional software, but they offer outsized benefits in return. As enterprises increasingly experience these challenges and enjoy the benefits of enterprise AI applications, the need for a new approach and a new technology stack is becoming increasingly clear. The C3 AI Platform presents an integrated approach to an effective enterprise AI strategy and is the only comprehensive platform available to develop, deploy, and operate enterprise AI applications.

About the author

Turker Coskun is a group manager of product marketing at C3 AI where he leads a team of product marketing managers to define, execute, and continuously improve commercial and go-to-market strategies for C3 AI Applications. Turker holds an MBA from Harvard Business School and a bachelor of science in electrical engineering from Bilkent University in Turkey. Prior to C3 AI, Turker was an Engagement Manager at McKinsey’s San Francisco office.