CHARM your time series — and let the insights charm you back

By Utsav Dutta, Senior Data Scientist, C3 AI; Sina Pakazad, Vice President, Data Science, C3 AI; Henrik Ohlsson, Chief Data Scientist, Data Science, C3 AI

Time series data is the backbone of modern systems — capturing the behavior of machines, infrastructure, markets, and more. But making sense of this data has remained stubbornly task-specific: one model for forecasting, another for anomaly detection, none of them reusable across domains.

At C3 AI, we set out to change that.

In our first blog, we asked what it would take to build a foundation model for time series — one as general purpose and transformative as those reshaping language and vision. In our second blog, we explored how such a model could power decision systems that retrieve, compare, and explain what is truly needed for informed decision making.

Now, we introduce CHARM, the Channel-Aware Representation Model. CHARM is a general-purpose time series embedding model that transforms messy multivariate sensor streams into dense, structured representations that are ready to be used for forecasting, anomaly detection, retrieval, and more. We developed CHARM to answer one question: How do you build a reusable, interpretable representation of time series data that works across domains, tasks, and configurations?

In this post, we’ll walk through how CHARM works, what sets it apart, and how it redefines what’s possible in time series modeling.

Understanding embeddings

Imagine a seasoned physician reviewing a patient’s vital signs. She is not memorizing every heartbeat or data point. Instead, she builds a mental model, a high-level representation, that lets her reason about what is happening, what might come next, and whether anything is out of the ordinary. That is the role of an embedding.

CHARM does this for time series sensor data. It monitors how a system evolves across channels and over time, and compresses that behavior into a meaningful, task-agnostic vector. This embedding captures the essential dynamics of the system without overfitting to a single task or dataset. Once learned, it can be reused to forecast a variable, detect anomalies, or retrieve similar historical behavior, all with minimal to no additional training.
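
Retrieving similar historical behavior typically reduces to a nearest-neighbor search over embeddings. The sketch below assumes the embeddings have already been computed: the random vectors are hypothetical stand-ins for CHARM outputs, and cosine similarity is our illustrative choice of metric, not a documented CHARM API.

```python
import numpy as np


def cosine_top_k(query: np.ndarray, bank: np.ndarray, k: int = 3) -> np.ndarray:
    """Return indices of the k rows of `bank` most similar to `query` by cosine similarity."""
    # Normalize so that a dot product equals cosine similarity.
    q = query / np.linalg.norm(query)
    b = bank / np.linalg.norm(bank, axis=1, keepdims=True)
    scores = b @ q
    # Sort by descending similarity and keep the top k indices.
    return np.argsort(scores)[::-1][:k]


# Hypothetical stand-ins for embeddings of 100 historical windows (64-dim each).
rng = np.random.default_rng(0)
history = rng.normal(size=(100, 64))

# A new window whose embedding lies close to historical window 42.
query = history[42] + 0.05 * rng.normal(size=64)

matches = cosine_top_k(query, history, k=3)
print(matches)  # window 42 is expected to rank first
```

Because the embedding is task-agnostic, the same stored vectors could serve this lookup, a forecasting head, or an anomaly score without recomputation.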

What makes CHARM different

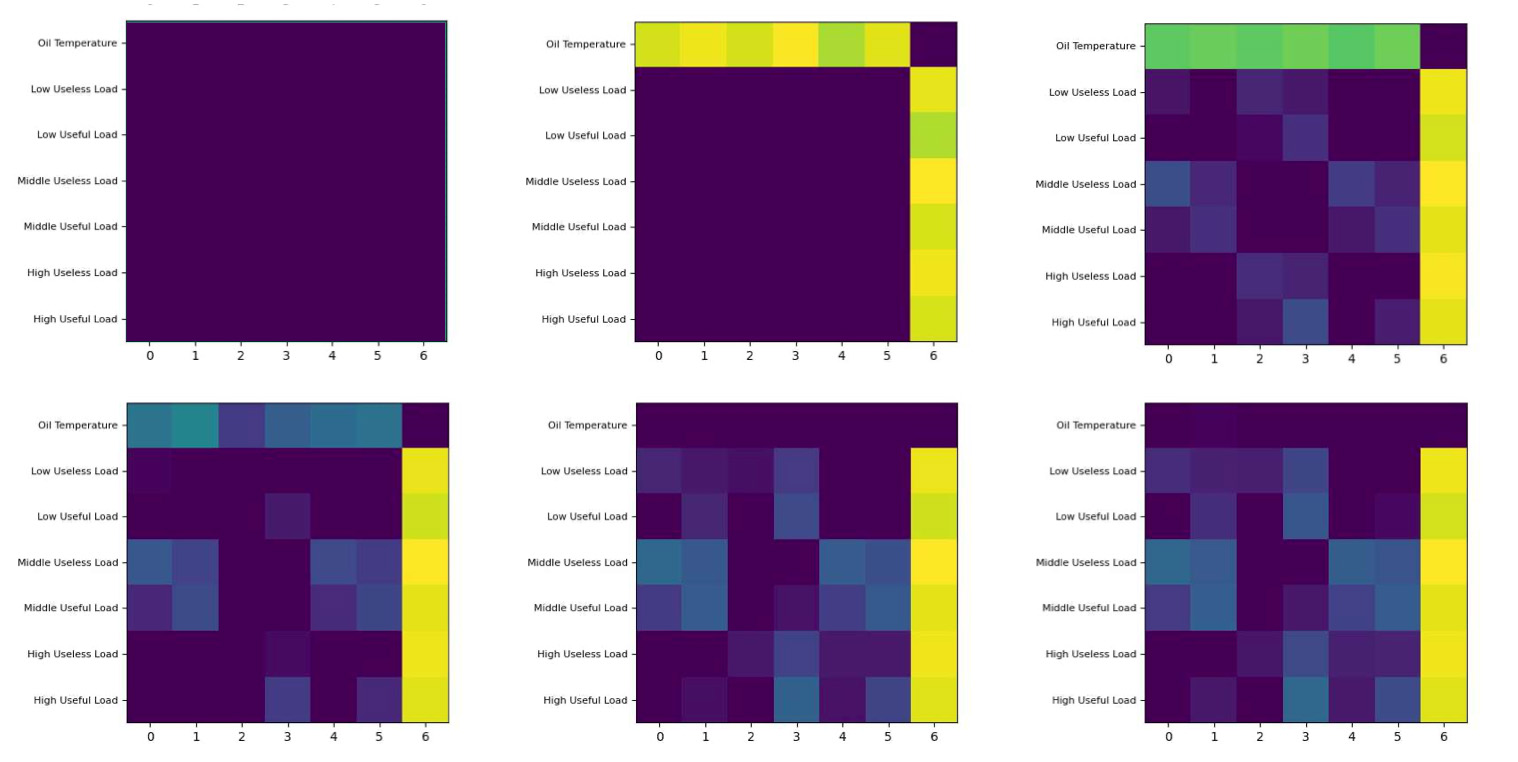

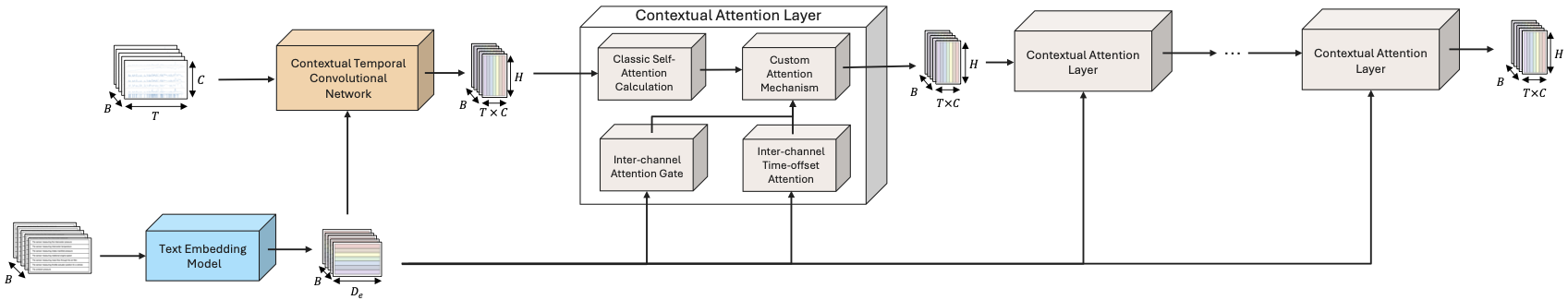

Figure 1: CHARM architecture. CHARM fuses sensor signals with their textual descriptions to produce structured embeddings. Contextual convolutions and attention layers enable the model to reason about each channel’s role and its interactions, capturing both temporal dynamics and cross-channel semantics.

CHARM combines convolutional neural networks and transformers (see Figure 1 for a high-level view of the architecture) and builds on recent advances in self-supervised learning, adapted specifically for multivariate time series. The first core innovation is that CHARM does not treat each sensor channel as just a stream of numbers; it also considers what that sensor is measuring or detecting. Each channel is paired with a textual description such as “Turbine Vibration,” “Rotor RPM,” or “Compressor Discharge Pressure.” These natural-language descriptions are embedded and used to guide the model’s internal convolutions and attention layers. This gives CHARM an awareness of each channel’s role and identity in the context of the accompanying channels, not just its values.
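
One common way to let a text description steer a convolution is FiLM-style conditioning, where the description embedding produces a per-channel scale and shift for a shared kernel. This is a minimal sketch under that assumption, not CHARM’s published mechanism. `toy_text_embedding` is a hypothetical placeholder for a real sentence encoder, and all weights are random.

```python
import zlib

import numpy as np

EMB_DIM = 16  # size of the (toy) description embedding
KERNEL = 5    # temporal kernel width


def toy_text_embedding(description: str) -> np.ndarray:
    """Hypothetical stand-in for a sentence encoder: a deterministic pseudo-random vector per string."""
    rng = np.random.default_rng(zlib.crc32(description.encode("utf-8")))
    return rng.normal(size=EMB_DIM)


class TextConditionedConv:
    """Depthwise 1-D convolution whose kernel is modulated per channel by that channel's description.

    FiLM-style scale/shift on a shared base kernel: an illustrative assumption only.
    """

    def __init__(self, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.base_kernel = rng.normal(size=KERNEL)                # shared by all channels
        self.w_scale = 0.1 * rng.normal(size=(EMB_DIM, KERNEL))  # text -> kernel scale
        self.w_shift = 0.1 * rng.normal(size=(EMB_DIM, KERNEL))  # text -> kernel shift

    def __call__(self, x: np.ndarray, descriptions: list[str]) -> np.ndarray:
        # x has shape (channels, time), with one description per channel.
        assert x.shape[0] == len(descriptions)
        out = np.empty((x.shape[0], x.shape[1] - KERNEL + 1))
        for c, desc in enumerate(descriptions):
            e = toy_text_embedding(desc)
            kernel = self.base_kernel * (1.0 + e @ self.w_scale) + e @ self.w_shift
            # 'valid' mode: output length is T - KERNEL + 1.
            out[c] = np.convolve(x[c], kernel, mode="valid")
        return out


conv = TextConditionedConv()
signals = np.random.default_rng(1).normal(size=(3, 50))
names = ["Turbine Vibration", "Rotor RPM", "Compressor Discharge Pressure"]
features = conv(signals, names)
print(features.shape)  # (3, 46)
```

Feeding identical readings with a different description yields different features, which is the property the paragraph describes: the layer sees what a channel measures, not only its values.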

The second innovation lies in how CHARM models relationships between channels. In a real-world system, sensors interact: pressure changes with temperature, current lags behind voltage, and heart rate often rises with fever. CHARM includes custom attention mechanisms that learn which channels influence each other, and with what time offset. These interdependencies are sparse, asymmetric, and interpretable. The result is a learned graph of channel interactions that adapts to the system it is observing. Unlike models that passively absorb correlations, CHARM’s architecture is designed to resist spurious links and instead highlight relationships that are more likely to reflect underlying causality.
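
A sparse, asymmetric, lagged interaction graph can be represented with two C×C arrays: a gate for how strongly channel i reads from channel j, and the time offset at which it reads. The function below is a hypothetical illustration of that data structure with hand-set values. In CHARM the interactions are learned by attention, and we do not claim this is its exact formulation.

```python
import numpy as np


def lagged_channel_mixing(x: np.ndarray, gates: np.ndarray, lags: np.ndarray) -> np.ndarray:
    """Hypothetical lagged, gated channel mixing with non-negative integer lags.

    y[i, t] = sum_j gates[i, j] * x[j, t - lags[i, j]], with zeros before the series starts.
    gates[i, j] is how strongly channel i reads from channel j and need not equal gates[j, i].
    """
    num_channels, length = x.shape
    y = np.zeros_like(x, dtype=float)
    for i in range(num_channels):
        for j in range(num_channels):
            g, lag = gates[i, j], int(lags[i, j])
            if g == 0.0 or lag >= length:
                continue  # sparse: absent edges (or lags beyond the window) contribute nothing
            y[i, lag:] += g * x[j, : length - lag]
    return y


# Channels: 0 = load current, 1 = oil temperature (hand-set values, for illustration only).
gates = np.array([[0.0, 0.0],    # load current reads from nothing
                  [0.8, 0.0]])   # oil temperature reads from load current...
lags = np.array([[0, 0],
                 [3, 0]])        # ...with a 3-step delay

x = np.zeros((2, 10))
x[0, 2] = 1.0                    # a current spike at t = 2
y = lagged_channel_mixing(x, gates, lags)
print(np.nonzero(y))             # the only response is on channel 1 at t = 5
```

Skipping zero gates is what makes sparsity cheap, and keeping `gates[i, j]` and `gates[j, i]` independent is what allows the asymmetry the paragraph describes.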

Finally, CHARM is trained with a JEPA-style (Joint-Embedding Predictive Architecture) self-supervised objective. Rather than predicting raw future values, CHARM learns to predict representations of future segments, essentially predicting how the system will behave rather than exactly what it will do. Because the loss is computed in representation space, the model is not forced to reproduce unpredictable noise, which makes this approach more robust and better suited for generalization.
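
The core of a JEPA-style objective is that the loss compares a predicted embedding with the embedding of the actual future segment, produced by a target encoder that receives no gradients and is typically updated as an exponential moving average (EMA) of the context encoder. The sketch below assumes linear encoders, unit-normalized embeddings, and a toy sine series; only the predictor is trained, with a hand-derived gradient, to keep it short. It is not CHARM’s training code.

```python
import numpy as np

rng = np.random.default_rng(0)
W, D = 32, 8  # window length and embedding size (illustrative choices)

# Toy series: a noisy sine wave. Context = first window, target = the window that follows.
t = np.arange(2 * W)
series = np.sin(0.3 * t) + 0.1 * rng.normal(size=t.size)
context, target = series[:W], series[W:]

# Linear encoders stand in for CHARM's networks (assumption, for illustration only).
enc_context = rng.normal(size=(D, W)) / np.sqrt(W)
enc_target = enc_context.copy()  # target encoder starts as a copy of the context encoder
predictor = np.eye(D)


def encode(weights: np.ndarray, window: np.ndarray) -> np.ndarray:
    z = weights @ window
    return z / np.linalg.norm(z)  # unit-normalized embedding


def jepa_loss(pred: np.ndarray, z_target: np.ndarray) -> float:
    # The error is measured between embeddings, never between raw future values.
    return float(np.mean((pred - z_target) ** 2))


def ema_update(target_w: np.ndarray, online_w: np.ndarray, momentum: float = 0.99) -> np.ndarray:
    # The target encoder trails the context encoder as an exponential moving average.
    return momentum * target_w + (1.0 - momentum) * online_w


lr = 1.0
losses = []
z_c = encode(enc_context, context)
z_t = encode(enc_target, target)  # treated as a constant target ("stop-gradient")
for _ in range(20):
    pred = predictor @ z_c
    losses.append(jepa_loss(pred, z_t))
    # Gradient of the mean squared error with respect to the predictor matrix.
    grad = (2.0 / D) * np.outer(pred - z_t, z_c)
    predictor -= lr * grad

# A full setup would also backpropagate into enc_context and then refresh the target
# encoder by EMA; a small random perturbation stands in for that update here.
new_context_w = enc_context + 0.01 * rng.normal(size=enc_context.shape)
enc_target = ema_update(enc_target, new_context_w)

print(f"loss: {losses[0]:.4f} -> {losses[-1]:.6f}")
```

The slow-moving target encoder is what keeps the regression target stable while the context encoder learns; without it, predicting one's own embeddings can collapse to a trivial constant.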

Interpretability comes standard

One of the key features of the model is that you can see CHARM think. Its learned channel gating matrices are not opaque tensors; they are readable. You can visualize them, trace dependencies, and see how cross-channel influences evolve over time and over the course of training. By analyzing attention weights and gating structures, CHARM produces interpretable heatmaps showing which channels attend to which others.

For example, consider a typical power transformer. Sensors monitor variables such as load current, ambient temperature, input voltage, and oil temperature. In a healthy system, oil temperature typically behaves as a dependent variable: it rises in response to increased current or external heat but does not directly affect those inputs. When trained on such multivariate signals, CHARM naturally learns this asymmetry: oil temperature attends to the other variables but is not attended to in return. That is not a coincidence; it is a data-driven reflection of physical causality (see Figure 2 for a visual representation).

These attention patterns do more than validate the model’s reasoning; they also give engineers and operators interpretable insight into system dynamics, revealing which variables drive change and which respond to it.
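
Given a channel-to-channel attention matrix, the driver/responder reading described above can be computed by comparing how much each channel attends to others with how much others attend to it. The matrix below is made up to mirror the transformer example and is not CHARM output; the asymmetry score is a simple heuristic of ours, not a CHARM metric.

```python
import numpy as np

channels = ["load_current", "ambient_temp", "input_voltage", "oil_temp"]

# Hypothetical attention matrix, invented for illustration (not CHARM output).
# attn[i, j] = how strongly channel i attends to channel j; each row sums to 1.
attn = np.array([
    [0.70, 0.05, 0.25, 0.00],
    [0.05, 0.90, 0.05, 0.00],
    [0.20, 0.05, 0.75, 0.00],
    [0.35, 0.30, 0.05, 0.30],
])

off_diag = attn - np.diag(np.diag(attn))  # ignore self-attention
attends_to = off_diag.sum(axis=1)         # how much each channel reads from the others
attended_by = off_diag.sum(axis=0)        # how much the others read from each channel
asymmetry = attends_to - attended_by      # heuristic: > 0 looks like a responder, < 0 like a driver

for name, score in sorted(zip(channels, asymmetry), key=lambda p: -p[1]):
    print(f"{name:>14s}  {score:+.2f}")
```

With these values, oil temperature scores +0.70 and receives no attention from any other channel, matching the asymmetry described for the transformer.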

How it performs and why it matters

CHARM has been evaluated across dozens of public benchmarks and real-world datasets spanning a range of critical tasks. In classification, it outperforms specialized models across a suite of 15 multivariate tasks. In anomaly detection, it achieves state-of-the-art performance, well above traditional methods. In forecasting, CHARM often reduces error by more than half compared to strong baselines, even in long-horizon scenarios.

But the real value of CHARM is not just in beating benchmarks. It is in what it unlocks for enterprise applications:

- Flexibility across domains: A single pretrained CHARM model can be used across manufacturing, healthcare, energy, and finance. There’s no need to redesign the architecture or retrain from scratch for every use case.

- Interpretable outputs: Operators can visualize which channels are influencing model decisions. That is especially important in regulated environments, where black-box predictions are not acceptable.

- Data-efficient fine-tuning: Downstream models, often just a small linear head, can be trained on a few hundred labeled examples, because the embeddings already capture the system’s structure.

- Adaptability: New sensors or updated configurations? Just describe them. CHARM is equivariant to channel order and uses the text descriptions to generalize to channels it has not seen before.
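
Channel-order equivariance follows naturally when each channel is a token identified by its description rather than by its position, and all weights are shared across tokens. The sketch below assumes a single self-attention layer over channel tokens with random weights and a hypothetical `describe` text encoder; it illustrates the property and is not CHARM’s architecture. It checks that permuting the channels permutes the outputs in the same way, and that a new sensor only needs a description.

```python
import zlib

import numpy as np

D = 8
rng = np.random.default_rng(0)
# Query/key/value weights are shared by every channel token; nothing is tied to a channel slot.
W_q, W_k, W_v = (rng.normal(size=(D, D)) / np.sqrt(D) for _ in range(3))


def describe(text: str) -> np.ndarray:
    """Hypothetical stand-in for a text encoder: a deterministic pseudo-random vector per description."""
    return np.random.default_rng(zlib.crc32(text.encode("utf-8"))).normal(size=D)


def channel_attention(features: np.ndarray, descriptions: list[str]) -> np.ndarray:
    # One token per channel: its signal summary plus the embedding of its description.
    tokens = features + np.stack([describe(d) for d in descriptions])
    q, k, v = tokens @ W_q, tokens @ W_k, tokens @ W_v
    scores = q @ k.T / np.sqrt(D)
    scores -= scores.max(axis=1, keepdims=True)  # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v


names = ["Load Current", "Ambient Temperature", "Oil Temperature"]
feats = rng.normal(size=(3, D))  # hypothetical per-channel signal summaries
out = channel_attention(feats, names)

# Reordering the channels (with their descriptions) reorders the output rows identically.
perm = [2, 0, 1]
out_perm = channel_attention(feats[perm], [names[i] for i in perm])
print(np.allclose(out_perm, out[perm]))  # True

# A new sensor needs only a description and a row of features; no weights change shape.
new_row = rng.normal(size=(1, D))
out4 = channel_attention(np.vstack([feats, new_row]), names + ["Tap Changer Position"])
print(out4.shape)  # (4, 8)
```

Because no parameter is indexed by channel position, the layer cannot depend on channel order, and the description embedding is what tells otherwise identical tokens apart.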

Where to go from here

You can explore the full model architecture, training pipeline, ablations, and results in our latest article. We have also integrated CHARM into the C3 Agentic AI Platform, and future platform releases will allow developers to embed, retrieve, and fine-tune via APIs. In our next post, we will explore how to build on CHARM to support multi-task learning, that is, a single model that can handle forecasting, classification, anomaly detection, and more. We will share the architectural and training innovations that make this possible, and how this second stage of our foundation model vision moves us closer to universal, task-flexible time series intelligence.

About the Authors

Utsav Dutta is a Senior Data Scientist at C3 AI. He holds a bachelor’s degree in mechanical engineering from IIT Madras and a master’s degree in artificial intelligence from Carnegie Mellon University. He has a strong background in applied statistics and machine learning and has authored publications at IEEE and AAAI conferences in the fields of machine learning in neuroscience and reinforcement learning for supply chain optimization. He has also co-authored several patents with C3 AI that expand its product offerings and further integrate generative AI into core business processes.

Sina Khoshfetrat Pakazad is the Vice President of Data Science at C3 AI, where he leads research and development in Generative AI, machine learning, and optimization. He holds a Ph.D. in Automatic Control from Linköping University and an M.Sc. in Systems, Control, and Mechatronics from Chalmers University of Technology. With experience at Ericsson, Waymo, and C3 AI, he has contributed to AI-driven solutions across healthcare, finance, automotive, robotics, aerospace, telecommunications, supply chain optimization, and process industries. His recent research has been published in leading venues such as ICLR and EMNLP, focusing on multimodal data generation, instruction-following and decoding from large language models, and distributed optimization. Beyond this, he has co-invented patents on enterprise AI architectures and predictive modeling for manufacturing processes, reflecting his impact on both theoretical advancements and real-world AI applications.

Henrik Ohlsson is the Vice President and Chief Data Scientist at C3 AI. Before joining C3 AI, he held academic positions at the University of California, Berkeley, the University of Cambridge, and Linköping University. With over 70 published papers and 30 issued patents, he is a recognized leader in the field of artificial intelligence, with a broad interest in AI and its industrial applications. He is also a member of the World Economic Forum, where he contributes to discussions on the global impact of AI and emerging technologies.