While interest in AI among business leaders has never been higher, fueled in large part by the launch of ChatGPT in November 2022, most companies are struggling to deploy AI across their organizations. In a recent survey of senior data and technology executives, only 26% of respondents said they have AI systems in widespread production. The question is why, especially considering that AI can increase operational efficiency, reduce costs, and open new sources of growth.

The answer largely lies in how AI applications are architected.

Most companies build custom AI applications using open-source software components and microservices available from the cloud-service providers: Azure, AWS, and Google Cloud. Such AI applications require the assembly of numerous bespoke components and microservices to handle data engineering and to build and maintain AI models. As new AI technologies, such as large language models (LLMs), are introduced, they need to be integrated with existing data infrastructure and systems. Not only is custom assembly expensive and time-consuming, but it also leads to complex and brittle code that breaks easily and is hard to maintain. Building modern AI applications requires a fresh approach and a modern architecture.

A Contemporary Approach for the AI Age

A model-driven architecture (MDA) is a refinement of developments in computer programming that started with structured programming and continued through object-oriented design. This concept, also called model-driven engineering, is a software development methodology that creates conceptual models of real-world objects and software components. In a model-driven architecture, the structure and functionality of a set of objects (which together make up a model) are described at a higher level than code in any particular language.

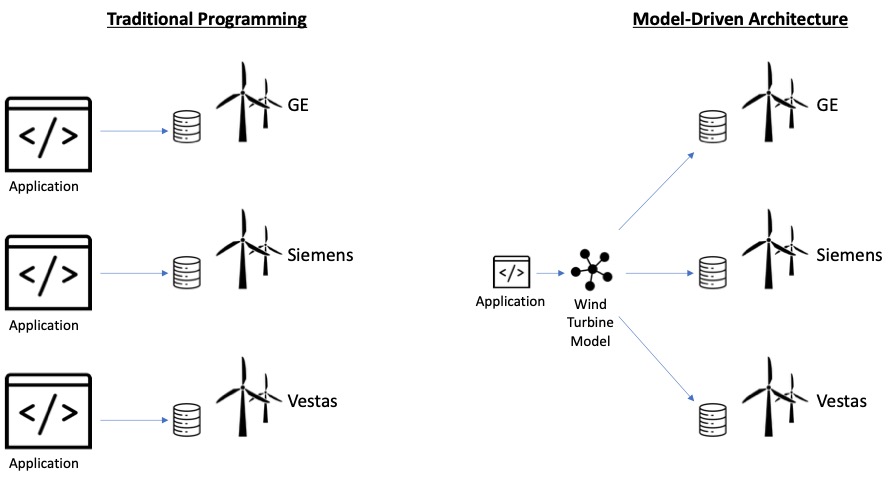

Each real-world object is a rich source of data, but its interfaces are ad hoc and manufacturer specific. Take, for example, wind turbines. Numerous manufacturers make wind turbines, and each turbine is slightly different from the next. If one were to build a system that worked only with General Electric wind turbines, all software that used the data would need to be rewritten (or at least expanded) to handle wind turbines made by Vestas. By creating a model of a wind turbine and building software that interacts with this model, instead of with individual turbines, we ensure that the code is no longer tightly coupled to the specifics of a manufacturer. Further, the wind turbine model can be put to new uses without completely rewriting the code.
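To make the idea concrete, here is a minimal Python sketch of a manufacturer-neutral turbine model. It is an illustration only, not the C3 AI Platform's API: the class names, the two vendor payload formats, and the field names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class TurbineReading:
    """Manufacturer-neutral model of one turbine measurement (hypothetical)."""
    turbine_id: str
    power_kw: float
    rotor_rpm: float


class TurbineAdapter(Protocol):
    """Anything that can translate a vendor payload into the shared model."""
    def to_reading(self, raw: dict) -> TurbineReading: ...


class VendorAAdapter:
    # Assumed payload: power in watts, rotor speed in rpm.
    def to_reading(self, raw: dict) -> TurbineReading:
        return TurbineReading(raw["id"], raw["power_w"] / 1000.0, raw["rpm"])


class VendorBAdapter:
    # Assumed payload: power in kW, rotor speed in revolutions per second.
    def to_reading(self, raw: dict) -> TurbineReading:
        return TurbineReading(raw["unit"], raw["kw"], raw["rot_hz"] * 60.0)


def total_power_kw(readings: list[TurbineReading]) -> float:
    """Application code depends only on the model, never on a vendor format."""
    return sum(r.power_kw for r in readings)


readings = [
    VendorAAdapter().to_reading({"id": "A-1", "power_w": 1_500_000, "rpm": 12.0}),
    VendorBAdapter().to_reading({"unit": "B-7", "kw": 2000.0, "rot_hz": 0.25}),
]
print(total_power_kw(readings))  # prints 3500.0
```

Supporting a third manufacturer in this sketch would mean adding one adapter; `total_power_kw` and any other code written against `TurbineReading` would stay unchanged.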

Software components such as databases, workflows, and machine-learning pipelines are much the same. A solution to a complex problem will likely use several different components from different companies, open-source projects, and homegrown systems. Creating a model for each component lets that component be described apart from its implementation, so it can be used across programming languages and in multiple applications.

Instead of creating code that directly addresses each real-world object or software component, the model-driven architecture creates models for each type of entity. Objects and components are modeled in terms of the data they produce and the operations that can be performed on them. These models can then be operated on using declarative programming instead of writing code specific to the syntax and particular implementation of each object and component.
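One way to picture declarative operations over a model is the small Python sketch below. It assumes a hypothetical `Turbine` model and a hypothetical `select` helper that accepts conditions as data; none of these names come from a real platform.

```python
import operator
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Turbine:
    """Hypothetical model: the data a turbine exposes, nothing vendor-specific."""
    turbine_id: str
    power_kw: float
    status: str


# The only operators this sketch understands.
OPERATORS = {"eq": operator.eq, "gt": operator.gt, "lt": operator.lt}


def select(objects, model, where):
    """Return the objects satisfying every (field, op, value) condition."""
    known = {f.name for f in fields(model)}
    # Validate the request against the model before touching any data.
    for field_name, op, _ in where:
        if field_name not in known:
            raise ValueError(f"{model.__name__} has no field {field_name!r}")
        if op not in OPERATORS:
            raise ValueError(f"unsupported operator {op!r}")
    return [
        obj for obj in objects
        if all(OPERATORS[op](getattr(obj, name), value) for name, op, value in where)
    ]


turbines = [
    Turbine("T-1", 1800.0, "running"),
    Turbine("T-2", 400.0, "running"),
    Turbine("T-3", 2100.0, "stopped"),
]

# A declarative request: it states what is wanted, not how to loop over it.
query = [("status", "eq", "running"), ("power_kw", "gt", 1000.0)]
print([t.turbine_id for t in select(turbines, Turbine, query)])  # prints ['T-1']
```

Because the query is plain data checked against the model's fields, the same request could in principle be issued from another language or evaluated by a different engine without changing the caller.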

An Essential Element to Design Enterprise AI Applications

A higher-level abstraction is especially important for large enterprise software systems because such systems are composed of parts written in different languages by different companies as well as by open-source projects. The structure and documentation for any single part are clumsy to access and may not even be relevant from other languages. Tight coupling to each component also causes problems as the parts change versions over time or are swapped out for different implementations. This makes software implementations brittle and causes frequent breakage.

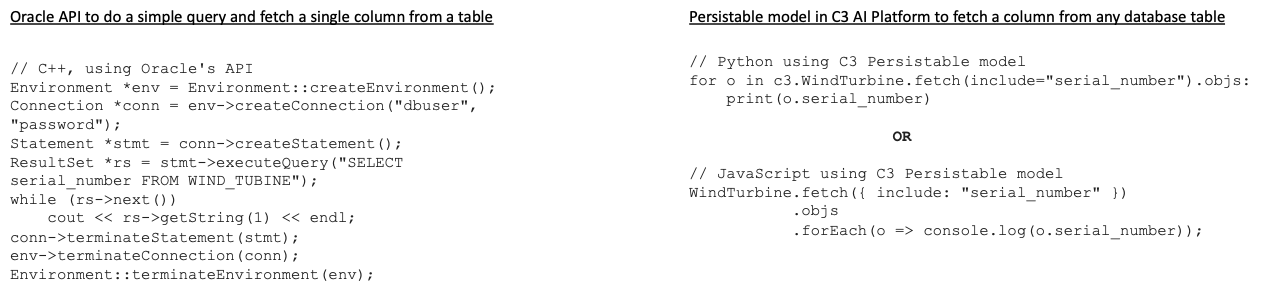

For example, the heterogeneity of data present in an enterprise system makes it impractical to custom code the relationship between each database and each consumer of data. Having software components such as databases represented by a model removes the need for custom development to use them. Code to read and write objects to a modeled database is at least standardized and at best completely transparent.
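As a rough sketch of what "standardized" reads and writes can look like, the Python example below defines a hypothetical `ObjectStore` model and two interchangeable backends, one in memory and one on SQLite. The interface and names are assumptions made for illustration and do not describe any specific product.

```python
import json
import sqlite3
from typing import Optional, Protocol


class ObjectStore(Protocol):
    """Hypothetical model of a data store: what it can do, not how it does it."""
    def put(self, key: str, obj: dict) -> None: ...
    def get(self, key: str) -> Optional[dict]: ...


class MemoryStore:
    def __init__(self) -> None:
        self._data: dict = {}

    def put(self, key: str, obj: dict) -> None:
        self._data[key] = dict(obj)

    def get(self, key: str) -> Optional[dict]:
        return self._data.get(key)


class SqliteStore:
    def __init__(self, path: str = ":memory:") -> None:
        self._conn = sqlite3.connect(path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS objects (key TEXT PRIMARY KEY, body TEXT)"
        )

    def put(self, key: str, obj: dict) -> None:
        # Objects are stored as JSON text so any dict-shaped record fits.
        self._conn.execute(
            "INSERT OR REPLACE INTO objects (key, body) VALUES (?, ?)",
            (key, json.dumps(obj)),
        )
        self._conn.commit()

    def get(self, key: str) -> Optional[dict]:
        row = self._conn.execute(
            "SELECT body FROM objects WHERE key = ?", (key,)
        ).fetchone()
        return json.loads(row[0]) if row else None


def record_inspection(store: ObjectStore, turbine_id: str, passed: bool) -> None:
    """Consumer code written once against the model, for any backend."""
    store.put(turbine_id, {"turbine_id": turbine_id, "passed": passed})


for store in (MemoryStore(), SqliteStore()):
    record_inspection(store, "T-1", True)
    print(type(store).__name__, store.get("T-1"))
```

Swapping the backend here changes one constructor call; `record_inspection` does not need to know which database sits behind the model.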

Consider wind turbines again. A wind farm operator will likely have dozens of turbines that are all collecting data in various databases and SCADA systems. Making use of that data will be nearly impossible if each source system requires custom code. Efforts to use advanced analytics, such as machine learning, to analyze wind turbine data can be stymied by the effort required to write tens of thousands of lines of code just to pull the necessary data.

Machine learning and more advanced AI techniques require data to be organized into features. This means lining up data values from different systems, with different interfaces, data values, and even units of measure. This task, called feature engineering, takes significant effort, and generally dwarfs that of applying the AI/ML technique itself. By creating a model for the data sources, feature engineering goes from a large programming task to a small declarative task.
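The shift from programming to declaration can be sketched in a few lines of Python. In this illustration each feature is described as data (source, field, and unit conversion), and one generic function lines the values up by timestamp. The source systems, field names, and the `build_features` helper are hypothetical.

```python
# Hypothetical raw data from two source systems, keyed by timestamp.
scada = {
    "2024-01-01T00:00": {"wind_mph": 10.0},
    "2024-01-01T00:10": {"wind_mph": 20.0},
    "2024-01-01T00:20": {"wind_mph": 5.0},
}
historian = {
    "2024-01-01T00:00": {"temp_f": 50.0},
    "2024-01-01T00:10": {"temp_f": 32.0},
}
SOURCES = {"scada": scada, "historian": historian}

# Unit conversions are declared once and shared by every feature.
UNIT_CONVERSIONS = {
    ("mph", "m/s"): lambda v: v * 0.44704,
    ("degF", "degC"): lambda v: (v - 32.0) * 5.0 / 9.0,
}

# The declarative part: each feature is a description, not a procedure.
FEATURES = [
    {"name": "wind_speed_ms", "source": "scada", "field": "wind_mph",
     "from": "mph", "to": "m/s"},
    {"name": "ambient_temp_c", "source": "historian", "field": "temp_f",
     "from": "degF", "to": "degC"},
]


def build_features(specs, sources):
    """Align all declared features on the timestamps every source shares."""
    shared = set.intersection(*(set(sources[s["source"]]) for s in specs))
    rows = []
    for ts in sorted(shared):
        row = {"timestamp": ts}
        for s in specs:
            convert = UNIT_CONVERSIONS[(s["from"], s["to"])]
            row[s["name"]] = convert(sources[s["source"]][ts][s["field"]])
        rows.append(row)
    return rows


for row in build_features(FEATURES, SOURCES):
    print(row)
```

Adding a feature in this sketch means appending one entry to `FEATURES`. The alignment and conversion logic is written once, which is the kind of saving the paragraph above describes.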

Most AI/ML projects flounder in the feature engineering stage because cleaning and organizing the data is done in various pieces of code that are fragile and tightly coupled to a particular data source. Defining a model for the data source or store is an essential foundation for building AI/ML applications. Additionally, modeling data stores requires that the data be clearly documented and organized from the beginning. Moreover, adding new data sources and new uses of the existing data sources becomes easy.

Model-Driven Architecture, the C3 AI Way

While the term model-driven architecture has been around for some time, its use in the C3 AI Platform is unique. The benefits of C3 AI’s proprietary model-driven architecture are many.

- The speed advantage of models. Each component within the C3 AI Platform is represented by a model, which is made of one or more “types” that are roughly equivalent to a programming language class in that they have data and behavior. These conceptual models can be reused by many applications, thereby accelerating development of new applications.

- Language-agnostic model APIs make code flexible and adaptable. The APIs are language agnostic by design, and calls between languages and deployment locations are handled transparently.

- An approach current developers will recognize. Unlike the Object Management Group (OMG) approach built on Unified Modeling Language (UML) diagrams, C3 AI’s model-driven architecture is familiar to developers who use modern programming languages, captures the nuances of each distinct business situation, and ensures that the models are dynamic pieces of code. The benefits of MDA structuring, documentation, and encapsulation are maintained without losing the development approaches and languages familiar to developers.

- Models are represented as text. LLMs can therefore read and interact with the models directly and, through them, with the data and components in the platform.

- Custom code is rarely required. Because the C3 AI Platform understands the models, a feature store can access data coming from any sort of system without the need for custom coding. Not only does this eliminate the risky effort of custom data-access programming, but it also insulates the system from changes to the specific components used. Data can come from a heterogeneous set of systems without adding complexity to components which access that data.

- A rich set of out-of-the-box AI/ML tools. The AI/ML tools that come with the C3 AI Platform can be pointed to the models defined for any real-world objects and software components that exist in the enterprise without the need for custom interface coding. This takes the vast majority of the data extraction and manipulation work out of the process and reduces cost and risk accordingly.

- Future-proofing. As the rapid emergence of LLMs has shown, the technological landscape is changing faster than ever. C3 AI’s model-driven architecture provides a way to represent any type of new or emerging LLM algorithm as a model and rapidly add its functionality to other software components as part of enterprise AI applications. Model-driven architecture can be applied to generative AI tools today and, importantly, to innovations yet to emerge.

The AI revolution has moved into hyper speed, with business and government leaders eager to take advantage of the benefits AI can produce. The benefits of representing real-world objects and software components in a model-driven architecture stretch across the functions of any large enterprise, enabling transformations of business processes that would simply not be possible (or would be highly unlikely) if custom code were required to interact with the array of systems in place throughout an organization. If change is the only constant, a model-driven architecture is the only sure path to building scalable and sustainable enterprise AI applications.