Our new agentic time series system accelerates critical operational decisions while understanding context, channeling expert knowledge, and adapting to change

By Sina Pakazad, Vice President, Data Science, C3 AI, Utsav Dutta, Data Scientist, Data Science, C3 AI, and Henrik Ohlsson, Chief Data Scientist, Data Science, C3 AI

Enterprise systems generate vast streams of time series data, yet extracting actionable, trustworthy insights from that data remains a challenge. In a previous blog post, we shared our foundational approach to solving this: foundation embedding models purpose-built for multivariate time series. These models offer a powerful new way to structure and retrieve temporal insights.

In this post, we go further. We introduce two cutting-edge systems — retrieval-augmented interpretability (RAI) and expert companion (EC) — built on these foundation models. Together, they bring interpretability, context-awareness, and continuous learning to the forefront of decision support.

Reimagining Time Series Decision Support

Before diving into technical specifics, it’s important to understand the broader goal. RAI and EC are designed to address fundamental limitations in how machine learning models are deployed and used in operational settings. These systems aim to:

- Deliver insights from time series models with enhanced retrieval mechanisms that surface relevant contextual information dynamically.

- Naturally and seamlessly codify expert knowledge, ensuring that domain expertise is integrated into model behavior for more intuitive and explainable decision making.

- Detect and adapt to regime shifts, using continuous learning to respond to changing conditions and evolving patterns in time series data.

The Challenge: Interpretability in Practice

Most time series models provide useful outputs — anomaly flags, forecasts, classifications. But without interpretability, those outputs are often difficult to act on. For example, identifying a pump failure is useful; knowing it was caused by low pressure and flow makes it actionable.

To bridge this gap, predictions are often paired with interpretability insights that help explain the reasoning behind the model’s outputs. These explanations typically take the form of feature or input contributions and are mostly generated post hoc. They are then combined with predefined rules or heuristics, often designed by domain experts, to drive decisions.

Consider, for instance, monitoring the health of a pump in which an anomaly has been identified. If the interpretability layer highlights that the anomaly is driven by unusual patterns in pressure and flow readings, this adds meaningful context. When paired with rules created by a subject matter expert (e.g., low pressure + low flow = blockage), the user is better equipped to take corrective action.
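To make this pairing concrete, here is a minimal sketch of how an expert rule like the one above might be applied on top of interpretability output. The names `AnomalyExplanation` and `apply_expert_rules`, the attribution values, and the -1/0/+1 deviation encoding are hypothetical, chosen only for illustration. Note that every new failure mode would need another hand-written rule, which is the scaling problem discussed below.

```python
from dataclasses import dataclass


@dataclass
class AnomalyExplanation:
    """Hypothetical container: an anomaly flag plus post-hoc, per-sensor explanations."""
    is_anomaly: bool
    # Contribution of each sensor to the anomaly score (e.g., from an attribution method).
    attributions: dict[str, float]
    # Direction of each sensor's deviation from normal: -1 low, 0 normal, +1 high.
    deviations: dict[str, int]


def apply_expert_rules(expl: AnomalyExplanation, top_n: int = 2) -> str:
    """Map the top contributing sensors to a diagnosis using hand-written expert rules."""
    if not expl.is_anomaly:
        return "normal operation"
    # Keep the top_n sensors with the largest attributions.
    top = sorted(expl.attributions, key=expl.attributions.get, reverse=True)[:top_n]
    drivers = {(sensor, expl.deviations.get(sensor, 0)) for sensor in top}
    # Expert rule from the text: low pressure + low flow = blockage.
    if {("pressure", -1), ("flow", -1)} <= drivers:
        return "possible blockage"
    # No rule matches: the knowledge gap must be filled manually by an expert.
    return "unexplained anomaly: escalate to an expert"
```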

However, designing effective interpretability methods and crafting the corresponding rules and heuristics often requires significant manual effort, and the work is commonly bottlenecked by the need for close collaboration with domain experts. While this approach can be highly effective, it is a major barrier to scaling and limits the ability to establish standard, repeatable, and effective best practices. Additionally, post-hoc interpretability methods are known to be fragile, lacking the consistency and robustness required for high-stakes decision making. They also tend to fall short in complex, nuanced scenarios that require subjective judgment.

Another challenge lies in the cultural and operational shift required to adopt these machine learning-based processes. Traditional decision-making in organizations has long relied on human intuition and interpersonal communication, even though such approaches may have performance limitations. Transitioning to model-driven systems demands time, organizational buy-in, and change management — often slowing down adoption despite the promise of improved outcomes.

Introducing Retrieval-Augmented Interpretability (RAI)

At the core of our new framework lies our foundation embedding model. The framework operates similarly to retrieval-augmented generation (RAG), with one crucial distinction: instead of retrieving document passages relevant to a query, it retrieves historical time periods that are similar to the time period associated with the query. We refer to this framework as retrieval-augmented interpretability (RAI). While the embedding model is central to RAI, it is just one of several key components that enable its functionality.

How RAI Works

Figure 1: RAI’s modular design enables effective and robust retrieval of past experience and lets users decide the level of retrieval sophistication they require.

As with RAG, the first step in setting up RAI involves chunking and indexing time series data — embedding each time chunk/period and storing it in a vector store.
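As a rough illustration of this chunk-and-index step, the sketch below splits a multivariate series into fixed-size windows, embeds each window, and stores the vectors in an in-memory index searched by cosine similarity. The foundation embedding model is not public, so `toy_embed` (per-channel mean and standard deviation) is only a stand-in; the window/stride scheme, `VectorStore`, and `build_index` are likewise assumptions, not C3 AI’s implementation.

```python
import math
from typing import Callable, Sequence

Vector = list[float]


def chunk_series(series: Sequence[Sequence[float]], window: int, stride: int) -> list[tuple[int, list[list[float]]]]:
    """Split a (time x channels) series into (start_index, window) chunks."""
    return [
        (start, [list(row) for row in series[start:start + window]])
        for start in range(0, len(series) - window + 1, stride)
    ]


def toy_embed(chunk: list[list[float]]) -> Vector:
    """Stand-in for the foundation embedding model: per-channel mean and std."""
    feats: Vector = []
    for c in range(len(chunk[0])):
        col = [row[c] for row in chunk]
        mean = sum(col) / len(col)
        feats += [mean, math.sqrt(sum((x - mean) ** 2 for x in col) / len(col))]
    return feats


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na, nb = math.sqrt(sum(x * x for x in a)), math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class VectorStore:
    """Minimal in-memory store: parallel lists of chunk ids and embeddings."""

    def __init__(self) -> None:
        self.ids: list[int] = []
        self.vectors: list[Vector] = []

    def add(self, chunk_id: int, vec: Vector) -> None:
        self.ids.append(chunk_id)
        self.vectors.append(vec)

    def search(self, query: Vector, k: int) -> list[tuple[int, float]]:
        """Return the k (chunk_id, similarity) pairs most similar to the query."""
        scored = [(i, cosine(query, v)) for i, v in zip(self.ids, self.vectors)]
        return sorted(scored, key=lambda t: t[1], reverse=True)[:k]


def build_index(series, window: int, stride: int,
                embed: Callable[[list[list[float]]], Vector] = toy_embed) -> VectorStore:
    """Chunk the series, embed every chunk, and index it by its start time."""
    store = VectorStore()
    for start, chunk in chunk_series(series, window, stride):
        store.add(start, embed(chunk))
    return store
```

Overlapping windows (stride smaller than window) are one common choice because they reduce the chance that an event is split across chunk boundaries; a production system would use an approximate nearest-neighbor index rather than a linear scan.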

In RAI, we differentiate between historical time periods that include informative annotations (typically curated by domain experts) and those that do not. All time chunks are stored in a general time series vector store, while annotated chunks are also stored in a dedicated annotated time series vector store. This dual vector store design enables more nuanced and targeted retrieval, allowing the system to surface both similar time periods and relevant expert annotations in response to a query. During retrieval, RAI dynamically combines results from both vector stores to compile the top-k most similar time periods along with their associated contextual annotations. Together, these vector stores and the embedding model constitute the retrieval system at the core of RAI.
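The exact rule RAI uses to combine the two stores is not described, so the following is a minimal sketch under one assumed merge strategy: take the top-k from each store, de-duplicate by chunk id, and sort by similarity, so that annotated neighbors are always surfaced even when the closest periods in the general store carry no annotation. `Store`, `Hit`, and `dual_retrieve` are hypothetical names.

```python
import math
from dataclasses import dataclass, field
from typing import Optional


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na, nb = math.sqrt(sum(x * x for x in a)), math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


@dataclass
class Store:
    """Hypothetical in-memory vector store mapping chunk id -> embedding."""
    vectors: dict[str, list[float]] = field(default_factory=dict)

    def search(self, query: list[float], k: int) -> list[tuple[str, float]]:
        scored = [(cid, cosine(query, v)) for cid, v in self.vectors.items()]
        return sorted(scored, key=lambda t: t[1], reverse=True)[:k]


@dataclass
class Hit:
    chunk_id: str
    score: float
    annotation: Optional[str]  # None for chunks without expert annotations


def dual_retrieve(query: list[float], general: Store, annotated: Store,
                  annotations: dict[str, str], k: int) -> list[Hit]:
    """Query both stores, de-duplicate by chunk id, and sort by similarity."""
    best: dict[str, float] = {}
    for cid, score in general.search(query, k) + annotated.search(query, k):
        best[cid] = max(score, best.get(cid, float("-inf")))
    ranked = sorted(best.items(), key=lambda t: t[1], reverse=True)
    return [Hit(cid, s, annotations.get(cid)) for cid, s in ranked]
```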

Once the top-k time periods are retrieved, along with their similarity scores and annotations, they are passed to the uncertainty quantification and processing module. Using non-parametric methods, this module estimates the likelihood that each annotation applies to the query period by analyzing how frequently each annotation appears among the retrieved results, with each occurrence weighted nonlinearly by its similarity to the query. This probabilistic assessment allows RAI to:

- Quantify the system’s confidence in the retrieved results by comparing the resulting annotation distribution to a uniform distribution baseline. This lets RAI identify knowledge gaps and regime shifts robustly and avoid providing misleading information to the user (more on this later).

- Re-rank the retrieved time periods based on both similarity and annotation probability, enhancing the interpretability and actionability of the output.
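The post does not specify the exact non-parametric estimator, so the following is only one plausible sketch of the three steps above. Each retrieved annotation is weighted by exp(similarity / temperature), a nonlinear kernel that lets close neighbors dominate. Confidence is the KL divergence from a uniform distribution over the n known labels, normalized by log n (equivalently, one minus the normalized entropy). Re-ranking blends similarity with annotation probability. The `temperature` and `alpha` parameters and all function names are hypothetical.

```python
import math
from collections import defaultdict
from typing import Optional

Hit = tuple[float, Optional[str]]  # (similarity, annotation or None)


def annotation_distribution(hits: list[Hit], temperature: float = 0.1) -> dict[str, float]:
    """Similarity-weighted annotation frequencies, normalized to probabilities."""
    weights: dict[str, float] = defaultdict(float)
    for sim, label in hits:
        if label is not None:
            # exp(sim / T) is a nonlinear weight: near-duplicates count far more.
            weights[label] += math.exp(sim / temperature)
    total = sum(weights.values())
    return {lab: w / total for lab, w in weights.items()} if total else {}


def confidence(dist: dict[str, float], n_labels: int) -> float:
    """KL(p || uniform) / log(n) = 1 - H(p) / log(n); 0 at uniform, 1 at one label."""
    if not dist:
        return 0.0  # no annotated neighbors at all: treat as a knowledge gap
    if n_labels <= 1:
        return 1.0
    entropy = -sum(p * math.log(p) for p in dist.values() if p > 0)
    return 1.0 - entropy / math.log(n_labels)


def rerank(hits: list[Hit], dist: dict[str, float], alpha: float = 0.5) -> list[Hit]:
    """Order hits by a blend of similarity and the probability of their annotation."""
    def score(hit: Hit) -> float:
        sim, label = hit
        return alpha * sim + (1 - alpha) * dist.get(label, 0.0)
    return sorted(hits, key=score, reverse=True)
```

A low confidence value is what would let the system say "I have not seen this before" instead of returning the nearest, but possibly irrelevant, annotation.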

These insights enable more informed downstream decision-making by both users and other components in the RAI pipeline.

To further enhance the output, RAI can also invoke our multi-task, multi-head model. By activating the relevant task-specific heads, the model can generate verbalized predictions — combining retrieved annotations and observed patterns — to offer clearer, context-rich guidance to the user.

At this stage, the enriched insights and the user’s original query can be forwarded to specialized supporting agents. These may include:

- A retrieval agent that searches unstructured internal corpora (e.g., manuals, documentation) for additional relevant context.

- A web search agent that supplements results with external information to support more comprehensive decision making.

Finally, the curated outputs from all agents are composed and refined by a large language model (LLM), which synthesizes the information and delivers a coherent, user-friendly response to the user’s query. Figure 1 illustrates this end-to-end process in detail. Because RAI is modular, individual modules can be enabled or disabled depending on the user’s needs.
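The modular control flow described above can be sketched as a pipeline in which retrieval and the final LLM composition always run and every other stage is switched on or off by configuration. The flag names, the sequential stage order, and the `(query, context) -> context` module signature are assumptions for illustration; in practice the supporting agents could just as well run in parallel.

```python
from dataclasses import dataclass
from typing import Callable

Module = Callable[[str, dict], dict]  # (query, context) -> updated context


@dataclass
class RAIConfig:
    """Hypothetical on/off switches for RAI's optional modules."""
    use_uncertainty: bool = True
    use_multihead: bool = False
    use_doc_agent: bool = False
    use_web_agent: bool = False


# Optional stages in the order they run, keyed by the config flag that enables them.
OPTIONAL_STAGES = [
    ("use_uncertainty", "uncertainty"),
    ("use_multihead", "multihead"),
    ("use_doc_agent", "doc_agent"),
    ("use_web_agent", "web_agent"),
]


def run_pipeline(query: str, config: RAIConfig, modules: dict[str, Module]) -> dict:
    """Always retrieve and compose; run each optional stage only if it is enabled."""
    context = modules["retrieve"](query, {})
    for flag, name in OPTIONAL_STAGES:
        if getattr(config, flag):
            context = modules[name](query, context)
    return modules["compose"](query, context)  # the LLM synthesis step
```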

A More Natural Interaction with Time Series

Figure 2: RAI allows for interaction and interrogation of your time series data through natural language and in a conversational manner.

RAI enables a fundamentally new way to interact with, interrogate, and derive insights from time series data — directly through natural language in a fluid, conversational format. An example of such an interaction is shown in Figure 2.

What makes this interaction model particularly powerful is how closely it aligns with familiar decision-making workflows. Unlike traditional machine learning systems that may require specialized interfaces or tools, RAI integrates seamlessly into how teams already operate, not only improving performance but also reducing the friction typically associated with onboarding new technology. In fact, the learning curve is comparable to that of using ChatGPT, making adoption intuitive and accessible.

Evaluating Retrieval Performance of Raw Embeddings

Note: this section is intended for technically inclined readers. Feel free to skip ahead if you’re focused on system-level concepts or use cases.

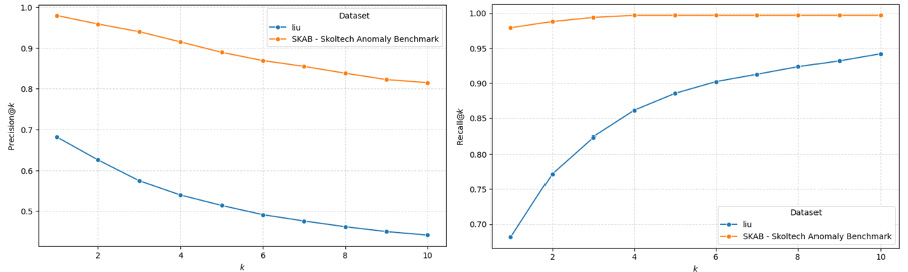

Figure 3: Precision@k and Recall@k for time series retrieval on two major anomaly detection and classification benchmarks show the effectiveness of even the raw embeddings.

As previously discussed, our foundation embedding model plays a central role in RAI by enabling the retrieval of relevant time periods. Although this model is trained in a fully unsupervised manner — without access to any annotations or class labels associated with specific time segments — it still demonstrates strong retrieval capabilities.

To provide a preview of its effectiveness, we evaluated the retrieval system using three standard metrics, namely Recall@k, Precision@k, and mean reciprocal rank (MRR), across two benchmark datasets — SKAB, which captures data from a water circulation system, and LiU, based on internal combustion engine operations.
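For readers who want to reproduce this kind of evaluation, the sketch below computes the three metrics from a ranked list of retrieved chunks. It assumes, as an illustration, that a retrieved chunk counts as relevant when it carries the same label as the query chunk; the post does not state its relevance criterion or which Recall@k convention it uses (some retrieval benchmarks report Recall@k as a hit rate instead), so this uses the standard information-retrieval definitions.

```python
from typing import Sequence


def relevance(query_label: str, retrieved_labels: Sequence[str]) -> list[bool]:
    """Assumed criterion: a retrieved chunk is relevant if it shares the query's label."""
    return [lab == query_label for lab in retrieved_labels]


def precision_at_k(rel: Sequence[bool], k: int) -> float:
    """Fraction of the top-k retrieved chunks that are relevant."""
    return sum(rel[:k]) / k


def recall_at_k(rel: Sequence[bool], k: int, n_relevant: int) -> float:
    """Fraction of all relevant chunks in the corpus that appear in the top k."""
    return sum(rel[:k]) / n_relevant if n_relevant else 0.0


def reciprocal_rank(rel: Sequence[bool]) -> float:
    """1 / rank of the first relevant chunk (0.0 if none is retrieved)."""
    for rank, is_rel in enumerate(rel, start=1):
        if is_rel:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs: Sequence[Sequence[bool]]) -> float:
    """Average reciprocal rank over all queries."""
    return sum(reciprocal_rank(r) for r in runs) / len(runs)
```

An MRR close to 1.0, as reported for SKAB in Table 1, means the first retrieved chunk is almost always relevant.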

As shown in Figure 3, the system achieves consistently high performance on the SKAB dataset. Recall@k approaches 1.0 even for small values of k, indicating that highly relevant time periods are retrieved near the top of the ranked list. Precision@k remains above 0.8 across all values of k, suggesting that the majority of retrieved segments are indeed relevant.

Performance on the LiU dataset is more moderate, yet still encouraging. Recall@k increases steadily with k, exceeding 0.95 at k = 10. However, Precision@k declines more rapidly, falling below 0.5 at higher values of k. This behavior likely reflects greater variability and annotation complexity in the LiU dataset, which features subtler fault regimes and more nuanced expert annotations. These characteristics make LiU a more challenging benchmark, as expected. The MRR scores reported in Table 1 corroborate these results.

Table 1: Mean reciprocal rank (MRR) of retrieval using the raw embeddings.

| Dataset | Mean Reciprocal Rank (MRR) |
|---|---|
| SKAB (Skoltech Anomaly Benchmark) | 0.98 |
| LiU | 0.77 |

The high MRR for SKAB indicates that relevant time chunks are typically ranked very highly, while the lower MRR for LiU suggests that relevant examples may appear deeper in the retrieved list.

These results show that the retrieval system within RAI performs well even on the raw embeddings from the foundation model. Note that they reflect only the output of the retrieval system itself and do not include the additional improvements provided by the full RAI system.

The Role of the Expert Companion

Figure 4: EC enables effective interaction with domain experts at inference time and supports comprehensive, efficient cataloging of their experience and knowledge.

A fundamental component of RAI is the annotated time series vector store, which comprises indexed time series segments enriched with expert-generated annotations, contextual metadata, and domain-specific commentary. This corpus serves as a critical foundation for delivering insights grounded in prior expert experience relevant to the user’s query or operational context. However, such annotated data is often limited in scope, sparse in availability, and subject to significant informational gaps. Moreover, there is typically no standardized process for identifying and systematically addressing these gaps through expert engagement.

To address this limitation, we introduce the expert companion (EC) system. This system builds upon key elements of the RAI framework to facilitate structured interaction with domain experts for the purpose of augmenting the annotated vector store. For example, given an initial set of annotated time series segments, the system can process historical data and assess the uncertainty associated with the retrieved chunks and their corresponding annotations.

In instances where this uncertainty exceeds a predefined threshold, the system flags the absence of sufficiently similar and relevant annotated segments. At such junctures, EC engages a domain expert to review the identified period, provide annotations, and enrich the vector store with new metadata. Repeating this process across the historical data allows for the progressive construction of a high-fidelity, expert-augmented knowledge base — effectively forming a non-parametric expert companion that supports RAI’s interpretability and insight generation.
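The offline loop described above can be sketched as a single sweep over historical periods. `expert_companion_pass`, the `uncertainty` and `ask_expert` callables, and the threshold value are hypothetical; in a real deployment `uncertainty` would wrap RAI retrieval plus the confidence estimate, and `ask_expert` would open a review task for a domain expert.

```python
from typing import Callable, Iterable


def expert_companion_pass(
    periods: Iterable[str],
    uncertainty: Callable[[str], float],
    ask_expert: Callable[[str], str],
    annotated_store: dict[str, str],
    threshold: float = 0.5,
) -> list[str]:
    """Sweep historical periods and route high-uncertainty ones to an expert.

    Each new annotation is written to annotated_store immediately, so later
    periods that resemble an already-annotated one are no longer flagged.
    Returns the ids of the periods sent to the expert.
    """
    flagged: list[str] = []
    for pid in periods:
        if pid in annotated_store:
            continue  # already covered by expert knowledge
        if uncertainty(pid) > threshold:
            annotated_store[pid] = ask_expert(pid)
            flagged.append(pid)
    return flagged
```

Writing annotations back during the sweep, rather than after it, is what makes the knowledge base grow progressively: once a period is annotated, similar periods later in the history are answered from the store instead of being sent to the expert again.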

Although EC plays a pivotal role in the offline preparation and calibration of RAI, its utility extends to real-time operational settings as well. In such cases, EC can be employed to detect and respond to regime changes in the underlying system or data. When novel behavior is observed, EC facilitates expert interpretation and annotation of these events, thereby enabling the vector store to evolve dynamically in response to shifting conditions.
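In the real-time setting, one simple way to decide when to engage an expert is to watch the retrieval confidence of each incoming window. The sketch below flags a possible regime change only when confidence stays below a threshold for several consecutive windows, so that a single noisy window does not trigger an expert review. This persistence rule, the `RegimeShiftMonitor` class, and its parameters are assumptions for illustration; the post does not describe how EC detects regime changes online.

```python
class RegimeShiftMonitor:
    """Hypothetical online monitor over per-window retrieval confidence."""

    def __init__(self, threshold: float = 0.3, patience: int = 3) -> None:
        self.threshold = threshold  # confidence below this counts as "unfamiliar"
        self.patience = patience    # consecutive unfamiliar windows before flagging
        self.low_streak = 0

    def update(self, confidence: float) -> bool:
        """Feed one window's confidence; return True when an expert should be engaged."""
        self.low_streak = self.low_streak + 1 if confidence < self.threshold else 0
        if self.low_streak >= self.patience:
            # Reset so a persistent shift is re-reported at most once every `patience` windows.
            self.low_streak = 0
            return True
        return False
```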

Looking Ahead

Our foundation embedding model unlocks new possibilities for working with time series data. Systems like RAI and EC show how time series models can become more interpretable, more interactive, and more adaptive. In future posts, we’ll explore the architecture and training methods behind these embeddings, and how they generalize across diverse domains.

These innovations are part of a broader shift toward agentic systems that blend machine intelligence with human expertise — not replacing the expert but empowering them with better tools and deeper insights.

About the Authors

Henrik Ohlsson is the Vice President and Chief Data Scientist at C3 AI. Before joining C3 AI, he held academic positions at the University of California, Berkeley, the University of Cambridge, and Linköping University. With over 70 published papers and 30 issued patents, he is a recognized leader in the field of artificial intelligence, with a broad interest in AI and its industrial applications. He is also a member of the World Economic Forum, where he contributes to discussions on the global impact of AI and emerging technologies.

Sina Khoshfetrat Pakazad is the Vice President of Data Science at C3 AI, where he leads research and development in Generative AI, machine learning, and optimization. He holds a Ph.D. in Automatic Control from Linköping University and an M.Sc. in Systems, Control, and Mechatronics from Chalmers University of Technology. With experience at Ericsson, Waymo, and C3 AI, Dr. Pakazad has contributed to AI-driven solutions across healthcare, finance, automotive, robotics, aerospace, telecommunications, supply chain optimization, and process industries. His recent research has been published in leading venues such as ICLR and EMNLP, focusing on multimodal data generation, instruction-following and decoding from large language models, and distributed optimization. Beyond this, he has co-invented patents on enterprise AI architectures and predictive modeling for manufacturing processes, reflecting his impact on both theoretical advancements and real-world AI applications.

Utsav Dutta is a Data Scientist at C3 AI. He holds a bachelor’s degree in mechanical engineering from IIT Madras and a master’s degree in artificial intelligence from Carnegie Mellon University. He has a strong background in applied statistics and machine learning and has authored publications at IEEE and AAAI conferences on machine learning in neuroscience and reinforcement learning for supply chain optimization. Additionally, he has co-authored several patents with C3 AI that expand its product offerings and further integrate generative AI into core business processes.