How C3 AI addressed common enterprise AI challenges with an innovative technology stack

By Mehdi Maasoumy, Vice President, Data Science, C3 AI

For 10 years I have worked in the enterprise technology business, dedicating my efforts to helping companies build effective, value-driven enterprise AI programs. Our customers come to us in various stages of digital transformation, all with one goal in mind: use AI to make better decisions faster. The spectrum of companies we work with at C3 AI is wide. It ranges from those still migrating their data to the cloud and selecting a vendor for their data lake, to those that have tackled a few AI use cases and built prototypes of AI models, to more advanced organizations that have a few applications in production.

Working with some of the most complex companies across the world has afforded not only me, but also C3 AI, the opportunity to continuously evolve and offer the most advanced solutions for our customers. Throughout those years, C3 AI has remained steadfast in one conviction: AI applications are the future of enterprise AI, and they are where our customers get the most value.

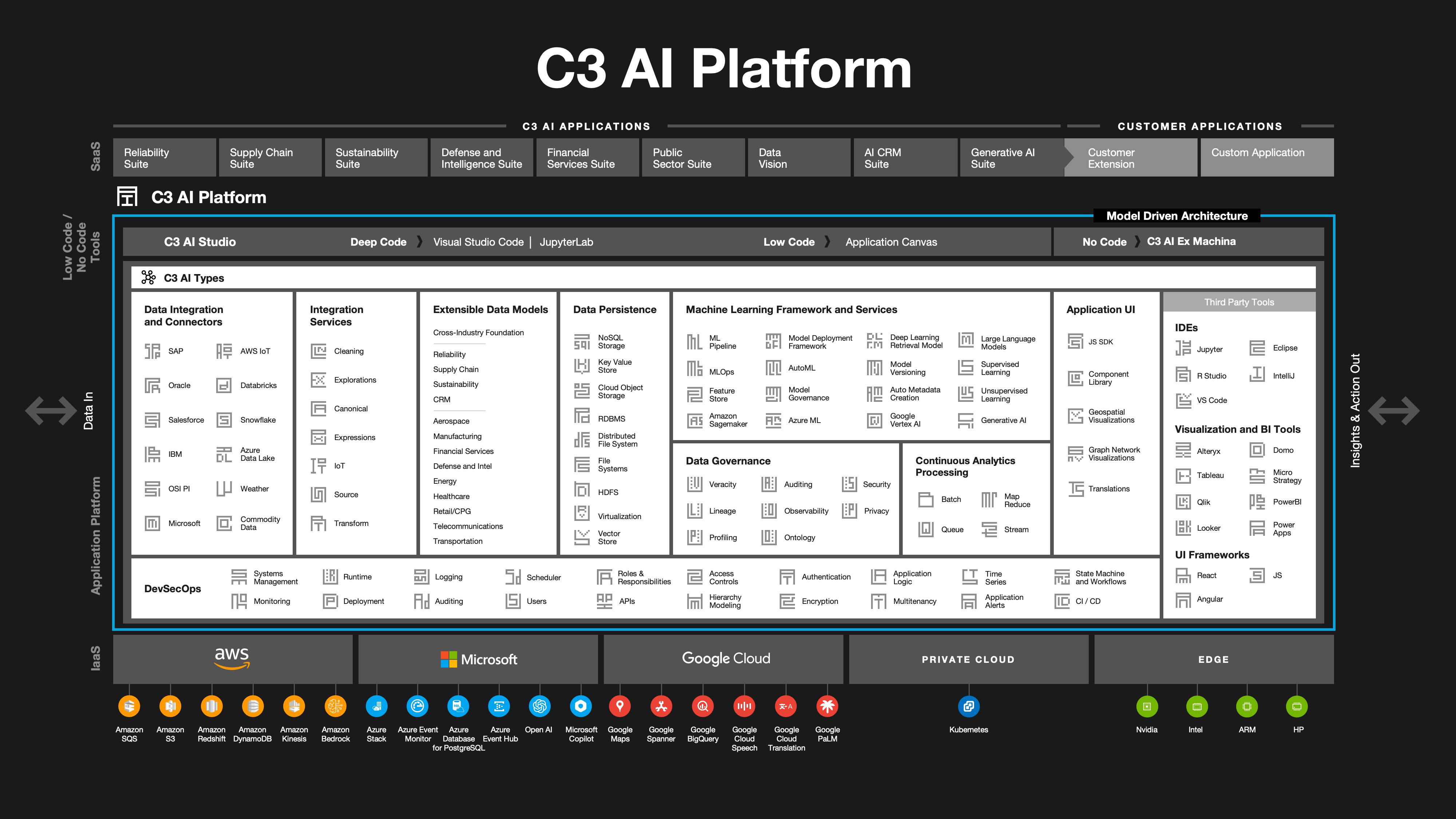

To support this mission, we have spent over 15 years developing and refining the C3 AI Platform: a robust architecture that streamlines the design, development, deployment, operation, and maintenance of advanced AI applications. Running on the C3 AI Platform, our applications are designed to bring enterprise AI to life and turn it into an everyday tool that is baked into the operations of organizations; they can easily digest the complex, vast datasets that enterprises deal with, process those data and run them through AI/ML models, and integrate results into the enterprise’s decision-support systems.

Throughout the years, I have noticed that, regardless of industry or use case, organizations share not just a goal but also common struggles that prevent them from realizing maximum value from enterprise AI. Each company’s needs and data are unique; however, C3 AI’s technology and methodology consistently prove effective in addressing the common challenges companies face as they strive to establish a resilient enterprise AI program and scale it.

An All-Too-Common Approach: Fragmented AI

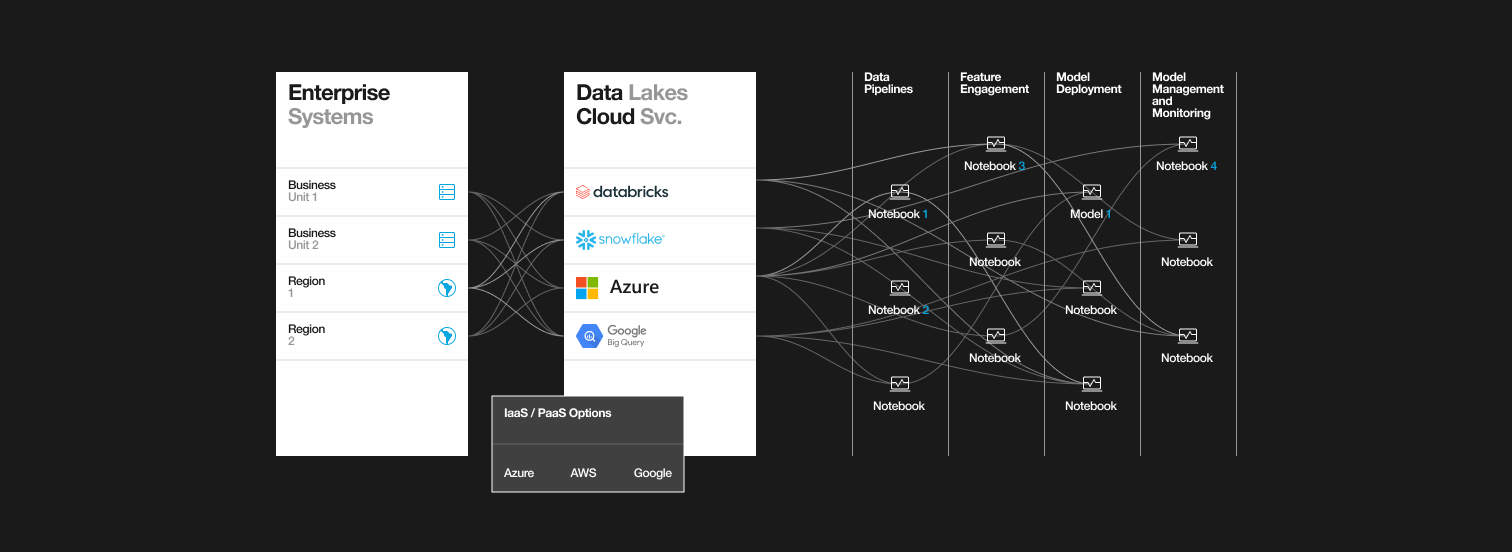

When working to adopt AI, many organizations start small and work to expand, but soon fall into a cycle of repetition. Companies often approach enterprise AI in a piecemeal way, resulting in lengthy science projects that seldom see the light of day as a production use case that delivers value to the business. While data science teams are critical in framing and solving business problems at the prototype phase, their “productionizing Jupyter notebook” approach has failed to scale their solutions across the enterprise.

In this conventional approach, data scientists and developers, whether working together on the same use case or separately on different use cases, need to tap into the same data stores. Each data scientist ends up developing their own data and AI pipelines from scratch on every new project. The complexity of tying together so many disjointed software services (from Oracle and SAP to Databricks and Snowflake to Azure and AWS) makes teams lose track of the end goal of building an AI application. Soon, they end up with a tangled, spaghetti-like situation where the wheel is reinvented on every project and sometimes even multiple times on the same project.

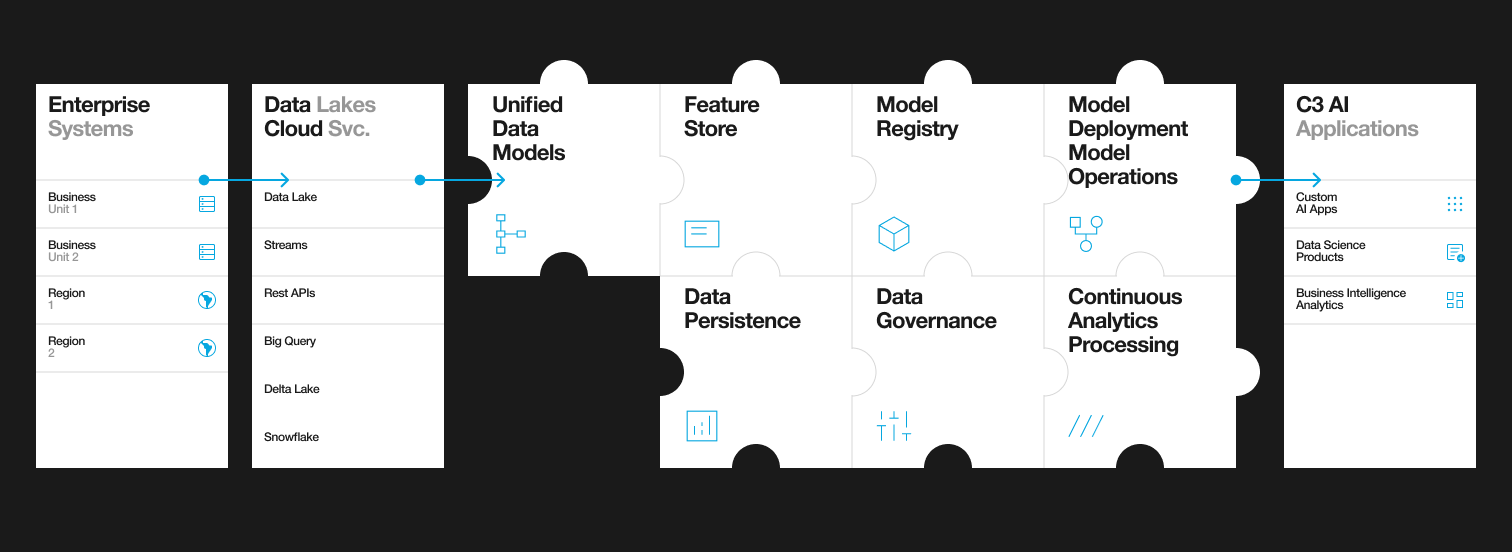

Figure 1: Today’s approach to enterprise AI using cloud AI/ML offerings leads to intractable complexity as the number of AI projects and use cases grows. While these cloud solutions are enablers of AI experimentation, they are not suitable for building AI applications across the enterprise.

That was the case with one C3 AI customer in the energy sector: a utility company founded in the 1990s that has modernized with hardware and IoT but has not invested in robust digital architecture to support AI and ML projects. This company operates on a grid that was not designed or built to handle the continuously growing volume of appliances in homes today. With residents adding even more devices such as heat pumps and electric vehicle chargers, the stress on the network will only intensify.

We also worked with an oil and gas company that approached us after they had spent a few years building four AI applications by cobbling together their existing IT infrastructure and other open-source tools. Even though they were successful in developing those applications, they hit a wall when working to scale them across facilities, data types, databases, and business operations, and then understood that the cost, time, and complex work required to maintain those applications were not feasible internally.

The Solution: The C3 AI Platform and Model-Driven Architecture

At C3 AI, we solved this problem with the C3 AI Platform, which gives organizations a way to design, develop, and deploy AI applications, scale them efficiently, and realize value quickly.

Our solution revolves around enabling different teams to work on the same codebase, building highly configurable, reusable, and integrated software components. This is made possible by implementing a robust architecture that streamlines the design, development, deployment, operation, and maintenance of advanced AI applications through a novel abstraction layer, called model-driven architecture. The C3 AI Platform sits on top of cloud providers such as AWS, Google Cloud, and Azure, providing an abstraction layer to build AI applications efficiently and effectively. At C3 AI, we employ the C3 AI Type System as an abstraction, which binds all the components required for an AI application into one unified, standardized environment to work in. Abstraction allows users to work in any language they are comfortable with; for example, the C3 AI Platform facilitates smooth interoperability of Java, Python, and R.

Think of building an AI application like working on a jigsaw puzzle. C3 AI lays down the border: the C3 AI Platform. Then, organizations can fill in the rest, working in parallel or sequentially.

To get started, one person can lay the pieces out on a table, flipping them right-side-up. Let’s think of this person as data engineer A: she will initiate the project, begin grouping pieces that fit together, and start completing the puzzle. Now, when the next person, say data scientist B, comes along and wants to work on the puzzle, he can begin to assemble a new section, building off the work data engineer A completed.

Figure 2: Building AI applications on the C3 AI Platform can be analogous to solving a jigsaw puzzle: the C3 AI Platform acts as a framework and each piece of the puzzle has a place. Once a contributor places a piece in its right location, that contribution will never get lost. Users can also come in and exchange one piece for another of the same shape. That process would be like taking a data persistence technology on the C3 AI Platform (e.g., Oracle) and swapping it with a new one (e.g., Postgres), or taking a default Python method and replacing it with your own algorithm.

The C3 AI Platform provides an abstraction layer that sits on top of the microservice offerings of cloud providers and enables reuse of connected software components through its object-oriented, model-driven architecture, which is designed to facilitate and expedite AI application development.

As new contributors such as data engineers, data scientists, and UI engineers come into the picture, they can make their own contributions without starting from scratch, by leveraging all the work from previous contributors. And if only one piece, such as a data source or ML model, needs to be changed, it can be removed, upgraded, and replaced without affecting the remainder of the application, which is running on the C3 AI Platform. Teams can rely on full reusability of what they build, not only across their team but also across the entire organization, even when working on seemingly different use cases. This leads to huge productivity gains for the organization.
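The idea of exchanging one puzzle piece for another of the same shape can be sketched in a few lines of Python. This is only an illustration of the general pattern, not the C3 AI Type System or any C3 AI API: the names `ReadingStore`, `InMemoryStore`, `SqliteStore`, and `is_overheating` are hypothetical, and SQLite stands in for whatever persistence technology is being swapped.

```python
import sqlite3
from typing import Optional, Protocol


class ReadingStore(Protocol):
    """Hypothetical persistence interface: the 'shape' of the puzzle piece."""

    def put(self, sensor_id: str, value: float) -> None: ...
    def get(self, sensor_id: str) -> Optional[float]: ...


class InMemoryStore:
    """One interchangeable piece: keeps readings in a dict."""

    def __init__(self) -> None:
        self._rows = {}

    def put(self, sensor_id: str, value: float) -> None:
        self._rows[sensor_id] = value

    def get(self, sensor_id: str) -> Optional[float]:
        return self._rows.get(sensor_id)


class SqliteStore:
    """Another piece of the same shape: keeps readings in SQLite."""

    def __init__(self, path: str = ":memory:") -> None:
        self._db = sqlite3.connect(path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS readings "
            "(sensor_id TEXT PRIMARY KEY, value REAL)"
        )

    def put(self, sensor_id: str, value: float) -> None:
        self._db.execute(
            "INSERT OR REPLACE INTO readings (sensor_id, value) VALUES (?, ?)",
            (sensor_id, value),
        )
        self._db.commit()

    def get(self, sensor_id: str) -> Optional[float]:
        row = self._db.execute(
            "SELECT value FROM readings WHERE sensor_id = ?", (sensor_id,)
        ).fetchone()
        return None if row is None else row[0]


def is_overheating(store: ReadingStore, sensor_id: str, limit: float = 90.0) -> bool:
    """Application logic that depends only on the interface, not the backend."""
    value = store.get(sensor_id)
    return value is not None and value > limit
```

Because `is_overheating` only talks to the interface, either store can be passed in without touching the application logic, which is the property the puzzle analogy describes.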

Enterprise AI Success Requires a Shift in Perspective

There’s a simple reason why we at C3 AI came to approach AI in a way that is different from the approach needed with traditional software systems: AI-based software systems are completely different.

The C3 AI Platform was built to account for this difference; it is the answer to managing the complexity of designing, developing, and deploying such AI/ML-based software systems.

Traditional software systems are composed of code and the running system, requiring only unit tests, integration tests, and system monitoring; ML-based software systems have two new components, namely data and AI models. Introducing these two entities has not only created more sophisticated systems but has also forced a dramatic shift in the underlying architecture, one that requires a much more comprehensive approach to construction.

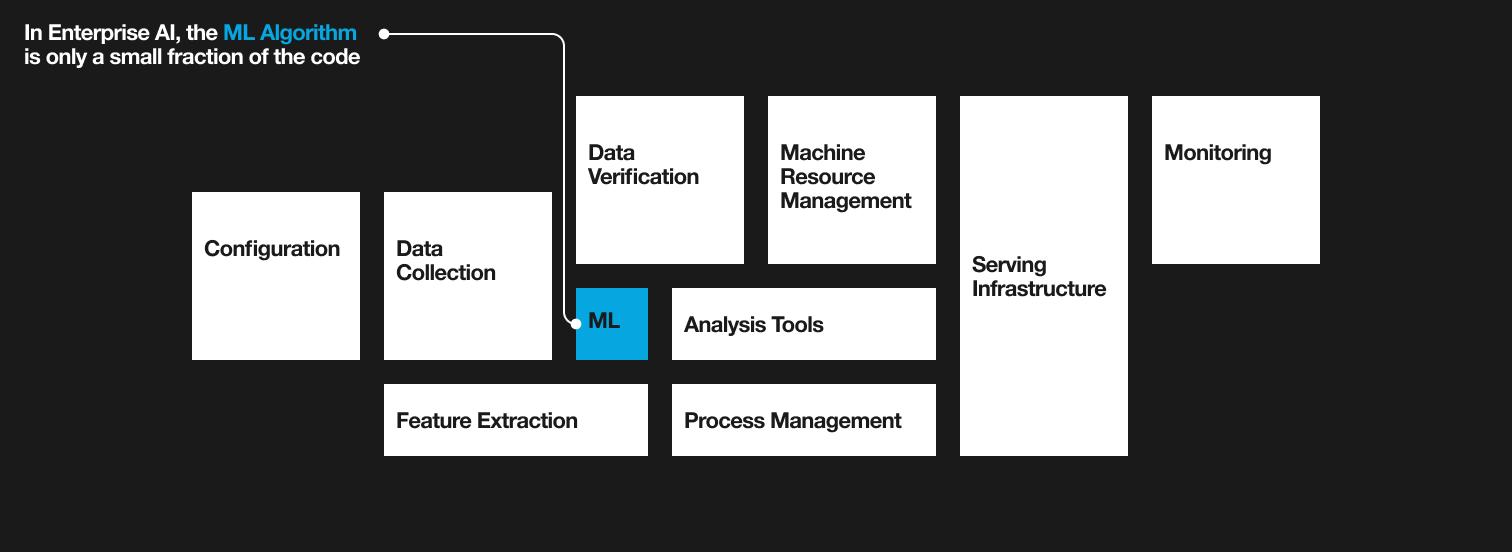

Now, these systems require much more complex workflows, as you can see in Fig. 3. We must check for data skewness, data quality, and completeness, and have data monitoring systems in place. ML infrastructure, containers, runtimes, and ML pipelines need to be tested within the CI/CD pipeline; models need to be tested; and predictions from the AI model need to be monitored. The integration tests now include data and ML infrastructure.

Figure 3: Machine learning software systems are fundamentally different from traditional software systems.

(Source: E. Breck, S. Cai, E. Nielsen, M. Salib and D. Sculley, “The ML test score: A rubric for ML production readiness and technical debt reduction,” 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 2017, pp. 1123-1132, doi: 10.1109/BigData.2017.8258038.)
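To make the additional checks concrete, here is a minimal sketch of three of them: data completeness, training/serving skew, and prediction monitoring. It uses only the Python standard library. The function names, the thresholds, and the assumption that predictions are scores in the range 0 to 1 are illustrative choices, not part of the cited rubric or of the C3 AI Platform.

```python
from statistics import mean, pstdev


def completeness(values):
    """Fraction of entries that are not missing (None)."""
    if not values:
        return 0.0
    return sum(v is not None for v in values) / len(values)


def mean_shift(train_values, serving_values):
    """Distance between serving and training means, in training standard deviations."""
    spread = pstdev(train_values)
    gap = abs(mean(serving_values) - mean(train_values))
    if spread == 0:
        return 0.0 if gap == 0 else float("inf")
    return gap / spread


def out_of_range_share(predictions, low, high):
    """Share of predictions falling outside the expected [low, high] band."""
    if not predictions:
        return 0.0
    return sum(not (low <= p <= high) for p in predictions) / len(predictions)


def run_checks(train, serving, predictions,
               min_completeness=0.95, max_shift=1.0, max_out_of_range=0.05):
    """Return check name -> passed; thresholds are illustrative assumptions."""
    present = [v for v in serving if v is not None]
    return {
        "data_completeness": completeness(serving) >= min_completeness,
        # The skew check fails outright if no serving values are present.
        "train_serving_skew": bool(present) and mean_shift(train, present) <= max_shift,
        # Assumes predictions are scores expected to lie in [0, 1].
        "prediction_range": out_of_range_share(predictions, 0.0, 1.0) <= max_out_of_range,
    }
```

A CI/CD step could call `run_checks` and block a release when any value in the returned dictionary is `False`; real systems would typically use richer statistics than a mean comparison.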

In the software world, abstractions are critical as they allow for separation of responsibilities and enable independent innovation. Abstractions have provided the pillars upon which many great software achievements have been built.

For example, let’s explore some key abstractions that have enabled the training of large language models (LLMs). In the timeline below we have mapped significant abstractions that have played a part in enabling the development of present-day LLMs: starting with x86, which abstracts machine code and enabled the development and mass production of personal computers and servers.

The abstractions provided by these technologies have led to countless innovations in the history of computer science, including the most recent one, LLMs (imagine if we had to train LLMs using punch cards!). There has been a consistent shift within the enterprise software market toward application-centric operations. And in this age of AI, the sector is signaling that AI applications are the end game in digital transformation and the enterprise AI journey.

Beginning in the 1970s, the IT revolution took root when companies started using mainframe computers for tasks like data processing, payroll, and accounting. By the 1990s, enterprise resource planning systems emerged, offering integrated suites of applications for managing core business processes such as finance, human resources, supply chain, and manufacturing. This led to the rise of software giants like SAP and Oracle, streamlining workflows and improving data accuracy. In the 2000s, companies embraced a mix of custom-built and packaged applications, including CRM, SCM, and BI tools.

With evidence that AI applications, and the significant value they deliver, are the goal for companies, it stands to reason that a new abstraction layer is needed to give rise to large-scale development, adoption, and expansion of AI applications across enterprises. The C3 AI Platform offers the abstraction layer needed for building scalable, value-driven AI applications.

Why Should I Invest in the C3 AI Platform?

Collaboration and Reusability

These new requirements create complexity in the system, calling for simplification. This is why we have spent 15 years developing and refining the C3 AI Platform to solve these issues organizations face around efficiency, scalability, collaboration, and reusability when it comes to building and deploying large-scale AI solutions.

We’ve already discussed how the C3 AI Platform enhances collaboration, providing a framework that allows users across the business to easily work in the same environment. Reusability is another key feature that overlaps with collaboration. The cost and time to build applications for additional use cases drop dramatically using the C3 AI Platform, thanks to the reusability of software components and the resulting improved productivity. Hence, it makes sense to think of the C3 AI Platform as an enterprise-wide abstraction layer for building AI applications scaled across the organization, rather than as yet another vendor to solve one specific problem.

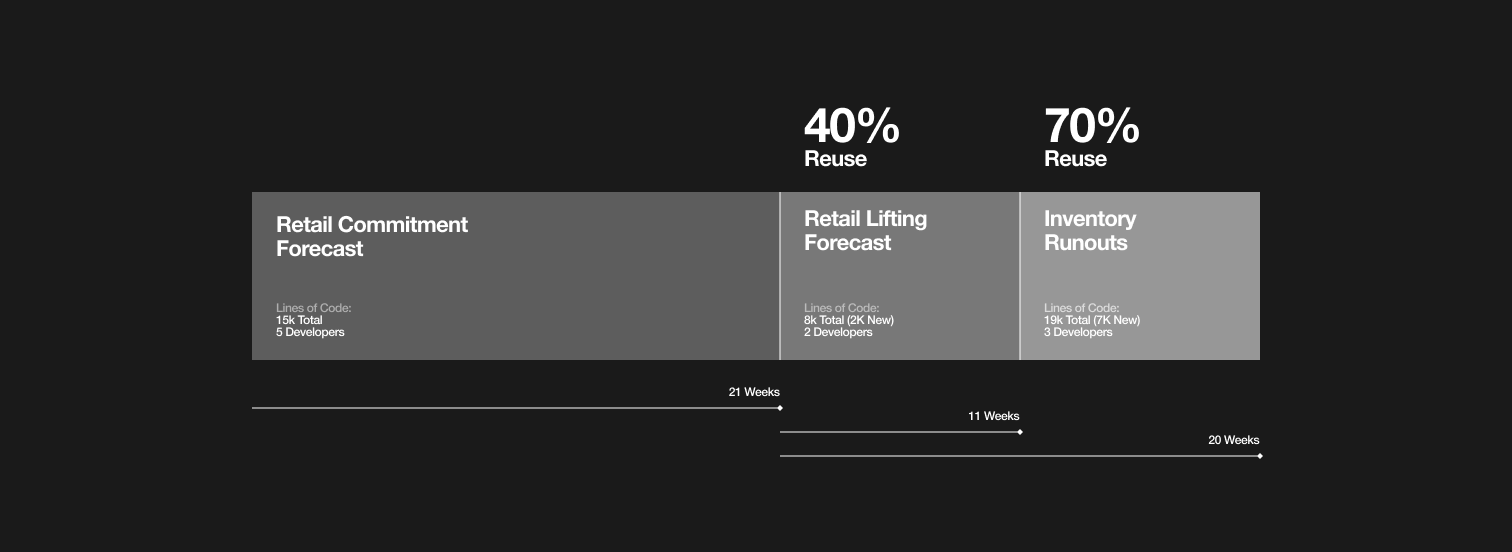

While we always start by solving a single business problem with our customers, that first use case is usually just the beginning. Many of our customers end up scaling to many more use cases or assets (scaling up and out), building an entire enterprise AI program. In one case, illustrated in Fig. 4, a customer was able to reuse 40% and 70% of the initial work in their second and third use cases, respectively. This is a benefit of using a comprehensive platform: it is easy to re-use application elements such as APIs, data, and AI pipelines and packages that are pre-designed to solve a specific problem.

Figure 4: One customer was able to reuse 40% and 70% of the initial work in their second and third use cases, respectively.

This additional investment leads to compound value in several ways. First, more data digested on the platform means predictions and insights across all areas improve when the models can take new cross-functional information into account. Second, software artifacts, including APIs and ML models, from the initial use case can be reused across new ones — driving the value from that first investment up as re-use accelerates AI application development and customers can realize results from new use cases quickly. With the C3 AI Platform, customers can re-use up to 90% of the code. This isn’t just about the end results of business value: it also makes the work of data scientists, engineers, and anyone working on the development of these AI applications easier and faster.

Figure 5: As AI teams build beyond their first AI application, they do not start from scratch with each project using the C3 AI Platform. They can build on top of previous work, continuously and seamlessly adding new data sources, expanding the data model, and building on top of the existing data and AI pipelines to quickly build new applications at a fraction of the cost of the alternative approach of starting from zero.

Though Critical, Machine Learning Models Alone Can’t Drive Value

With C3 AI’s ML models often outperforming benchmarks or customer models, we often get asked if the customer can just purchase the ML models and reimplement them in their own cloud environments. It is a common misconception that what makes enterprise AI powerful is the ML model itself. Rather, ML code is only 5% of what you need to efficiently design, develop, deploy, and manage AI applications at scale.

Figure 6: Only a tiny fraction of the code in many AI systems is devoted to learning or prediction. Much of the remainder may be described as “plumbing.” The C3 AI Platform has taken care of the remaining 95% of the work, freeing up the time of developers and data scientists to focus on the parts that bring competitive advantage to companies: solving the business problem at hand.

To answer this question, let’s examine the steps you’d need to take if you only purchased C3 AI’s ML models and tried to run them in a different environment. To operationalize a model and use it for making predictions, you need to containerize it, manage the runtime, and ensure the runtime is the same as the one used for model training. You need to deploy the model and connect it to the right subjects it needs to predict on. Then you need to feed the model with data, and the data must be in the exact format that the model was trained on. That means you also need to recreate the whole data pipeline that was used in the C3 AI Platform to train the model.

Soon, you realize the model by itself is useless unless you only want to use it for making predictions. And to put this model to use, you need to build an equivalent of the C3 AI Platform and maintain it on your own.
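Two of the requirements above, a serving runtime that matches the training runtime and input data in exactly the training format, can be illustrated with a short sketch. Everything here is hypothetical: the `TRAINING_MANIFEST` structure, its feature names, and the helper functions are assumptions made for illustration and do not describe how C3 AI packages its models.

```python
import sys

# Hypothetical metadata recorded at training time and shipped with the model.
TRAINING_MANIFEST = {
    "python_version": (3, 10),
    "features": {"voltage": float, "current": float, "circuit_id": str},
}


def runtime_matches(manifest, version_info=sys.version_info):
    """True when the serving interpreter has the same major.minor as training."""
    return tuple(version_info[:2]) == tuple(manifest["python_version"])


def schema_errors(manifest, record):
    """List the ways a serving record deviates from the training schema."""
    expected = manifest["features"]
    errors = []
    for name, expected_type in expected.items():
        if name not in record:
            errors.append(f"missing feature: {name}")
        elif not isinstance(record[name], expected_type):
            errors.append(
                f"wrong type for {name}: expected {expected_type.__name__}, "
                f"got {type(record[name]).__name__}"
            )
    for name in record:
        if name not in expected:
            errors.append(f"unexpected feature: {name}")
    return errors
```

Even this toy version hints at the surrounding work: someone has to produce the manifest during training, keep it in sync with the data pipeline, and enforce it wherever the model is served.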

The full architecture — in addition to ML models — of what it takes to build and scale AI applications is shown in Fig. 7, which is the platform that C3 AI has built. It includes data integration and connectors, integration services, extensible data model capabilities, abstraction for data persistence layer, data governance, continuous analytics, machine learning, and UI capabilities.

Figure 7: The successful development of AI and IoT applications requires a complete suite of tools and services that are fully integrated and designed to work together, provided by the C3 AI Platform.

Where Do Cloud Providers Come into the Picture?

Many companies have invested in cloud solutions from providers such as AWS, Google Cloud, or Microsoft Azure for IoT sensors and devices, data lakes, and AI/ML features. A frequent question we get asked from customers who have invested in solutions from cloud providers is: why haven’t these providers solved the issues of efficiency, scalability, collaboration, and reusability when it comes to building and deploying large-scale AI solutions?

The answer lies in the business model of these cloud providers, sometimes called hyperscalers. They are in the business of selling compute, while C3 AI in contrast is in the business of providing end-to-end tooling and accelerators for designing, developing, and deploying end-user-centric AI applications that solve business problems. It is paramount for C3 AI’s success to build a platform that improves the productivity of AI teams such that they can build these AI applications in the shortest time with minimal effort and ensure the process is repeatable. C3 AI partners with cloud providers, and we believe our products are complementary and that both are critical.

Results When It Matters: Customer Success

The results customers see from working with us prove how valuable this approach is to enterprise AI. Let’s take the utility company we were discussing earlier: in just a few months, C3 AI has demonstrated that the C3 AI Reliability application can enhance fault detection and resolution by deploying a complex AI model at the circuit level. Thousands of these models are efficiently implemented with just a few lines of code, ensuring coverage of each low-voltage circuit in the network. Cloud autoscaling simplifies the training and deployment process, adapting the system dynamically to grid demands. This approach allows for proactive fault identification, minimizing downtime and reducing maintenance costs.
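As a rough illustration of what "one model per circuit" can look like in code, the sketch below fits a separate detector for each circuit from a mapping of circuit IDs to historical readings. The detector is a deliberately simple stand-in (a threshold on distance from the historical mean), not the model used in the C3 AI Reliability application, and `CircuitModel` and `fit_all` are hypothetical names.

```python
from statistics import mean, pstdev


class CircuitModel:
    """Stand-in model: flags readings far from a circuit's historical mean."""

    def __init__(self, history, threshold=3.0):
        self.center = mean(history)
        # Fall back to 1.0 so a perfectly flat history does not divide by zero.
        self.spread = pstdev(history) or 1.0
        self.threshold = threshold

    def is_fault(self, reading):
        """True when the reading is more than `threshold` deviations from the mean."""
        return abs(reading - self.center) / self.spread > self.threshold


def fit_all(histories):
    """Fit one model per circuit from a mapping of circuit id -> past readings."""
    return {circuit_id: CircuitModel(h) for circuit_id, h in histories.items()}
```

The per-circuit loop itself is a single expression; the effort in practice lies in supplying each circuit's data and running the fitted models at scale, which is where the platform and cloud autoscaling come in.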

In the case of the oil and gas company mentioned previously, they were able to re-platform their four applications onto the C3 AI Platform in just nine months. Since then, they have scaled those applications across tens of facilities globally with minimal to no code change, thanks to the abstractions provided by the platform.

These are just some of the reasons customers choose to work with C3 AI. Our significant investment in an architecture that makes AI application development more efficient brings value to both people working in the system and business leaders extracting insights and seeing results from that system. Beyond productivity, C3 AI not only brings valuable industry and domain experience to customers, but also brings unique advantages with the C3 AI Platform including scalability to include tens of thousands of assets or use cases.

With C3 AI, customers change the way they think about and approach AI. Turning to enterprise AI creates a new way to see and understand how every facet of business fits together, not only inspiring creative solutions to previously difficult problems but also transforming the future of work.

About the Author

Mehdi Maasoumy is a Vice President of Data Science at C3 AI where he leads AI teams that develop machine learning, deep learning, and optimization algorithms to solve business problems including stochastic optimization of supply chains, reliability, sustainability, and energy management solutions across industries. Mehdi holds a PhD from the University of California at Berkeley in Optimization and Machine Learning. He has authored more than 50 peer-reviewed papers and books in machine learning and optimal control, and is the recipient of three best paper awards from ACM and IEEE.