It didn’t take long for professionals to discover that generative AI is a remarkable tool to get tasks done at work. Need to send an email? Generative AI can write it. Need to review a press release? Generative AI can edit it. Don’t want to review your meeting notes? Generative AI can summarize them.

It also didn’t take long for businesses to recognize the limitations of the public large language models (LLMs) behind consumer generative AI tools like ChatGPT, which left them and their employees searching for a secure and reliable alternative.

The solution is C3 Generative AI. It is designed for the enterprise and differs from consumer LLMs in several ways. It provides:

Security for an enterprise’s IP:

Yes, consumer LLMs can summarize meeting notes, but that information is at risk of being leaked because your data is stored on external servers. C3 Generative AI eliminates this LLM leakage by isolating a business’s proprietary data from the LLM. Workers can then submit queries and process and analyze data without fear of jeopardizing their business’s privacy and security.

Accurate answers:

While consumer generative AI tools can quickly return facts and figures, their answers are predicted from the public, unverified data they were trained on, which makes them prone to generating false information, known as hallucinations. C3 Generative AI relies instead on an enterprise’s proprietary data, so that answers come from the appropriate sources. When a user asks a question the data cannot answer, a safeguard blocks the application from hallucinating an incorrect answer.
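
The safeguard idea can be sketched in a few lines of Python. This is a toy illustration, not C3 AI's implementation: the word-overlap score, the `min_score` threshold, and every function name here are assumptions chosen only to show the pattern of declining to answer when no source supports the question.

```python
# Toy illustration only. All names and the scoring rule are hypothetical.

def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words without punctuation."""
    return {w.strip(".,?!:;").lower() for w in text.split()} - {""}

def overlap_score(question: str, passage: str) -> float:
    """Fraction of the question's words that also appear in the passage."""
    q = tokenize(question)
    return len(q & tokenize(passage)) / len(q) if q else 0.0

def answer_or_refuse(question: str, passages: list[str], min_score: float = 0.5):
    """Return the best-matching passage, or None when nothing matches well enough."""
    best = max(passages, key=lambda p: overlap_score(question, p), default=None)
    if best is None or overlap_score(question, best) < min_score:
        return None  # Decline to answer rather than guess.
    return best

passages = [
    "The bond will mature in 2030.",
    "Coupon payments are made twice a year.",
]
print(answer_or_refuse("When does the bond mature?", passages))
print(answer_or_refuse("Who is the CEO of the issuer?", passages))
```

A production system would use a far stronger relevance signal than word overlap, but the control flow is the point: the application checks for supporting material first and returns nothing when it finds none.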

Consistent responses:

Two people can ask a consumer LLM like ChatGPT the same question, yet the LLM can produce two different answers. Rather than having the LLM predict an answer, C3 Generative AI employs a separate system, such as a vector store connected to a retrieval model or a retrieval model connected to a database, to source relevant information from an enterprise’s data, which the LLM then summarizes. Because the same question draws on the same source material, the answers stay consistent from one user to the next.
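
A minimal sketch of this retrieve-then-summarize pattern follows. It is an assumption-laden toy, not the product's architecture: word counts stand in for a learned embedding model, `VectorStore` is a hypothetical in-memory class, and `summarize` is a placeholder where the LLM call would go.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would use a learned model."""
    return Counter(w.strip(".,?!:;").lower() for w in text.split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class VectorStore:
    """Hypothetical in-memory store mapping document ids to vectors."""

    def __init__(self, docs: dict[str, str]):
        self.docs = docs
        self.vectors = {doc_id: embed(text) for doc_id, text in docs.items()}

    def retrieve(self, question: str, k: int = 1) -> list[str]:
        """Return the ids of the k most similar documents, ties broken by id."""
        q = embed(question)
        ranked = sorted(self.vectors, key=lambda d: (-cosine(q, self.vectors[d]), d))
        return ranked[:k]

def summarize(question: str, passages: list[str]) -> str:
    """Placeholder for the LLM, which would condense the retrieved passages."""
    return " ".join(passages)

def answer(store: VectorStore, question: str, k: int = 1) -> str:
    """Retrieve first, then summarize only what was retrieved."""
    ids = store.retrieve(question, k)
    return summarize(question, [store.docs[i] for i in ids])

store = VectorStore({
    "bond.pdf": "The bond funds construction of a new bridge.",
    "hr.pdf": "Employees accrue vacation days each month.",
})
print(answer(store, "What does the bond fund?"))
```

The retrieval step here is deterministic, so two users asking the same question hand the summarizer identical input. How much variation remains after that depends on how the LLM itself is configured.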

Full traceability:

Even if consumer LLMs generated accurate and consistent information, workers would have no way of tracing its source. C3 Generative AI shows which documents it uses to generate each answer.
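
One way to picture traceability is to keep a source location attached to every piece of text the system can draw on. The sketch below is illustrative only: the `Chunk` type, the file name, the page numbers, and the keyword match are all hypothetical and are not taken from the product.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Chunk:
    """A passage of text together with where it came from."""
    document: str
    page: int
    text: str

def find_with_citations(chunks: list[Chunk], keyword: str) -> list[tuple[str, str]]:
    """Return (text, citation) pairs for every chunk that mentions the keyword."""
    hits = []
    for chunk in chunks:
        if keyword.lower() in chunk.text.lower():
            hits.append((chunk.text, f"{chunk.document}, p. {chunk.page}"))
    return hits

# Made-up example data.
chunks = [
    Chunk("bond_offering.pdf", 3, "Proceeds will finance a new bridge."),
    Chunk("bond_offering.pdf", 12, "Maturity date: 1 June 2030."),
]
for text, citation in find_with_citations(chunks, "maturity"):
    print(f"{text} [{citation}]")
```

Because the citation travels with the text, any answer built from these chunks can point back to the document and page it came from.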

Complete access control:

Consumer LLMs give everyone equal access to all underlying data. This doesn’t suit the needs of a business or government agency, where the documents people can access are tied to their role. C3 Generative AI gives enterprises total control over which employees can see what.
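
Role-based access of this kind can be sketched as a filter applied before any document reaches the retrieval step. The roles, file names, and `visible_documents` function below are hypothetical, and the sketch assumes a simple mapping from each document to the set of roles allowed to read it.

```python
def visible_documents(user_roles: set[str], documents: dict[str, set[str]]) -> list[str]:
    """Keep only the documents whose allowed roles overlap the user's roles."""
    return [name for name, allowed in documents.items() if allowed & user_roles]

# Made-up access rules: document name -> roles allowed to read it.
documents = {
    "bond_offering.pdf": {"analyst", "compliance"},
    "payroll.xlsx": {"hr"},
}
print(visible_documents({"analyst"}, documents))
```

Filtering before retrieval, rather than after an answer is generated, means restricted text never enters the material the LLM summarizes for that user.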

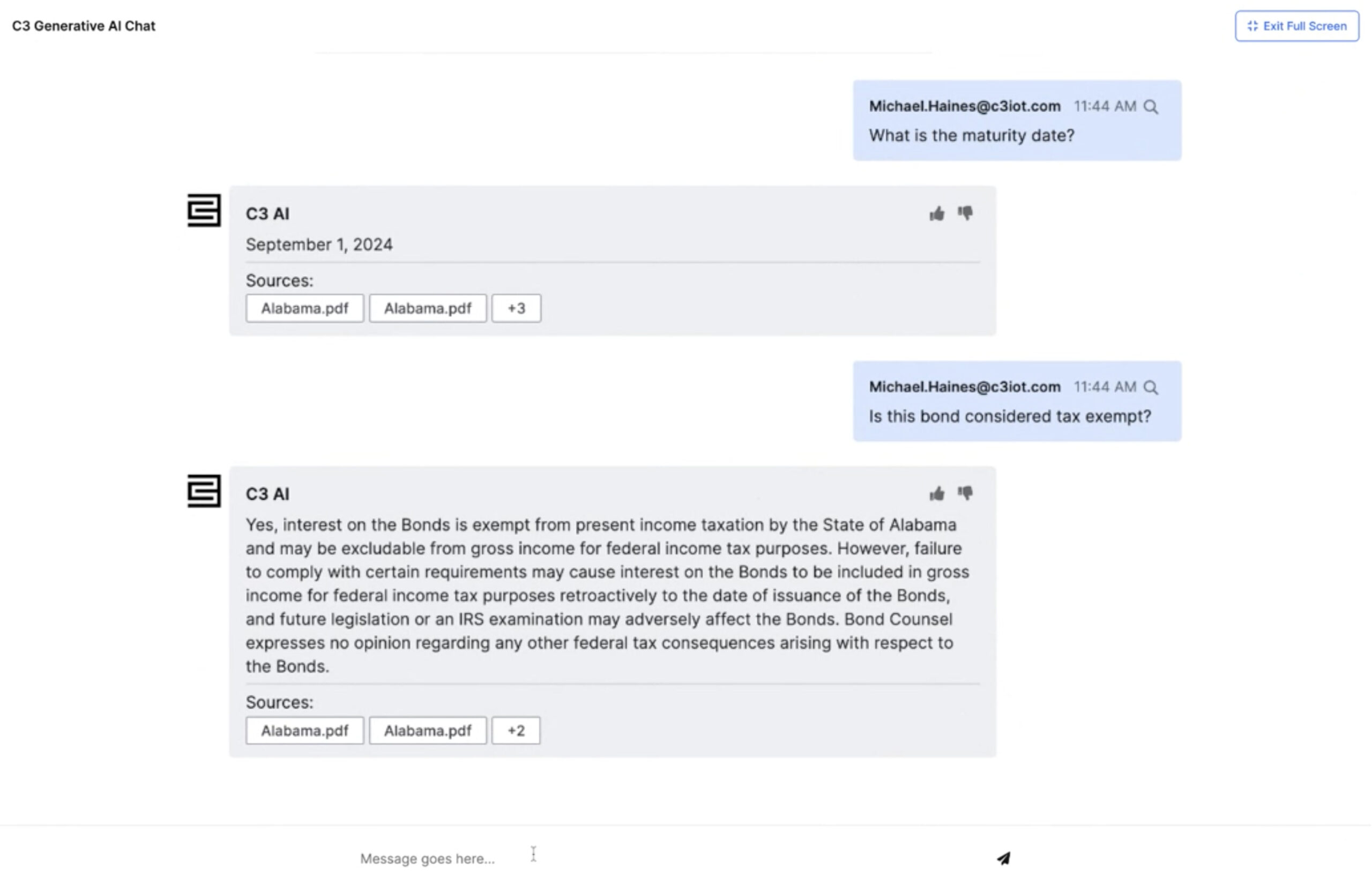

In a webinar on generative AI in the workplace, C3 AI’s lead product manager, Michael Haines, showcased these features, demonstrating how an investment banking analyst could review a lengthy financial bond document.

Through his queries, C3 Generative AI identified the purpose of the bond and located crucial information scattered throughout the document, completing the hours-long review process in seconds. If Haines wanted to check where in the document an answer came from, the responses provided links to the exact locations.

Professionals want to use generative AI at work, but it’s important that they do so in a trustworthy and secure manner. Only by adopting generative AI designed specifically for the needs of the enterprise will professionals realize its full potential to help them work securely, efficiently, and effectively. In turn, workers can spend more time on the high-value, high-complexity tasks that demand their attention.

To learn more, watch our webinar: How Generative AI Will Change the Way We Work.