Flexible, model-driven architecture enables high-performance AI applications for low-resource environments

By Ashish Somani, Customer Solution Architect, C3 AI

To operate an industrial plant, companies regularly rely on edge devices to collect and transmit data from equipment and sensors within the facility. Companies looking to adopt modern software solutions, including AI applications for predictive maintenance in plants, often run into roadblocks imposed by the constraints of edge devices.

Holcim, a leader in digital transformation and AI innovation in the sustainable building solutions industry, uses C3 AI Reliability across multiple plants for predictive maintenance. To ensure plant control engineers can respond promptly to alerts while continuing to use existing systems and processes, Holcim worked with C3 AI to deploy C3 AI Reliability on edge devices. To do this, the teams created a customized configuration of the AI application, powered by Version 8 of the C3 AI Platform. The C3 AI Platform is designed to process data and deliver AI insights at scale for highly critical, high-value business use cases such as predictive maintenance, supply chain optimization, and sustainability management.

Running AI Applications on Edge Devices at Holcim

Currently, Holcim is monitoring thousands of sensors on critical equipment, including the vertical roller mills that are vital to operations at its plants. Work to date has advanced predictive maintenance and operational efficiency at scale, while enabling Holcim to achieve a step-change in asset lifecycle management and to improve reliability and capacity for its customers.

Edge devices offer robust security and local compute, but they are designed to operate without traditional digital infrastructure such as reliable, high-bandwidth internet connections and cloud computing. Yet many edge use cases, including critical equipment maintenance, require real-time decision making. To meet that need, edge devices and platforms must process and analyze data locally, without first sending it to the cloud, so decisions can be made rapidly.

The C3 AI Reliability application and V8 of the C3 AI Platform running on the edge can overcome these typical edge device limitations and provide a fully featured application for Holcim plant engineers to obtain machine learning (ML) insights in a timely manner. To ensure the success of this collaboration, the C3 AI services team worked closely with Holcim to understand their edge infrastructure and deploy a customized solution.

Holcim wanted to run C3 AI Reliability on resource-constrained, semi-air-gapped edge devices to protect its investments and improve maintenance activities at the plants. The C3 AI services team analyzed and extensively optimized the required compute resources to fit within the limited capacity of the edge devices, without compromising the performance, quality, or availability of C3 AI predictions. This approach enabled plant engineers to respond promptly to alerts while continuing to use their existing systems and processes, and it removed the plant maintenance team's dependency on alerts being available from a central system.

C3 AI Platform Features that Enable AI Predictions on the Edge

The C3 AI Platform, deployed on Kubernetes, provides a flexible architecture that enables efficient customization of services to meet specific edge requirements. We leveraged this capability of the C3 AI Platform by:

- Deploying the C3 AI Platform on open-source Kubernetes (Rancher), with Longhorn as the distributed persistent volume store.

- Adjusting the resource requests and limits of Kubernetes workloads to fit within the constrained infrastructure.

- Engineering a fit-for-purpose datastore to store sensor measurement data on the edge device.

- Using a C3 AI Model Registry component to host the latest trained ML models.

- Using the C3 AI Platform artifact store to deploy and manage the application.

- Ingesting IoT data as it becomes available, running model inference, and sending alerts to plant engineers.
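Fitting workloads onto a small edge node comes down to arithmetic on resource requests: the Kubernetes scheduler places pods based on their requests (limits cap usage at runtime), so summing the requests and comparing them with the node's allocatable CPU and memory gives a quick feasibility check. The Python sketch below does that. The workload names, quantities, node size, and 10% headroom are hypothetical, not figures from the Holcim deployment, and the parser handles only the common CPU ("500m") and memory ("Ki", "Mi", "Gi", "k", "M", "G") suffixes.

```python
"""Hypothetical check that workload resource requests fit on a small edge node.

All workload names, quantities, node capacities, and the headroom fraction are
illustrative assumptions, not values from any real deployment.
"""
from dataclasses import dataclass

# Binary (Ki, Mi, Gi) and decimal (k, M, G) suffixes used in Kubernetes quantities.
_MEMORY_SUFFIXES = {
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3,
    "k": 1000, "M": 1000**2, "G": 1000**3,
}


def parse_cpu(quantity: str) -> float:
    """Convert a CPU quantity such as '500m' (millicores) or '2' to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as '512Mi' or '2Gi' to bytes."""
    for suffix, factor in _MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(float(quantity[: -len(suffix)]) * factor)
    return int(quantity)


@dataclass
class Workload:
    name: str
    cpu_request: str
    memory_request: str
    replicas: int = 1


def fits_on_node(workloads, node_cpu: str, node_memory: str, headroom: float = 0.1):
    """Return (fits, total_cpu_cores, total_memory_bytes) for the summed requests,
    keeping a hypothetical fraction of node capacity free as headroom."""
    cpu_total = sum(parse_cpu(w.cpu_request) * w.replicas for w in workloads)
    mem_total = sum(parse_memory(w.memory_request) * w.replicas for w in workloads)
    cpu_budget = parse_cpu(node_cpu) * (1 - headroom)
    mem_budget = parse_memory(node_memory) * (1 - headroom)
    return cpu_total <= cpu_budget and mem_total <= mem_budget, cpu_total, mem_total


# Hypothetical workloads for a 4-core, 16 GiB edge node.
workloads = [
    Workload("app-server", "1500m", "3Gi"),
    Workload("inference-worker", "1", "2Gi"),
    Workload("datastore", "500m", "2Gi"),
    Workload("storage-replica", "250m", "512Mi", replicas=2),
]

fits, cpu, mem = fits_on_node(workloads, node_cpu="4", node_memory="16Gi")
print(fits, cpu, mem / 1024**3)  # True 3.5 8.0
```

Checking requests rather than limits mirrors how placement works; tightening limits as well would keep a misbehaving pod from starving its neighbors on a node this small.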

In the initial production deployment, we worked closely with the Holcim team to deploy the required C3 AI Platform components (a Kubernetes cluster, a distributed persistent volume store, the C3 AI Reliability application, and the latest trained ML models) on several edge instances at plants across the globe.

The Value of Flexibility on the C3 AI Platform

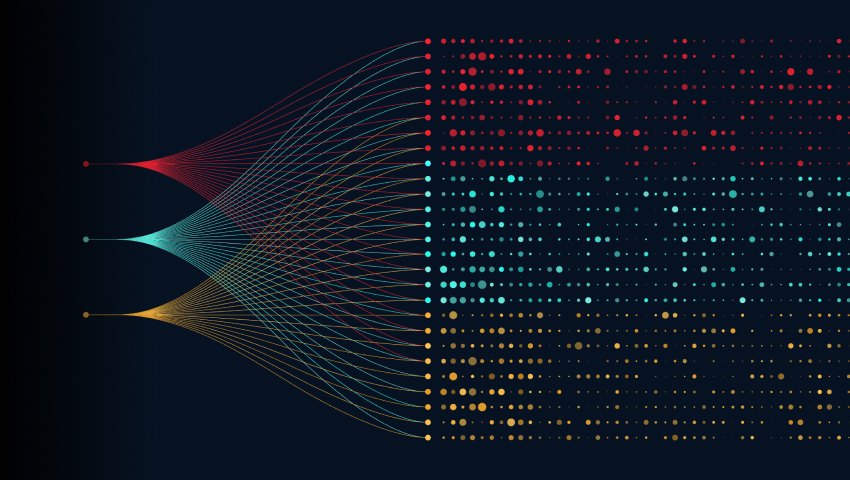

The above figure shows how the C3 AI Platform and C3 AI Reliability are configured for deployment on small edge devices and integrated with the C3 AI Reliability instance in the customer's cloud environment. The edge deployment uses the small, flexible services described above to produce alerts from live sensor data covering only the past seven days. The cloud instance of C3 AI Reliability runs the full set of DataOps and MLOps services, including training models on millions of rows of data.
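Keeping only the past seven days of live sensor data on the edge can be sketched as a time-ordered buffer that evicts readings older than seven days relative to the newest one. The `Reading` type, the sensor name, and the assumption that readings arrive in timestamp order are hypothetical; the article specifies only the seven-day window.

```python
"""Sketch of keeping only the most recent seven days of sensor readings on an edge device.

The Reading type, sensor names, and sample data are illustrative assumptions.
"""
from __future__ import annotations

from collections import deque
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Reading:
    sensor_id: str
    timestamp: datetime
    value: float


class RollingWindow:
    """Buffer that evicts readings older than `window` relative to the newest reading."""

    def __init__(self, window: timedelta = timedelta(days=7)):
        self.window = window
        self._readings: deque[Reading] = deque()

    def append(self, reading: Reading) -> None:
        # Assumes readings arrive in timestamp order, as from a live feed.
        self._readings.append(reading)
        cutoff = reading.timestamp - self.window
        # Oldest readings sit at the left end, so evict from there.
        while self._readings and self._readings[0].timestamp < cutoff:
            self._readings.popleft()

    def values(self, sensor_id: str) -> list[float]:
        """Return the retained values for one sensor, oldest first."""
        return [r.value for r in self._readings if r.sensor_id == sensor_id]

    def __len__(self) -> int:
        return len(self._readings)


# Ten daily readings from a hypothetical vibration sensor.
start = datetime(2024, 1, 1)
window = RollingWindow()
for day in range(10):
    window.append(Reading("mill-vibration", start + timedelta(days=day), float(day)))

print(window.values("mill-vibration"))  # [2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0]
```

Because eviction happens on every append, memory use stays bounded by the window length rather than by how long the device has been running.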

C3 AI architecture therefore provides the flexibility to adjust and size the resources an application needs for the environment it operates in. This makes it possible to set each pod's resources to match its requirements and to deploy a secure, reliable cluster on small edge devices.

AI Application Design for Edge Devices

Based on this experience, we’ve outlined some key considerations when designing AI applications for edge devices at scale:

- Use a solution with high availability on edge devices: In the context of model inference, timely access to alerts is crucial. The C3 AI Platform has a self-recovery capability that allows the application to recover on its own without data loss and with little or no impact on the end user.

- Allocate appropriate edge compute resources: V8 of the C3 AI Platform provides flexibility in allocating resources and distributing processes, which helps balance resource utilization on edge devices.

- Ensure resiliency: Due to limited connectivity at an industrial plant, manual or immediate remote management is not always possible. With the self-recovery capability of the C3 AI Platform, the platform and application recover on their own in case of unplanned disruptions.

- Central artifact management: The C3 AI artifact hub manages application packages and pushes them to edge devices as appropriate. On the edge, the C3 AI package store (a component of the artifact hub) manages the application.

- Specify ML model deployment to each plant: The C3 AI Model Registry manages models for all plants in the cloud with specific tags. Deployment to each edge device is plant-specific, so champion models that are not relevant to that plant must not be deployed to it.

- Automate end-to-end workflows: Because plant engineers have limited familiarity with the C3 AI Platform, workflows must be fully automated, so we used the C3 AI Platform scheduler service to automate data ingestion and model inference jobs as required.

- Streamline monitoring and troubleshooting: Due to limited connectivity, immediate remote troubleshooting may not be possible, but V8 of the C3 AI Platform maintains the container logs for a defined period within storage constraints, allowing for remote analysis of issues.

- Deploy a self-managed datastore: A major advantage for plant users is that they can assess and act on predictive maintenance alerts from the C3 AI Platform without being concerned about data storage. Storage is managed through the C3 AI Platform storage management APIs, which are scheduled with the C3 AI scheduler service.

- Simplify maintenance and updates: Multi-location remote edge deployments can face synchronization challenges, but V8 of the C3 AI Platform is containerized, which simplifies the deployment process.
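The plant-specific model deployment rule in the list above can be sketched as a filter over registry records: keep only models tagged for the target plant and marked as champions, then take the highest version per asset. The record shape, the `plant`, `asset`, and `stage` tag names, and the model names are hypothetical; this is not the C3 AI Model Registry API.

```python
"""Sketch of selecting only plant-relevant champion models for an edge deployment.

Record fields, tag names, and model names are hypothetical illustrations.
"""
from dataclasses import dataclass, field


@dataclass
class ModelRecord:
    name: str
    version: int
    tags: dict = field(default_factory=dict)


def models_for_plant(registry, plant_id):
    """Return the latest champion model per asset for one plant, ignoring other plants."""
    latest = {}
    for record in registry:
        # Skip models for other plants and non-champion stages (e.g. challengers).
        if record.tags.get("plant") != plant_id or record.tags.get("stage") != "champion":
            continue
        asset = record.tags.get("asset")
        current = latest.get(asset)
        if current is None or record.version > current.version:
            latest[asset] = record
    return sorted(latest.values(), key=lambda r: r.tags["asset"])


registry = [
    ModelRecord("vrm-anomaly", 3, {"plant": "plant-a", "asset": "vrm-1", "stage": "champion"}),
    ModelRecord("vrm-anomaly", 4, {"plant": "plant-a", "asset": "vrm-1", "stage": "champion"}),
    ModelRecord("vrm-anomaly", 5, {"plant": "plant-a", "asset": "vrm-1", "stage": "challenger"}),
    ModelRecord("kiln-fan", 2, {"plant": "plant-a", "asset": "fan-7", "stage": "champion"}),
    ModelRecord("vrm-anomaly", 9, {"plant": "plant-b", "asset": "vrm-1", "stage": "champion"}),
]

for m in models_for_plant(registry, "plant-a"):
    print(m.tags["asset"], m.name, m.version)
# fan-7 kiln-fan 2
# vrm-1 vrm-anomaly 4
```

Filtering by tags before anything is pushed keeps the edge device's limited storage free of models it would never use.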

There are a few other considerations to keep in mind, such as how best to manage logs and operationalize alerts when issues arise at the plants. These considerations become essential in a hybrid approach that further centralizes edge deployment in a client-server architecture across a network of interconnected plants.
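The log and storage items in the list above (keeping container logs for a defined period within storage constraints, and scheduled storage management) can be illustrated with a two-pass retention policy: first delete log files older than a retention period, then, if the remaining files still exceed a byte budget, delete the oldest first. The `*.log` naming, 14-day period, and byte budget are hypothetical, and this is a generic file-system sketch, not how the C3 AI Platform manages logs or storage.

```python
"""Sketch of a retention policy that keeps logs for a defined period within a storage budget.

The directory layout, retention period, and byte budget are hypothetical.
A scheduled job could run prune_logs periodically.
"""
from __future__ import annotations

import os
import tempfile
import time
from pathlib import Path


def prune_logs(log_dir: Path, max_age_days: float, max_total_bytes: int,
               now: float | None = None) -> list[Path]:
    """Delete expired logs, then the oldest remaining logs until under budget."""
    now = time.time() if now is None else now
    files = sorted(log_dir.glob("*.log"), key=lambda p: p.stat().st_mtime)  # oldest first
    removed, kept = [], []

    # Pass 1: drop anything older than the retention period.
    cutoff = now - max_age_days * 86400
    for path in files:
        if path.stat().st_mtime < cutoff:
            path.unlink()
            removed.append(path)
        else:
            kept.append(path)

    # Pass 2: if still over budget, drop the oldest remaining files first.
    total = sum(p.stat().st_size for p in kept)
    for path in kept:
        if total <= max_total_bytes:
            break
        total -= path.stat().st_size
        path.unlink()
        removed.append(path)
    return removed


with tempfile.TemporaryDirectory() as tmp:
    log_dir = Path(tmp)
    now = time.time()
    # Three hypothetical 1 KiB log files aged 20, 5, and 1 days.
    for name, age_days in [("a.log", 20), ("b.log", 5), ("c.log", 1)]:
        path = log_dir / name
        path.write_bytes(b"x" * 1024)
        mtime = now - age_days * 86400
        os.utime(path, (mtime, mtime))
    removed = prune_logs(log_dir, max_age_days=14, max_total_bytes=1500, now=now)
    remaining = sorted(p.name for p in log_dir.glob("*.log"))

print(sorted(p.name for p in removed), remaining)  # ['a.log', 'b.log'] ['c.log']
```

Applying the age rule before the size rule means the budget pass only ever discards logs that are still inside the retention period when storage is genuinely tight.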

Edge Deployment as a Network

Edge deployment is not just an innovation; it is a strategic capability of the C3 AI Platform that frees AI from network limitations, allowing real-time alert generation even behind secure firewalls. This capability improves the plant control team's efficiency, adaptability, and near-real-time decision making.

There are multiple ways to approach edge deployment using C3 AI solutions; let's briefly explore one more. Another efficient and scalable approach to processing data and generating AI alerts is to deploy in a client-server architecture across a network of interconnected plants. In this approach, the edge machine acts as a client that connects to a central server for all required data, which makes the solution less maintenance-heavy and more scalable. Some key design considerations are:

- Functional data partitioning: Data, including models and AI alerts, should be persisted in the data store so that each plant can access its own data at any time. The C3 AI Platform offers a composite key partitioning capability for NoSQL data to meet this requirement.

- Robust data access controls: Data access rules differ across plants and across the applications deployed on the edge infrastructure. The C3 AI Platform has a built-in role-based access control solution that can be configured to meet this requirement.
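The two design considerations above can be combined in a small in-memory sketch: rows are stored under a composite key whose first component is the plant, so each plant's models and alerts land in one partition, and every read is checked against the caller's plant scope and role before the partition is touched. The key layout, role names, and store class are hypothetical and do not describe the C3 AI Platform's partitioning or access-control APIs.

```python
"""Sketch of composite-key partitioning plus a role check for a multi-plant store.

Key layout (plant_id, entity_type, entity_id), role names, and the in-memory
store are hypothetical illustrations.
"""
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CompositeKey:
    plant_id: str     # partition key: all of a plant's data lives together
    entity_type: str  # e.g. "alert" or "model"
    entity_id: str


@dataclass(frozen=True)
class Principal:
    user: str
    roles: frozenset[str]
    plants: frozenset[str]  # plants this user may access


# Hypothetical mapping from role to the entity types it may read.
ROLE_PERMISSIONS = {
    "plant_engineer": {"alert"},
    "reliability_admin": {"alert", "model"},
}


class PartitionedStore:
    def __init__(self) -> None:
        # plant_id -> {(entity_type, entity_id): value}
        self._partitions: dict[str, dict[tuple[str, str], object]] = defaultdict(dict)

    def put(self, key: CompositeKey, value: object) -> None:
        self._partitions[key.plant_id][(key.entity_type, key.entity_id)] = value

    def query(self, who: Principal, plant_id: str, entity_type: str) -> list[object]:
        """Read one partition after enforcing plant scope and role permissions."""
        allowed = set().union(*(ROLE_PERMISSIONS.get(r, set()) for r in who.roles))
        if plant_id not in who.plants or entity_type not in allowed:
            raise PermissionError(f"{who.user} may not read {entity_type} for {plant_id}")
        partition = self._partitions.get(plant_id, {})
        return [v for (etype, _), v in sorted(partition.items()) if etype == entity_type]


store = PartitionedStore()
store.put(CompositeKey("plant-a", "alert", "a-001"), "VRM-1 vibration high")
store.put(CompositeKey("plant-a", "model", "vrm-anomaly"), "model v4")
store.put(CompositeKey("plant-b", "alert", "b-001"), "Fan-7 temperature high")

engineer = Principal("eng-a", frozenset({"plant_engineer"}), frozenset({"plant-a"}))
print(store.query(engineer, "plant-a", "alert"))  # ['VRM-1 vibration high']
try:
    store.query(engineer, "plant-b", "alert")
except PermissionError as exc:
    print(exc)  # eng-a may not read alert for plant-b
```

Putting the plant first in the key means a permitted read touches a single partition, so the access check and the data layout enforce the same boundary.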

By deploying edge solutions at plants, C3 AI empowers businesses with a formidable arsenal to navigate challenges and capitalize on emerging opportunities.

Learn more about the C3 AI Platform and explore all its advanced capabilities, including edge deployment, which improve efficiency across industrial plants and deliver accurate AI alerts with no dependency on external network availability.

About the Author

Ashish Somani, an AI Solution Architect at C3 AI, leads the implementation of C3 AI applications for various customers. With over 20 years of experience, Ashish is a technology leader in delivering complex data and analytics solutions and services for clients.