- AI Software

- C3 AI Applications

- C3 AI Applications Overview

- C3 AI Anti-Money Laundering

- C3 AI Cash Management

- C3 AI Contested Logistics

- C3 AI CRM

- C3 AI Decision Advantage

- C3 AI Demand Forecasting

- C3 AI Energy Management

- C3 AI ESG

- C3 AI Health

- C3 AI Intelligence Analysis

- C3 AI Inventory Optimization

- C3 AI Process Optimization

- C3 AI Production Schedule Optimization

- C3 AI Property Appraisal

- C3 AI Readiness

- C3 AI Reliability

- C3 AI Smart Lending

- C3 AI Supply Network Risk – bak

- C3 AI Turnaround Optimization

- C3 Generative AI Constituent Services

- C3 Law Enforcement

- C3 Agentic AI Platform

- C3 Generative AI

- Get Started with a C3 AI Pilot

- Industries

- Customers

- Events

- Resources

- Generative AI for Business

- Generative AI for Business

- C3 Generative AI: How Is It Unique?

- Reimagining the Enterprise with AI

- What To Consider When Using Generative AI

- Why Generative AI Is ‘Like the Internet Circa 1996’

- Can the Generative AI Hallucination Problem be Overcome?

- Transforming Healthcare Operations with Generative AI

- Data Avalanche to Strategic Advantage: Generative AI in Supply Chains

- Supply Chains for a Dangerous World: ‘Flexible, Resilient, Powered by AI’

- LLMs Pose Major Security Risks, Serving As ‘Attack Vectors’

- What Is Enterprise AI?

- Machine Learning

- Introduction

- What is Machine Learning?

- Tuning a Machine Learning Model

- Evaluating Model Performance

- Runtimes and Compute Requirements

- Selecting the Right AI/ML Problems

- Best Practices in Prototyping

- Best Practices in Ongoing Operations

- Building a Strong Team

- About the Author

- References

- Download eBook

- All Resources

- Publications

- Customer Viewpoints

- Blog

- Glossary

- Developer Portal

- Generative AI for Business

- News

- Company

- Contact Us

- Introduction

- What is Machine Learning?

- Tuning a Machine Learning Model

- Evaluating Model Performance

- Runtimes and Compute Requirements

- Selecting the Right AI/ML Problems

- Best Practices in Prototyping

- Problem Scope and Timeframes

- Cross-Functional Teams

- Getting Started by Visualizing Data

- Common Prototyping Problem – Information Leakage

- Common Prototyping Problem – Bias

- Pressure-Test Model Results by Visualizing Them

- Model the Impact to the Business Process

- Model Interpretability Is Critical to Driving Adoption

- Ensuring Algorithm Robustness

- Planning for Risk Reviews and Audits

- Best Practices in Ongoing Operations

- Building a Strong Team

- About the Author

- References

- Download e-Book

- Machine Learning Glossary

Selecting the Right AI/ML Problems

While the potential economic benefits of AI/ML are substantial, many organizations struggle with capturing business value from AI. Many enterprises have widespread AI prototype efforts, but few companies are able to run and scale AI algorithms in production. Fewer still are able to unlock significant business value from their AI/ML efforts.

Based on our work with several of the largest enterprises in the world, the most critical factor in unlocking value from AI/ML is the selection of the right problems to tackle and scale up across the company.

During problem selection, managers should think through three critical dimensions. Managers should ensure that the problems they select (1) are tractable, with reasonable scope and solution times; (2) unlock sufficient business value and can be operationalized to enable value capture; and (3) address ethical considerations.

Tractable Problems

Ability to Solve the Problem

A first step to AI/ML problem selection is ensuring that the problem actually can be solved. This involves thinking through the premise and formulation of the problem. At their core, many AI/ML tasks are prediction problems – and data are at the center of such problems.

That’s why a consideration of problem tractability should involve analysis of the available data. For supervised learning problems, this involves thinking through whether sufficient historical data are available and whether there are sufficient data signals and labels for an algorithm to be trained successfully.

Data Availability

For many use cases that involve supervised learning problems, the number and quality of available labels becomes a key limiting issue. Supervised models typically require hundreds of labels for training and often thousands, or even millions, of labels to learn to accurately predict outcomes. Many organizations may not have the historical data sets needed to support supervised learning models, particularly because the underlying enterprise IT systems and data models were never designed with either machine learning or labels in mind.

For unsupervised learning problems, this involves thinking through whether sufficient “normal” historical periods can be identified for the algorithms to determine what a range of normal operations look like.

Problem Formulation

Another factor that must be considered up front is whether data scientists and SMEs consider the fundamental problem formulation to be tractable. There is an art to analyzing problem tractability.

Problem tractability analysis may involve assessing whether there are sufficient signals encoded within the data set to predict a specific outcome, whether humans could solve the problem given the right data, or whether a solution could be found given the fundamental physics involved.

For example, consider a problem in which a bank is trying to identify individuals involved in money laundering activities. The bank has years of transaction records, with millions of transactions that contain useful information about money transfers and counterparties. The bank also has significant contextual information about its customers, their backgrounds, and their relationships, and access to external data sources including news feeds and social media. The bank also may have thousands of historical suspicious activity reports to act as labels from which an algorithm could learn.

Further, humans may be able to diagnose and identify individual money laundering cases very well – but humans can’t scale to interpret data from millions of transactions and customer accounts. This is an example of a data-rich and label-rich environment in which the tractability of the problem formulation is established – humans can perform the diagnostic task to a limited degree – but the key challenge is performing the identification task at scale and with high fidelity. This is a good, tractable problem for a supervised machine learning algorithm.

Contrast this with a different example problem, in which an operator is trying to predict the failure of a very expensive and complex bespoke machine. The machine may be only partially instrumented with few input signals and may only have one or two historical failures from which an algorithm could learn. Given the available signals and data inputs, human operators may not be able to effectively predict upcoming failures. This is an example of a data-poor and label-poor environment and system in which the tractability of the problem formulation is unclear. Ultimately, this may not be a tractable problem for a supervised machine learning algorithm.

Tractable and Intractable Use Cases

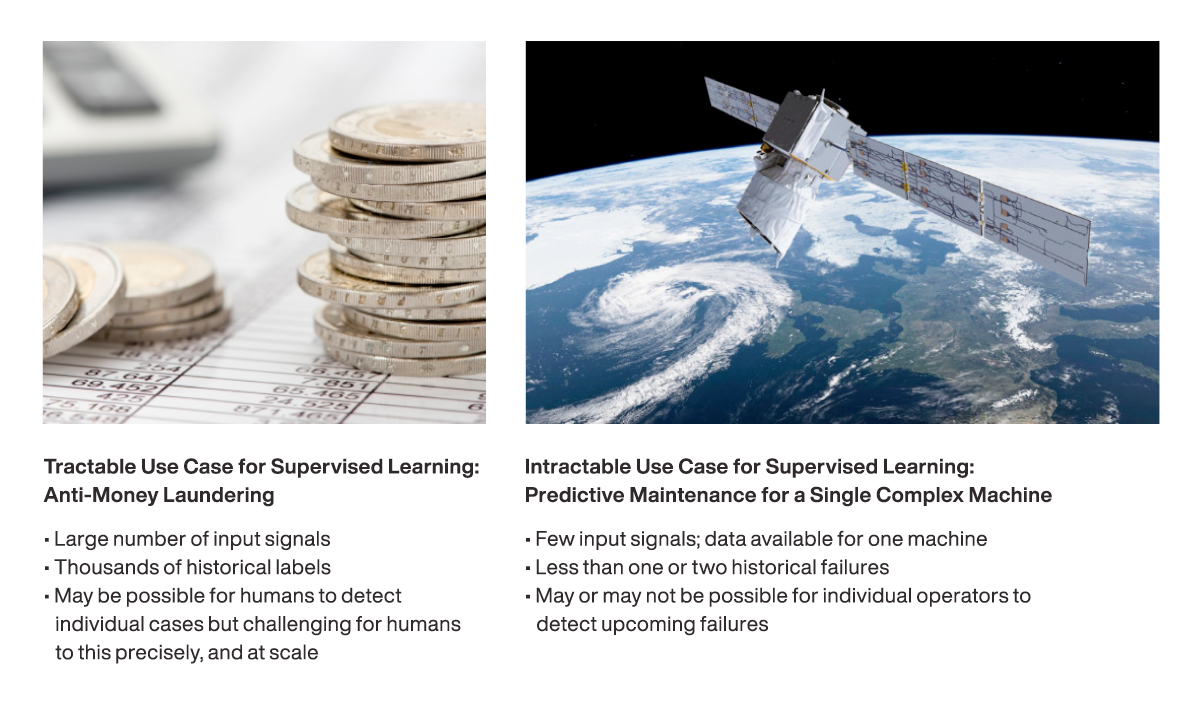

Depending on the amount of historical data and instrumentation available, this problem may be amenable to other AI/ML techniques (for example, unsupervised anomaly detection methods). But this is an example of a problem that solution teams may want to examine carefully before pursuing. The following figure summarizes examples of tractable and intractable ML use cases.

Figure 20: Examples of tractable and intractable machine learning use cases

In most organizations, understanding and analyzing available data requires input and cooperation from both IT managers and business managers or subject matter experts (SMEs). Business teams usually have a good understanding of their data sets, but do not understand the underlying source data systems. IT teams usually have a good understanding of the data sources, but usually do not know what the data represent.

Based on our experience, the data complexity for most enterprise business problems is significant. It is reasonable to assume that at least five or six disparate IT and operational software systems will be required to solve most real-world enterprise AI use cases that unlock substantial business value. At most organizations, the individual IT source systems weren’t designed to interoperate and typically have widely varying definitions of business entities and ground truth.

A cross-functional business and IT team is required to identify a range of relevant data sources for any problem (a combination of all sources that have relevant signals and labels) and then to analyze those sources to characterize the available data.