- AI Software

- C3 AI Applications

- C3 AI Applications Overview

- C3 AI Anti-Money Laundering

- C3 AI Cash Management

- C3 AI Contested Logistics

- C3 AI CRM

- C3 AI Decision Advantage

- C3 AI Demand Forecasting

- C3 AI Energy Management

- C3 AI ESG

- C3 AI Health

- C3 AI Intelligence Analysis

- C3 AI Inventory Optimization

- C3 AI Process Optimization

- C3 AI Production Schedule Optimization

- C3 AI Property Appraisal

- C3 AI Readiness

- C3 AI Reliability

- C3 AI Smart Lending

- C3 AI Supply Network Risk – bak

- C3 AI Turnaround Optimization

- C3 Generative AI Constituent Services

- C3 Law Enforcement

- C3 Agentic AI Platform

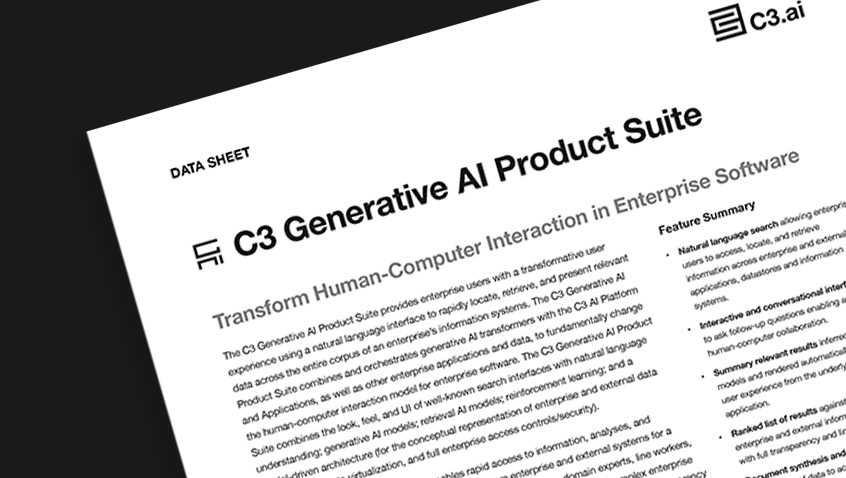

- C3 Generative AI

- Get Started with a C3 AI Pilot

- Industries

- Customers

- Events

- Resources

- Generative AI for Business

- Generative AI for Business

- C3 Generative AI: How Is It Unique?

- Reimagining the Enterprise with AI

- What To Consider When Using Generative AI

- Why Generative AI Is ‘Like the Internet Circa 1996’

- Can the Generative AI Hallucination Problem be Overcome?

- Transforming Healthcare Operations with Generative AI

- Data Avalanche to Strategic Advantage: Generative AI in Supply Chains

- Supply Chains for a Dangerous World: ‘Flexible, Resilient, Powered by AI’

- LLMs Pose Major Security Risks, Serving As ‘Attack Vectors’

- What Is Enterprise AI?

- Machine Learning

- Introduction

- What is Machine Learning?

- Tuning a Machine Learning Model

- Evaluating Model Performance

- Runtimes and Compute Requirements

- Selecting the Right AI/ML Problems

- Best Practices in Prototyping

- Best Practices in Ongoing Operations

- Building a Strong Team

- About the Author

- References

- Download eBook

- All Resources

- Publications

- Customer Viewpoints

- Blog

- Glossary

- Developer Portal

- Generative AI for Business

- News

- Company

- Contact Us

- Generative AI for Business

- Reimagining the Enterprise with AI

- What To Consider When Using Generative AI

- Why Generative AI Is ‘Like the Internet Circa 1996’

- Can the Generative AI Hallucination Problem be Overcome?

- Transforming Healthcare Operations with Generative AI

- Data Avalanche to Strategic Advantage: Generative AI in Supply Chains

- Supply Chains for a Dangerous World: ‘Flexible, Resilient, Powered by AI’

- LLMs Pose Major Security Risks, Serving As ‘Attack Vectors’

- C3 Generative AI: Getting the Most Out of Enterprise Data

- The Key to Generative AI Adoption: ‘Trusted, Reliable, Safe Answers’

- Generative AI in Healthcare: The Opportunity for Medical Device Manufacturers

- Generative AI in Healthcare: The End of Administrative Burdens for Workers

- Generative AI for the Department of Defense: The Power of Instant Insights

- C3 AI’s Generative AI Journey

- What Makes C3 Generative AI Unique

- How C3 Generative AI Is Transforming Businesses

C3 Generative AI: How Is It Unique?

What Makes C3 Generative AI Unique

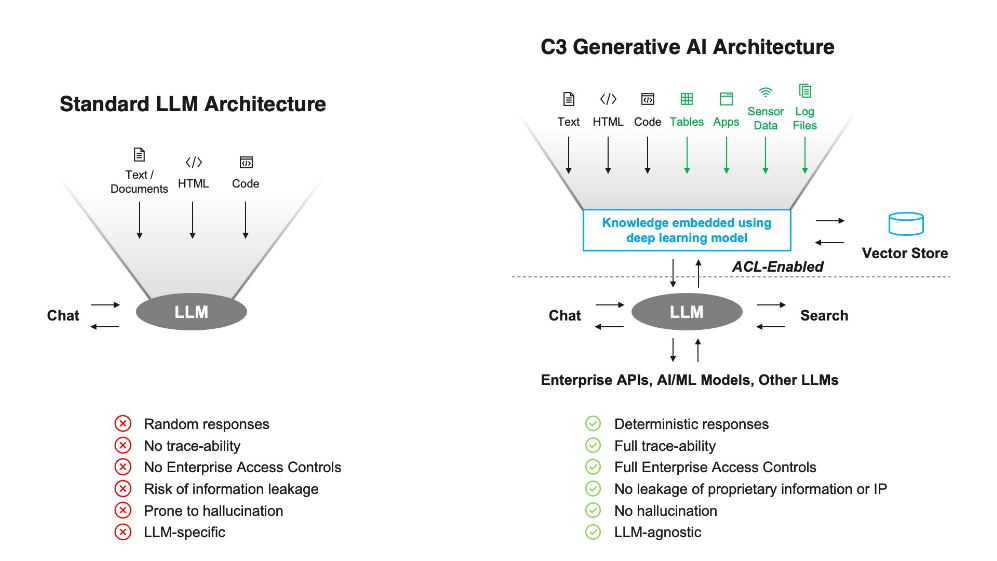

Standard LLMs vs. C3 Generative AI’s solution

Six critical capabilities differentiate C3 Generative AI from consumer generative AI applications – making it the only generative AI solution that businesses and their users can rely on to get consistent, trusted, and secure answers to all of their business questions.

1. Consistent Answers

One problem with GPT LLMs is that they frequently provide random, inconsistent responses. In fact, two people can ask the same question and get different answers. LLMs aren’t designed to provide the precise, deterministic answers necessary for any commercial or government application.

Generative AI for the enterprise must provide consistent answers and handle nuance. If two people ask the same question, or even phrase it in slightly different ways, the system needs to connect to an overall technical architecture that ensures those queries are routed to the applicable data sources within the enterprise to generate identical and correct answers. To do so, C3 Generative AI accesses an organization’s entire corpus of data, including ERP, CRM, SCADA, text, PDFs, Excel, PowerPoint, and sensor data.

To produce deterministic, traceable, accurate answers, C3 Generative AI doesn’t rely on the LLM to come up with the answer. Instead, the LLM delivers the query to a different system (a retrieval model connected to a vector store) to understand which documents or data sources are the most relevant. With this method, the answers generated always come from the best sources in the system at that given moment, ensuring answers that an enterprise user can trust.
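To make the retrieval step more concrete, here is a minimal sketch in Python. It is not C3 AI’s implementation: the document names, the toy bag-of-words `embed` function, and the in-memory `DOCUMENTS` store are all assumptions made for illustration. A production system would use a learned embedding model and a real vector store.

```python
import math
from collections import Counter

# Hypothetical in-memory "vector store": source id -> text content.
DOCUMENTS = {
    "erp/inventory.csv": "current inventory levels by warehouse and part number",
    "crm/accounts.pdf": "customer account renewals and contract values",
    "scada/pump_07.log": "pump vibration sensor readings and alarm history",
}

def embed(text):
    """Toy embedding: word counts (a stand-in for a learned embedding model)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, k=1):
    """Return the ids of the k most similar sources, ranked deterministically."""
    q = embed(query)
    scored = [(cosine(q, embed(text)), doc_id) for doc_id, text in DOCUMENTS.items()]
    # Sort by score (highest first), then by id so ties always break the same way.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:k]]

# Two phrasings of the same question are routed to the same source.
print(retrieve("pump vibration alarm history"))
print(retrieve("history of pump vibration"))
```

Because the ranking is a pure function of the query and the stored sources, the same question returns the same source every time, which is the property the paragraph above describes.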

2. Full Traceability

The speed at which LLMs provide seemingly polished answers can create the impression those answers are accurate and authoritative. They are not. In addition to sometimes providing random answers, consumer LLM applications lack what’s known as traceability. Put simply, they don’t have the ability to track and provide information about the datasets they’re relying on to generate answers. They are not designed to do so, making it all but impossible to check the veracity of any claim.

It is a level-zero requirement that any generative AI solution for the enterprise always indicate where its answers come from. It should synthesize an answer and provide a chat interface so the user can dive deeper with follow-up questions, and the dashboard needs to provide instant traceability. C3 Generative AI provides all of that. It’s similar to searching the web, where the user can decide whether a website that’s served up is credible. If business users are going to take action based on answers from generative AI, they must be able to trust the software’s response.

3. Full Access Controls

Every business and government agency gives different access to different employees. The general counsel can see documents other employees can’t. The same is true for the CFO and everyone in various functions across an organization.

One of the significant opportunities with generative AI is that people throughout an organization, and not just business and data analysts, can take advantage of the technology to do their jobs far better and far more productively. It’s also an opportunity to upskill more junior employees. To make all this possible, a generative AI enterprise solution must provide rigorous access controls to ensure each user gets fast and accurate answers while maintaining security and data confidentiality for your entire enterprise. C3 Generative AI manages access via a comprehensive role- and policy-based framework built into the underlying C3 AI Platform.
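As a rough illustration of role-based filtering, the Python sketch below restricts the sources a user can query to those their roles permit. The `Document` and `User` types, the role names, and the file names are hypothetical; they are not the C3 AI Platform’s access-control framework, which the article does not describe in detail.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Document:
    doc_id: str
    allowed_roles: frozenset  # roles permitted to read this document

@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset  # roles assigned to this user

def visible_documents(user, documents):
    """Keep only the documents that share at least one role with the user."""
    return [d.doc_id for d in documents if user.roles & d.allowed_roles]

# Hypothetical corpus with per-document role lists.
DOCS = [
    Document("legal/litigation_memo.pdf", frozenset({"general_counsel"})),
    Document("finance/q3_forecast.xlsx", frozenset({"cfo", "finance_analyst"})),
    Document("hr/holiday_calendar.pdf",
             frozenset({"employee", "cfo", "general_counsel", "finance_analyst"})),
]

analyst = User("analyst", frozenset({"finance_analyst"}))
print(visible_documents(analyst, DOCS))
```

Applying the filter before retrieval, rather than after an answer is generated, means restricted content never reaches the answer-generation step for a user who is not entitled to see it.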

4. Protection From LLM-Caused Leakage of Proprietary Information

In offices everywhere, millions of workers are using ChatGPT and other LLM-based generative AI tools to help them do their jobs, even as their bosses and IT departments are often unaware. What workers likely don’t realize is that any time they engage with a consumer generative AI service, what they feed into the system (the prompts they enter to help generate marketing announcements, say, or to request code) is then stored on external servers, resulting in IP and trade secret exfiltration.

In May 2023, Samsung banned employees from using ChatGPT after discovering that some of its sensitive code had been uploaded to the service. Other companies have also cracked down on the use of ChatGPT and similar generative AI tools. Such risks don’t exist with C3 Generative AI. With C3 AI’s solution, the deep learning models that derive answers do so on the other side of a firewall built into the C3 AI Platform. As such, all queries, along with all processing and data analysis, happen within your enterprise systems without connection to the internet. The result is zero risk of IP or data exfiltration by the LLM.

5. Free of Hallucination

As ChatGPT and other LLMs have soared in popularity, so has attention to the fact that their seemingly remarkable answers are sometimes flat-out wrong. The LLMs that interpret a user’s queries don’t have a good way to check the accuracy of source data, nor are they designed to tell you when they don’t know an answer. Similar to the iPhone keyboard’s predictive-text tool, LLMs form coherent statements by stitching together data (words, characters, and numbers) based on the probability of each piece of data succeeding the previously generated piece of data.

When LLMs do not know an answer, they frequently make one up. The results are pure fiction – technically known as hallucination. Any generative AI solution for the enterprise must prevent hallucination.
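The predictive-text behavior described above can be sketched in a few lines of Python. The probability table is invented for illustration, and a real LLM computes such probabilities with a neural network over a very large vocabulary. The point is only that sampling the next token by probability yields fluent output with no check against any source of truth.

```python
import random

# Hypothetical next-token probabilities, keyed by the previous token.
NEXT_TOKEN_PROBS = {
    "revenue": {"rose": 0.5, "fell": 0.3, "doubled": 0.2},
    "rose": {"sharply": 0.6, "slightly": 0.4},
    "fell": {"sharply": 0.5, "slightly": 0.5},
    "doubled": {"overnight": 1.0},
}

def generate(start, rng, max_tokens=3):
    """Extend `start` one token at a time by sampling from the probability table."""
    tokens = [start]
    while len(tokens) < max_tokens and tokens[-1] in NEXT_TOKEN_PROBS:
        choices = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = rng.choices(list(choices), weights=list(choices.values()))[0]
        tokens.append(next_token)
    return " ".join(tokens)

# Different random states stand in for different users asking the same question.
for seed in range(3):
    print(generate("revenue", random.Random(seed)))
```

Every completion reads as a plausible sentence, yet nothing in the procedure knows whether revenue actually rose or fell. That gap is what the retrieval-based design discussed in this section is meant to close.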

Rather than generate the answer itself, the LLM should hand off the query to an underlying orchestration agent that retrieves the answers from deep learning models already applied to an enterprise’s data. C3 AI solves this by not relying on the LLM to provide the answer. With C3 Generative AI, the LLMs have no direct access to the data. The LLM serves as an interface to the enterprise AI software already applied to an organization, and that software is what provides reliable responses. Even better, an ideal generative AI system for the enterprise should tell a user when it doesn’t know an answer instead of generating an answer strictly because that’s what it’s trained to do. C3 Generative AI only provides an answer when it’s certain the answer is correct. If it can’t find an answer, it tells you so. Deterministic answers. No hallucination.
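One way to picture the “tell the user when it doesn’t know” behavior is a lookup that answers only when a source matches the query well enough, and otherwise says so. The Python sketch below is an assumption-laden illustration, not C3 Generative AI’s orchestration agent: the word-overlap score, the `min_overlap` threshold, and the sample source are all hypothetical.

```python
# Hypothetical source content, keyed by source id.
SOURCES = {
    "maintenance/pump_07.txt": "pump 07 was last serviced in the spring shutdown",
}

NO_ANSWER = "No answer found in the available sources."

def answer(query, sources, min_overlap=2):
    """Return (text, source_id), or (NO_ANSWER, None) when nothing matches well enough."""
    query_terms = set(query.lower().split())
    best_id, best_overlap = None, 0
    for source_id in sorted(sources):  # sorted so ties resolve the same way every time
        overlap = len(query_terms & set(sources[source_id].lower().split()))
        if overlap > best_overlap:
            best_id, best_overlap = source_id, overlap
    if best_id is None or best_overlap < min_overlap:
        return (NO_ANSWER, None)
    # The answer is the source text itself, returned with its id for traceability.
    return (sources[best_id], best_id)

print(answer("when was pump 07 last serviced", SOURCES))
print(answer("what is on the cafeteria menu", SOURCES))
```

The first query returns the stored text together with the id of the source it came from; the second returns the refusal message. A crude word-overlap score like this one would be fooled by common words, so a real system would need a far more careful relevance measure.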

6. LLM Agnostic

Importantly, C3 Generative AI is LLM agnostic. That means C3 AI can use whichever LLM is best suited for a particular customer or industry, and we can take advantage of the rapid innovation in LLM development. If a new LLM emerges that has distinct advantages, we can incorporate it into our architecture. We also fine-tune LLMs so they can support highly secure environments required by some customers.

C3 AI supports Azure GPT-3.5, Google PaLM 2, AWS Bedrock Claude 2, AWS Bedrock Titan, C3 AI Fine Tuned Falcon-40B, C3 AI Fine Tuned Llama 2, and C3 AI Fine Tuned FLAN T5.
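In software terms, being LLM agnostic usually means the rest of the system depends on a small interface rather than on any one model. The Python sketch below shows that general pattern under stated assumptions: the `LLM` protocol, the two stand-in model classes, and the `REGISTRY` are hypothetical and do not reflect C3 AI’s architecture or any vendor’s API.

```python
from typing import Protocol

class LLM(Protocol):
    """Anything with a complete(prompt) -> str method can be plugged in."""
    def complete(self, prompt: str) -> str: ...

class EchoModel:
    """Stand-in for one hosted model."""
    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"

class ShoutModel:
    """Stand-in for a different, fine-tuned model."""
    def complete(self, prompt: str) -> str:
        return f"[shout] {prompt.upper()}"

# Hypothetical registry: swapping models is a configuration change, not a code change.
REGISTRY: dict[str, LLM] = {"echo": EchoModel(), "shout": ShoutModel()}

def interpret_query(model_name: str, user_query: str) -> str:
    """Pass the user's query to whichever model is configured."""
    llm = REGISTRY[model_name]
    return llm.complete(user_query.strip())

print(interpret_query("echo", "  total spend by supplier?  "))
print(interpret_query("shout", "total spend by supplier?"))
```

Adding a newly released model then amounts to writing one more class with a `complete` method and registering it.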