What is Enterprise AI?

Cloud Vendor Tools

An alternative to the open source cluster is to assemble the various services and microservices offered by the cloud providers into a working, cohesive enterprise AI and IoT platform. As depicted in Figure 5, leading vendors like AWS are developing increasingly useful services and microservices that in many cases replicate the functionality of the open source providers and in others provide new and unique functionality. The advantage of this approach over open source is that these products are developed, tested, and quality assured by professional enterprise engineering organizations. In addition, these services were generally designed and developed to work together and interact in a common system. The same points hold true for Google, Azure, and IBM.

Figure 5

Cloud Vendor Tools—AWS

Public cloud platforms like AWS, Azure, and Google Cloud offer an increasing number of tools and microservices, but stitching them together to build enterprise-class AI and IoT applications is exceedingly complex and costly.

The problem with this approach is that these systems lack a model-driven architecture like that of the C3 AI Platform, described in the following section, so programmers still need to employ structured programming to stitch together the various services. This results in the same type of complexity previously described: many lines of spaghetti code and numerous interdependencies that create brittle applications that are difficult and costly to maintain.

The difference between using structured programming with cloud vendor services and using the model-driven architecture of the C3 AI Platform is dramatic. To demonstrate this stark difference, C3 AI commissioned a third-party consultancy to develop an AI reliability application designed to run on the AWS cloud platform. The consultancy – a Premier AWS Consulting Partner, with significant experience developing enterprise applications on AWS for Fortune 2000 customers – was asked to develop the application using two different approaches: the C3 AI Platform and structured programming.

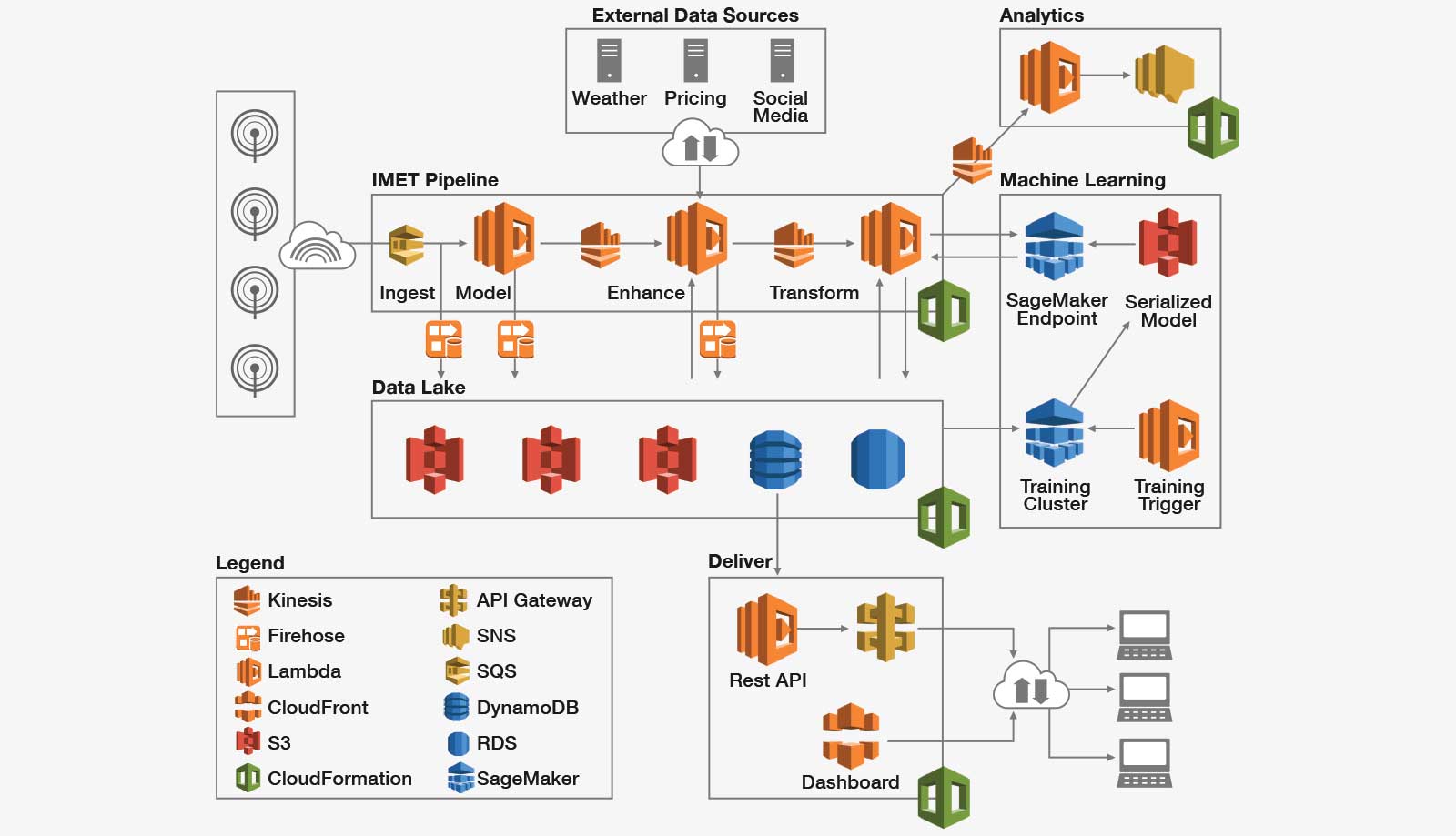

The reference architecture for this relatively simple reliability application, built using structured programming and AWS services, is shown in Figure 6. Developing and deploying the application took 120 person-days at a cost of $458,000 in 2019 dollars. The effort required writing 16,000 lines of code that must be maintained over the life of the application. The resulting application runs only on AWS. To run on Google or Azure, it would have to be completely rebuilt for each of those platforms, with similar cost, time, and coding effort.

Figure 6

Architecture to Build a Basic Reliability Application on AWS

Building even a simple AI reliability application using microservices of a public cloud (AWS in this example) and a structured programming approach takes 40 times the work effort of using a model-driven architecture.

By contrast, using the C3 AI Platform with its modern model-driven architecture, the same application, employing the same AWS services, was developed and tested in 5 person-days at a cost of approximately $19,000. Only 14 lines of code were generated, dramatically decreasing the lifetime cost of maintenance. Moreover, the application will run on any cloud platform without modification, eliminating the additional effort and cost of refactoring the application when moving it to a different cloud vendor.
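The figures quoted above can be compared directly. The following is a minimal sketch of that arithmetic, not part of the original study: the names `BuildEffort`, `ratios`, and `multi_cloud_cost` are hypothetical, and the multi-cloud estimate assumes that "similar cost" means each rebuild costs the same as the first build.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BuildEffort:
    """Effort figures for one build of the reliability application."""
    person_days: int
    cost_usd: int
    lines_of_code: int


# Figures as quoted in the text (the $19,000 cost is stated as approximate).
structured = BuildEffort(person_days=120, cost_usd=458_000, lines_of_code=16_000)
model_driven = BuildEffort(person_days=5, cost_usd=19_000, lines_of_code=14)


def ratios(baseline: BuildEffort, alternative: BuildEffort) -> dict[str, float]:
    """Return how many times larger each baseline figure is than the alternative."""
    return {
        "person_days": baseline.person_days / alternative.person_days,
        "cost_usd": baseline.cost_usd / alternative.cost_usd,
        "lines_of_code": baseline.lines_of_code / alternative.lines_of_code,
    }


def multi_cloud_cost(effort: BuildEffort, clouds: int) -> int:
    """Total cost if the application is rebuilt once per cloud.

    Assumption: every rebuild costs the same as the first build.
    """
    return effort.cost_usd * clouds


if __name__ == "__main__":
    for metric, value in ratios(structured, model_driven).items():
        print(f"{metric}: {value:,.1f}x")
    print(f"AWS + Google + Azure rebuilds: ${multi_cloud_cost(structured, 3):,}")
```

On these numbers, the person-day and cost ratios both come out near 24, and the lines-of-code ratio is above 1,100. The figure of 40 in the Figure 6 caption is not reproduced by these three numbers alone.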